Understanding the vulnerabilities of AI systems and the safeguards that protect their data is crucial in today’s data-driven world. Artificial intelligence systems, while offering transformative potential, are increasingly vulnerable to sophisticated attacks. From data poisoning to model theft, the risks are substantial, affecting not only organizational reputations but also sensitive user information. This guide examines the core vulnerabilities of AI systems, reviews effective safeguarding techniques, and highlights the critical role of human awareness in maintaining a secure AI environment.

Understanding these complexities is paramount for organizations seeking to harness the power of AI responsibly.

The rapid advancement of AI has outpaced the development of comprehensive security protocols in many instances. This leaves organizations exposed to a range of threats, including malicious data manipulation, unauthorized access, and intellectual property theft. This guide will equip you with the knowledge and strategies to navigate these challenges, providing practical steps to secure your AI systems and data.

Introduction to AI Data Security

The rapid proliferation of artificial intelligence (AI) systems across various sectors has ushered in an era of unprecedented technological advancement, but it has also created a new and evolving landscape of data security threats. AI’s reliance on vast amounts of data for training and operation makes it a prime target for malicious actors seeking to exploit vulnerabilities, steal sensitive information, or disrupt operations.

Understanding and mitigating these risks is paramount for organizations deploying AI systems. Proactive security measures are no longer optional; they are essential for ensuring the integrity, confidentiality, and availability of AI-driven applications and the data they use. Reactive approaches, which address breaches only after they occur, are insufficient in the fast-changing AI environment.

Proactive measures, including robust data encryption, access control mechanisms, and regular security audits, are crucial for preventing breaches and minimizing damage. Furthermore, a proactive approach fosters a security-conscious culture within organizations, encouraging employees to report potential vulnerabilities and adopt secure practices. This layered approach significantly strengthens an organization’s overall security posture.

Real-World AI Data Breaches and Their Consequences

High-profile data breaches have shown the damaging consequences of inadequate security measures. The 2017 Equifax breach, for example, did not involve AI. It did, however, expose the weakness of massive databases holding sensitive personal information. AI systems depend on exactly these kinds of data stores, inherit their risks, and can amplify them. Hypothetical scenarios show how the stakes rise once AI is involved: a malicious actor who compromised a self-driving car’s AI system could cause accidents or even fatalities.

Similarly, a breach targeting an AI-powered medical diagnostic system could lead to misdiagnosis and harm patients. The financial consequences of such breaches can be substantial, including regulatory fines, legal costs, reputational damage, and loss of customer trust. These incidents underscore the critical need for robust security protocols tailored to the specific vulnerabilities of AI systems.

Common Vulnerabilities in AI Systems

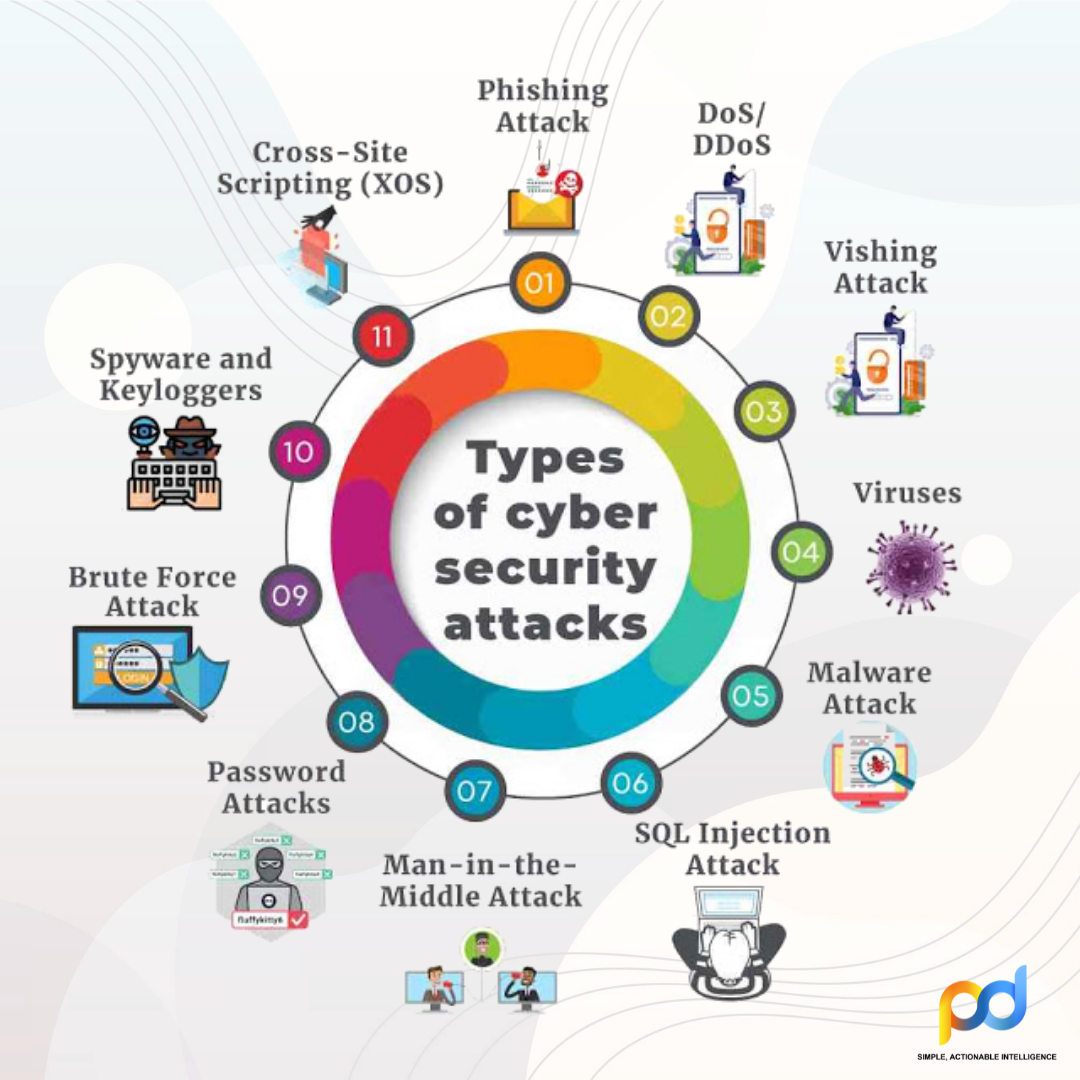

AI systems, while offering transformative capabilities, are susceptible to a range of security vulnerabilities that can compromise data integrity, confidentiality, and availability. These vulnerabilities stem from the unique characteristics of AI, including its reliance on vast datasets, complex algorithms, and often opaque decision-making processes. Understanding them is crucial for developing effective safeguards and mitigating potential risks. AI data security threats are multifaceted and affect every stage of the AI lifecycle, from data collection and training to model deployment and maintenance.

The following sections detail some of the most prevalent vulnerabilities and the associated risks.

Top Five Vulnerabilities in AI Data Security

Five key vulnerabilities consistently emerge as significant threats to AI data security: data poisoning, model extraction, adversarial attacks, model inversion, and backdoor attacks. Each presents unique challenges and requires tailored mitigation strategies. Data poisoning, for instance, involves manipulating training data to compromise the model’s accuracy or introduce malicious behavior. Model extraction aims to replicate a model’s functionality without access to its underlying code or data, typically by querying the model repeatedly and training a substitute on its responses.

Adversarial attacks exploit vulnerabilities in model inputs to cause misclassifications or unexpected outputs. Model inversion seeks to recover sensitive training data from the model itself. Finally, backdoor attacks insert hidden triggers into the model, activating malicious behavior under specific conditions.

Data Poisoning Attacks and Associated Risks

Data poisoning attacks represent a significant threat to AI systems. These attacks involve injecting malicious or manipulated data into the training dataset, thereby influencing the model’s behavior in undesirable ways. For example, an attacker might subtly alter images in a facial recognition dataset to misclassify certain individuals or inject biased data to create discriminatory outcomes. The risks associated with data poisoning are substantial.

A poisoned model might make inaccurate predictions, leading to flawed decisions in critical applications such as medical diagnosis or autonomous driving. Furthermore, poisoned models can be exploited to manipulate systems, potentially leading to financial losses, reputational damage, or even physical harm. The damage a successful data poisoning attack can cause grows with how heavily decisions depend on the model and how critical its application is.

Consider a self-driving car trained on a poisoned dataset containing manipulated images of traffic signs; the consequences of misclassification could be catastrophic.

Securing AI Models During Training and Deployment

Securing AI models throughout their lifecycle, from training to deployment, presents unique challenges. During training, securing the training data is paramount. This includes protecting against unauthorized access, modification, or deletion. Robust access control mechanisms, data encryption, and secure storage solutions are essential. Furthermore, rigorous data validation and anomaly detection techniques can help identify and mitigate data poisoning attempts.
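The Python below is a minimal sketch of the anomaly-detection step. It flags training values whose modified z-score exceeds 3.5, a common rule-of-thumb cutoff. The modified z-score is built from the median and the median absolute deviation (MAD). The function name, the threshold, and the sample batch are illustrative assumptions.

A per-feature filter like this catches crude outliers only. Carefully crafted poisoned samples that look statistically normal will pass it, so it complements secure data handling and access controls rather than replacing them.

```python
import statistics


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute deviation
    (MAD) instead of the mean and standard deviation, so a few extreme
    poisoned points cannot drag the baseline toward themselves.
    """
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # More than half the values are identical; treat any deviation as suspect.
        return [i for i, v in enumerate(values) if v != median]
    return [
        i
        for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]


# Hypothetical feature column from an incoming training batch.
batch = [1.0, 1.1, 0.9, 1.05, 0.95, 9.0]
print(flag_outliers(batch))  # [5]
```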

During deployment, the focus shifts to protecting the deployed model from adversarial attacks and model extraction attempts. This may involve input validation, model obfuscation, and robust monitoring to detect anomalies in model behavior. Regular model updates and retraining with fresh, clean data can also help mitigate the impact of vulnerabilities discovered after deployment. Implementing these safeguards across the entire lifecycle ensures a more resilient and secure AI system.

Comparison of AI Data Vulnerabilities

| Vulnerability Type | Description | Impact | Mitigation Strategies |
|---|---|---|---|
| Data Poisoning | Malicious data injected into training data | Inaccurate predictions, biased outcomes | Data validation, anomaly detection, secure data handling |
| Model Extraction | Unauthorized replication of model functionality | Intellectual property theft, unauthorized access | Model obfuscation, watermarking, access control |
| Adversarial Attacks | Manipulated inputs causing model misclassification | System malfunction, inaccurate predictions | Input validation, robust model design, adversarial training |
| Model Inversion | Recovery of training data from the model | Data breach, privacy violation | Differential privacy, model sanitization |
| Backdoor Attacks | Hidden triggers activating malicious behavior | Unforeseen consequences, system compromise | Robust model training, anomaly detection, rigorous testing |

Data Privacy and AI

The intersection of artificial intelligence and data privacy presents significant challenges and opportunities. AI systems, by their very nature, require vast amounts of data to learn and function effectively. However, much of this data is sensitive, containing personally identifiable information (PII) or other confidential details. Balancing the need for data-driven AI with the imperative to protect individual privacy requires a robust understanding and implementation of data privacy best practices and regulatory compliance. Using AI effectively while maintaining data privacy calls for a multi-faceted approach with three parts:

- data anonymization and pseudonymization techniques;
- a well-defined framework for complying with relevant regulations;
- careful selection and implementation of data encryption methods.

These elements work in concert to create a secure environment for AI development and deployment.

Anonymization and Pseudonymization Techniques

Effective anonymization and pseudonymization are crucial for protecting sensitive data used in AI development and training. Anonymization aims to remove all identifying information from a dataset, making it impossible to link the data back to individuals. Pseudonymization, on the other hand, replaces identifying information with pseudonyms, allowing for data linkage while protecting individual identities. Robust anonymization requires careful consideration of various identification methods and potential re-identification risks.

For example, simply removing names and addresses may not be sufficient. Other seemingly innocuous data points, such as age, gender, and location, can in combination still enable re-identification through linkage attacks. Formal privacy models help mitigate these risks:

- **k-anonymity** requires that each individual’s record be indistinguishable from at least k-1 other records on its quasi-identifiers (attributes that could lead to re-identification).
- **l-diversity** additionally requires that each such group of indistinguishable records contain at least l well-represented values of the sensitive attribute. Membership in a group then does not reveal, say, a diagnosis.
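Here is a small Python sketch that makes the two definitions concrete. It measures two things for a dataset that has already been generalized:

- k, the size of the smallest group of records sharing the same quasi-identifier values;
- distinct l-diversity, the fewest distinct sensitive values found in any such group.

The helper names and the sample records are hypothetical. Distinct l-diversity is the simplest of several l-diversity variants.

```python
from collections import defaultdict


def equivalence_classes(records, quasi_identifiers):
    """Group records that share the same values on every quasi-identifier."""
    classes = defaultdict(list)
    for record in records:
        key = tuple(record[q] for q in quasi_identifiers)
        classes[key].append(record)
    return classes


def k_anonymity(records, quasi_identifiers) -> int:
    """Size of the smallest equivalence class: the dataset is k-anonymous for this k."""
    groups = equivalence_classes(records, quasi_identifiers).values()
    return min(len(group) for group in groups)


def l_diversity(records, quasi_identifiers, sensitive) -> int:
    """Fewest distinct sensitive values in any equivalence class (distinct l-diversity)."""
    groups = equivalence_classes(records, quasi_identifiers).values()
    return min(len({record[sensitive] for record in group}) for group in groups)


# Hypothetical, already-generalized records (ages banded, ZIP codes truncated).
records = [
    {"age": "30-39", "gender": "F", "zip": "021**", "diagnosis": "flu"},
    {"age": "30-39", "gender": "F", "zip": "021**", "diagnosis": "asthma"},
    {"age": "30-39", "gender": "F", "zip": "021**", "diagnosis": "flu"},
    {"age": "40-49", "gender": "M", "zip": "021**", "diagnosis": "diabetes"},
    {"age": "40-49", "gender": "M", "zip": "021**", "diagnosis": "diabetes"},
]
qis = ("age", "gender", "zip")
print(k_anonymity(records, qis))               # 2
print(l_diversity(records, qis, "diagnosis"))  # 1
```

Here the data is 2-anonymous, yet the diagnosis of a man in his forties from a 021** ZIP code still leaks. Anyone who knows he is in the dataset learns that he has diabetes, because every record in his group says "diabetes". The l-diversity check exposes exactly this kind of homogeneity.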

Pseudonymization often involves creating unique identifiers for each individual, allowing data aggregation and analysis without exposing direct identities. Pseudonymized data is not anonymous, however. Anyone who holds or can reconstruct the mapping between pseudonyms and real identities can re-identify individuals, so careful management of that mapping is crucial to prevent unauthorized access and re-identification.
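One common way to implement pseudonymization is a keyed hash. The sketch below uses HMAC-SHA256 from Python’s standard library, with a hypothetical `pseudonymize` helper; the secret key plays the role of the mapping that must be protected. A keyed hash is preferable to a plain hash because identifiers such as email addresses are easy to guess. An attacker can hash a guessed identifier without a key and match the result against the data.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256.

    The same identifier and key always yield the same pseudonym, so records
    can still be joined across tables. Without the key, an attacker cannot
    recompute pseudonyms from a list of candidate identifiers, as they could
    with a plain unkeyed hash.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption: in practice the key lives in a secrets manager, apart from the data.
key = secrets.token_bytes(32)
a = pseudonymize("alice@example.com", key)
b = pseudonymize("alice@example.com", key)
c = pseudonymize("bob@example.com", key)
print(a == b, a == c)  # True False
```

The pseudonym is deterministic, so the same person links across datasets. That is what makes pseudonymized data useful, and it is also why whoever holds the key can re-identify individuals.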

Framework for Complying with Data Privacy Regulations

A comprehensive framework for complying with data privacy regulations like GDPR and CCPA is essential for responsible AI development. This framework should incorporate several key elements. First, a thorough Data Protection Impact Assessment (DPIA) should be conducted at the outset of any AI project to identify potential privacy risks and determine appropriate mitigation strategies. This assessment needs to identify the types of data used, the purpose of its use, the potential risks to individuals, and the measures to be taken to minimize those risks.

Next, data minimization principles should be strictly adhered to, using only the minimum amount of data necessary for the AI system to function effectively. Data governance procedures should be established to ensure the proper handling and storage of sensitive data, including access control mechanisms and data retention policies that align with regulatory requirements. Furthermore, transparency is vital; individuals should be informed about how their data is being used by the AI system, and they should have the right to access, correct, and delete their data.

Finally, mechanisms for addressing data breaches and ensuring accountability should be in place, allowing for swift and effective response in the event of a security incident.

Data Encryption Methods for AI Applications

Different encryption methods trade off security against performance differently, which affects their suitability for specific AI applications. Symmetric encryption, which uses a single key for both encryption and decryption, is fast but requires a secure way to share the key. AES (Advanced Encryption Standard) is the most widely used example and is generally considered robust and secure. Asymmetric encryption uses separate public and private keys. It simplifies key distribution because the public key can be shared openly, but it is considerably slower.

RSA (Rivest-Shamir-Adleman) is a common example, often used for key exchange in hybrid encryption systems. Homomorphic encryption allows computations to be performed on encrypted data without decryption, offering a high level of security for AI applications involving sensitive data processing. However, it is computationally intensive and currently less mature than other methods. The choice of encryption method depends on factors such as the sensitivity of the data, the computational resources available, and the specific requirements of the AI application.

For example, a real-time AI application might prioritize speed and choose symmetric encryption, while an application handling highly sensitive medical data might opt for homomorphic encryption despite its performance limitations.
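Homomorphic encryption is easiest to see with an additively homomorphic scheme. The sketch below is textbook Paillier encryption with deliberately tiny primes, so the arithmetic stays visible.

- Paillier is only partially homomorphic: it supports addition of encrypted values, not arbitrary computation.
- This is a teaching toy and offers no real security.
- Fully homomorphic schemes used for encrypted machine learning are far more complex and are normally used through dedicated libraries.

```python
import math
import secrets

# Toy key material; real Paillier keys use primes of 1024+ bits each.
P, Q = 61, 53
N = P * Q                      # public modulus
N_SQ = N * N
G = N + 1                      # standard simple choice of generator
LAM = math.lcm(P - 1, Q - 1)   # private: Carmichael function of N
MU = pow(LAM, -1, N)           # private: inverse of LAM mod N (valid because G = N + 1)


def encrypt(m: int) -> int:
    """Encrypt a plaintext 0 <= m < N using fresh randomness r."""
    if not 0 <= m < N:
        raise ValueError("plaintext out of range")
    while True:
        r = secrets.randbelow(N - 1) + 1  # 1 <= r < N
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover m using the private values LAM and MU."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


# A server holding only ciphertexts can total two values it cannot read.
total = add_encrypted(encrypt(120), encrypt(75))
print(decrypt(total))  # 195
```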

Safeguarding AI Data

Protecting AI systems and the sensitive data they process requires a multi-layered approach encompassing robust access controls, proactive threat detection, and regular security audits. Failure to implement these safeguards can lead to data breaches, model poisoning, and significant financial and reputational damage. This section details practical methods and techniques for securing AI data.

Implementing Robust Access Control Mechanisms

Effective access control is paramount in preventing unauthorized access to AI systems and their associated data. A layered approach, combining various access control methods, offers the strongest protection. This includes implementing granular permission systems, leveraging role-based access control (RBAC), and employing multi-factor authentication (MFA). For example, a data scientist might only have access to the training data, while a model deployment engineer would have access to the deployed model but not the raw data.

RBAC ensures that users only have access to resources necessary for their specific roles, minimizing the risk of unauthorized data access. MFA adds an extra layer of security by requiring multiple forms of authentication, such as a password and a one-time code from a mobile device, before granting access. Regularly reviewing and updating access permissions is crucial to maintain security as roles and responsibilities evolve within the organization.
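The role-based example above can be sketched as a small permission table. The role names, the `Permission` values, and `is_allowed` are hypothetical. A real deployment would usually rely on the access-control features of its identity provider or cloud platform rather than hand-rolled code, but the deny-by-default logic is the same.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_TRAINING_DATA = auto()
    WRITE_TRAINING_DATA = auto()
    READ_MODEL = auto()
    DEPLOY_MODEL = auto()


# Hypothetical roles: data scientists work with training data,
# deployment engineers with the deployed model only.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "data_scientist": frozenset(
        {Permission.READ_TRAINING_DATA, Permission.WRITE_TRAINING_DATA}
    ),
    "deployment_engineer": frozenset({Permission.READ_MODEL, Permission.DEPLOY_MODEL}),
}


def is_allowed(user_roles: set[str], permission: Permission) -> bool:
    """Grant access only if one of the user's roles carries the permission.

    Unknown roles map to no permissions, so the check fails closed.
    """
    return any(
        permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in user_roles
    )


print(is_allowed({"data_scientist"}, Permission.READ_TRAINING_DATA))       # True
print(is_allowed({"deployment_engineer"}, Permission.READ_TRAINING_DATA))  # False
```

MFA operates earlier, when the user authenticates. RBAC then decides what that authenticated user may do.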

Intrusion Detection and Prevention Systems in AI Security

Intrusion Detection and Prevention Systems (IDPS) play a critical role in identifying and mitigating security threats targeting AI systems. These systems monitor network traffic and system activity for suspicious patterns, alerting security personnel to potential breaches. For AI-specific threats, IDPS can be customized to detect anomalies in model behavior, such as unexpected changes in model accuracy or prediction patterns, which could indicate a poisoning attack.

Furthermore, IDPS can be integrated with other security tools, such as Security Information and Event Management (SIEM) systems, to provide a comprehensive view of security events and facilitate faster incident response. Real-time threat detection and prevention capabilities are essential to minimize the impact of successful intrusions. For instance, a sudden spike in requests to a specific model endpoint could be flagged as suspicious, triggering an automated response like rate limiting or temporary system shutdown.
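The automated rate-limiting response described above can be sketched as a sliding-window counter per client. This is an illustrative Python class, not the API of any particular IDPS product, and the limits are hypothetical. The caller passes the current time explicitly (for example from `time.monotonic()`), which keeps the behavior easy to test.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history: dict[str, deque] = {}

    def allow(self, client_id: str, now: float) -> bool:
        """Record a request at time `now`; return False if it should be throttled."""
        history = self._history.setdefault(client_id, deque())
        # Drop timestamps that have slid out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False  # spike: throttle (a real system would also raise an alert)
        history.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
print([limiter.allow("client-a", t) for t in (0.0, 0.1, 0.2, 0.3, 1.05)])
# [True, True, True, False, True]
```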

Regular Security Audits of AI Models and Datasets

Regular security audits are essential for identifying and addressing vulnerabilities in AI systems and datasets. These audits should encompass both technical and non-technical aspects of security. Technical audits involve assessing the security of the underlying infrastructure, software, and algorithms. This might include penetration testing to identify vulnerabilities in the system, code reviews to detect security flaws in the AI model, and data validation to ensure the integrity and accuracy of the training data.

Non-technical audits focus on policies, procedures, and personnel. This includes reviewing access control policies, incident response plans, and employee training programs. Regular audits help ensure that security measures are effective and up-to-date, mitigating the risk of data breaches and other security incidents. A comprehensive audit report should include a detailed assessment of identified vulnerabilities, recommended remediation steps, and a timeline for implementing those steps.

Security Tools and Technologies for AI Data Protection

Several security tools and technologies are specifically designed to protect AI data. These include:

- Data Loss Prevention (DLP) tools: These tools monitor data movement and prevent sensitive information from leaving the organization’s control. They can be configured to identify and block the transfer of sensitive AI model parameters or training data.

- Data encryption: Encrypting data both in transit and at rest protects it from unauthorized access even if a breach occurs. This is crucial for protecting sensitive AI models and training datasets.

- Federated learning platforms: These platforms allow for collaborative model training without directly sharing sensitive data, enhancing privacy and security.

- AI-specific security monitoring tools: These tools are designed to detect anomalies and malicious activities specifically targeting AI systems. They can monitor model performance, data integrity, and access patterns to identify potential threats.

- Blockchain technologies: Blockchain can enhance data provenance and integrity, providing a secure and auditable record of data usage and access.
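Many federated learning systems use federated averaging (FedAvg) as their core aggregation step. Each client trains locally, and the server averages the returned model weights, weighting each client by how many examples it used. The sketch below shows only that averaging step, using plain Python lists; client training, transport, and the hospital example are hypothetical.

Shared weight updates can still leak information about local data. This is why production systems often add secure aggregation or differential privacy on top.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Combine client model weights, weighting each client by its example count.

    Only weight vectors and counts reach the server; the raw training data
    stays on each client.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_examples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n_examples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n_examples / total_examples
    return averaged


# Two hypothetical hospitals with different amounts of local data.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```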

AI Model Security

Protecting AI models is crucial for maintaining a competitive edge and ensuring the integrity of AI-driven systems. These models often represent significant investment in research, development, and data, and that embedded value makes them prime targets for malicious actors. This section explores the key challenges and effective strategies for securing AI models throughout their lifecycle. AI models face a range of threats, from unauthorized access and reverse engineering to sophisticated adversarial attacks.

The unique vulnerabilities inherent in AI models necessitate a multi-faceted approach to security, combining technical safeguards with robust organizational policies and procedures. This approach must consider the model’s entire lifecycle, from development and training to deployment and maintenance.

Reverse Engineering and Intellectual Property Theft

Protecting AI models from reverse engineering and intellectual property theft requires a proactive approach that integrates various security measures. Malicious actors may attempt to steal proprietary algorithms or training data to replicate a model’s functionality, undermining a company’s competitive advantage and potentially causing significant financial losses. Techniques like model obfuscation, watermarking, and the use of secure enclaves can significantly hinder these attempts.

Obfuscation techniques aim to make the model’s internal workings difficult to understand, while watermarking embeds imperceptible markers within the model to help identify theft. Secure enclaves provide isolated execution environments that protect the model from unauthorized access. For example, a financial institution might use model obfuscation and secure enclaves to protect its fraud detection model, preventing competitors from replicating its sophisticated algorithms.

Securing AI Models Against Adversarial Attacks

Adversarial attacks involve manipulating input data to cause an AI model to produce incorrect or unintended outputs. These attacks can be incredibly subtle, often imperceptible to humans, yet capable of causing significant damage. For example, a self-driving car might misinterpret a subtly altered stop sign, leading to a dangerous situation. Techniques to mitigate adversarial attacks include adversarial training, input validation, and robust model design.
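To show why small input changes can flip a prediction, the sketch below applies the fast gradient sign method (FGSM) to a hypothetical three-feature logistic-regression model. FGSM is a well-known way to generate adversarial examples. With so few features, the perturbation has to be large relative to the input. On images, the same idea spreads a tiny change across thousands of pixels, which is why it can be imperceptible.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(w: list[float], b: float, x: list[float]) -> float:
    """Linear score s = w . x + b; a positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: list[float], b: float, x: list[float]) -> int:
    return 1 if score(w, b, x) > 0 else 0


def fgsm(w: list[float], b: float, x: list[float], label: int, eps: float) -> list[float]:
    """Fast gradient sign method against a logistic-regression model.

    With y in {-1, +1} and logistic loss log(1 + exp(-y * s)), the gradient of
    the loss with respect to the input is -y * sigmoid(-y * s) * w. FGSM shifts
    each feature by eps in the direction of that gradient's sign, which
    increases the loss while changing no feature by more than eps.
    """
    y = 1 if label == 1 else -1
    s = score(w, b, x)
    grad = [-y * sigmoid(-y * s) * wi for wi in w]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]


# Hypothetical trained model and an input it classifies as class 1.
w, b = [2.0, -1.0, 0.5], 0.0
x = [0.5, 0.2, 0.1]
x_adv = fgsm(w, b, x, label=1, eps=0.3)
print(predict(w, b, x), predict(w, b, x_adv))  # 1 0
```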

Adversarial training involves exposing the model to adversarial examples during training, improving its robustness. Input validation ensures that incoming data conforms to expected formats and ranges, reducing the likelihood of successful attacks. Robust model design focuses on creating models that are inherently less susceptible to manipulation. A robust model might incorporate multiple layers of checks and balances to prevent a single point of failure from leading to a catastrophic outcome.
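Input validation can be as simple as checking each request against a schema of expected features and ranges before it reaches the model. The `FeatureSpec` class, the `validate_input` function, and the fraud-detection schema below are hypothetical.

This check rejects malformed, missing, or out-of-range inputs. A subtle in-range perturbation of the kind adversarial attacks rely on passes straight through, however, which is why validation is paired with adversarial training and robust model design.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    """Allowed range for one numeric input feature."""
    name: str
    low: float
    high: float


def validate_input(features: dict[str, object], schema: list[FeatureSpec]) -> list[str]:
    """Return a list of problems; an empty list means the input passed."""
    errors = []
    expected = {spec.name for spec in schema}
    for name in sorted(set(features) - expected):
        errors.append(f"unexpected feature: {name}")
    for spec in schema:
        if spec.name not in features:
            errors.append(f"missing feature: {spec.name}")
            continue
        value = features[spec.name]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            errors.append(f"{spec.name}: not a number")
        elif not math.isfinite(value):
            errors.append(f"{spec.name}: NaN or infinite")
        elif not spec.low <= value <= spec.high:
            errors.append(f"{spec.name}: {value} outside [{spec.low}, {spec.high}]")
    return errors


# Hypothetical schema for a fraud-detection model.
SCHEMA = [
    FeatureSpec("amount", 0.0, 1_000_000.0),
    FeatureSpec("hour_of_day", 0, 23),
]
print(validate_input({"amount": 250.0, "hour_of_day": 14}, SCHEMA))  # []
print(validate_input({"amount": float("nan"), "hour_of_day": 30, "debug": 1}, SCHEMA))
```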

Securing an AI Model Throughout its Lifecycle

The security of an AI model is not a one-time event but an ongoing process that spans its entire lifecycle. A comprehensive approach is essential, encompassing multiple stages and incorporating diverse security measures.

The Human Factor in AI Security

The security of AI systems is not solely dependent on technological safeguards; a significant portion rests on the shoulders of the humans who design, implement, and manage them. Human error remains a primary vulnerability, often surpassing technological weaknesses in its impact on data breaches and system compromise. Understanding these vulnerabilities and implementing robust training programs are crucial for mitigating the risks associated with the human factor in AI security. Human negligence and malicious intent are the two primary avenues through which people compromise AI data security.

These weaknesses can manifest in various ways, from simple oversights to deliberate attacks, all potentially leading to significant data breaches or system failures. Effective security awareness training is paramount to minimizing these risks.

Common Human Errors Compromising AI Data Security

Human mistakes, and occasionally deliberate misuse, can compromise AI data security. These range from simple lapses in password management and access control to more complex issues, such as mishandling sensitive data or failing to implement adequate security protocols. For example, an employee might inadvertently download malware onto a company device, compromising the security of the entire AI system.

Similarly, a lack of awareness about phishing attempts could lead to unauthorized access to sensitive data. Failure to properly secure data storage, leaving sensitive information exposed on unprotected servers or unsecured cloud storage, is another prevalent error. Finally, neglecting regular security audits and updates leaves the system vulnerable to known exploits.

Best Practices for Training Employees on AI Security Awareness

A comprehensive training program should encompass various aspects of AI security. This includes educating employees about the types of threats they might encounter, the potential consequences of security breaches, and the specific security protocols in place within the organization. The program should be interactive, engaging, and tailored to the specific roles and responsibilities of the employees. Regular refresher training sessions are essential to ensure that employees remain up-to-date on the latest threats and best practices.

Simulated phishing attacks and security awareness quizzes can measure how well the training has worked and reinforce learning. Furthermore, clear communication channels for reporting security incidents are crucial, enabling prompt response and mitigation.

Designing a Security Awareness Program for AI Personnel

A robust security awareness program for personnel involved in AI development and deployment should be structured around several key components. Firstly, a comprehensive risk assessment should identify the specific threats and vulnerabilities relevant to the organization’s AI systems. This assessment should inform the design and content of the training program, ensuring that it addresses the most pertinent risks. Secondly, the program should utilize a multi-faceted approach, incorporating various training methods such as online modules, workshops, and interactive simulations.

This will cater to different learning styles and ensure comprehensive knowledge acquisition. Thirdly, the program should be regularly updated to reflect the evolving threat landscape and advancements in AI security technologies. Finally, the effectiveness of the program should be continuously monitored and evaluated through regular assessments and feedback mechanisms. This iterative approach ensures the program remains relevant and effective in mitigating human-related security risks.

Future Trends in AI Data Security

The landscape of AI data security is constantly evolving, driven by the increasing sophistication of AI systems and the expansion of their applications across various sectors. Predicting future trends requires understanding the inherent vulnerabilities of current systems and anticipating how attackers will exploit them. This calls for a proactive approach to security that combines defensive measures with using AI’s own capabilities to strengthen its protection. The next few years are likely to bring a rise in both the number and the complexity of attacks targeting AI systems.

These attacks will not only aim to compromise sensitive data but also to manipulate AI models, leading to inaccurate predictions or biased outputs with potentially devastating consequences. The rise of quantum computing also poses a significant long-term threat, potentially rendering current cryptographic methods obsolete and requiring the development of new, quantum-resistant algorithms for protecting AI data.

Emerging Threats to AI Data Security

AI systems are increasingly becoming targets for sophisticated attacks. Model poisoning, where malicious data is introduced during training to corrupt the model’s output, is a growing concern. Adversarial attacks, involving subtly altering input data to cause misclassification, pose a significant threat to the reliability of AI systems in critical applications like autonomous driving and medical diagnosis. Data breaches targeting training datasets, exposing sensitive personal information or intellectual property, represent another major vulnerability.

Furthermore, the increasing interconnectedness of AI systems presents opportunities for attackers to exploit vulnerabilities in one system to gain access to others, creating cascading failures. For example, a compromised smart home device could serve as an entry point for a larger attack on a connected industrial control system.

The Role of Blockchain Technology in Enhancing AI Data Security

Blockchain technology, with its immutability and decentralized nature, offers a promising approach to several AI data security challenges. A blockchain can serve as a tamper-evident, auditable record of data access and modifications, enhancing transparency and accountability. Training data itself can stay in access-controlled storage while its cryptographic hashes are recorded on a distributed ledger. This can make unauthorized modification detectable and removes the single point of failure of a centrally held audit log.

Furthermore, blockchain-based identity management systems can improve the security and privacy of AI systems by providing verifiable credentials and access control mechanisms. For instance, a pharmaceutical company could use blockchain to securely store and share clinical trial data used to train AI models for drug discovery, ensuring data integrity and preventing unauthorized access.
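The auditable, tamper-evident record that blockchains provide rests on hash linking: each entry stores the hash of the previous one, so altering history breaks the chain. The sketch below shows only that mechanism, using Python’s `hashlib`. The event fields and dataset name are hypothetical.

This is not a blockchain, since it has no distribution or consensus. Anyone who can rewrite the whole list can recompute every hash. Replicating the ledger across independent parties is what closes that gap.

```python
import hashlib
import json

GENESIS = "0" * 64


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps({"prev_hash": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain: list[dict], event: dict) -> None:
    """Append an event whose hash commits to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev_hash": prev_hash, "event": event, "hash": _digest(prev_hash, event)})


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every link so that altering any recorded entry is detected."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical access events for a clinical-trial training set.
log: list[dict] = []
append_entry(log, {"user": "analyst-1", "action": "read", "dataset": "trial-42"})
append_entry(log, {"user": "pipeline", "action": "train", "dataset": "trial-42"})
print(verify_chain(log))  # True

log[0]["event"]["action"] = "delete"  # tamper with history
print(verify_chain(log))  # False
```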

AI-Driven Improvements to AI Data Security

The irony, and the opportunity, lies in leveraging AI itself to improve its own security. AI-powered threat detection systems can analyze vast amounts of data to identify anomalies and potential attacks in real-time. These systems can learn to recognize patterns indicative of malicious activity, enabling faster response times and more effective mitigation strategies. AI can also be used to automate security tasks, such as vulnerability scanning and patching, freeing up human resources to focus on more complex issues.

For example, an AI-powered system could monitor network traffic for suspicious activity, automatically blocking malicious connections and alerting security personnel to potential threats. Furthermore, AI can enhance data anonymization techniques, protecting sensitive information while still allowing for the training of effective AI models.

Final Recap

Securing AI systems requires a multi-faceted approach that encompasses robust technical safeguards, stringent data governance policies, and a highly trained workforce. The evolving landscape of AI threats demands constant vigilance. Even so, the strategies and techniques outlined in this guide provide a strong foundation for mitigating risks and building a resilient AI security posture. By understanding the vulnerabilities and implementing the appropriate safeguards, organizations can confidently leverage the power of AI while protecting sensitive data.

The future of AI depends on a proactive and informed approach to security.