Best practices to secure data from malicious AI applications are crucial in today’s rapidly evolving digital landscape. Malicious AI, leveraging sophisticated techniques like adversarial machine learning and deepfakes, poses a significant threat to sensitive information. Understanding these threats and implementing robust security measures is no longer optional; it’s a necessity for organizations of all sizes. This guide delves into the essential strategies and technologies needed to protect your data from the growing menace of malicious AI applications.

From designing multi-layered security architectures and implementing robust data encryption to leveraging AI-powered security solutions and establishing comprehensive data governance frameworks, we’ll explore a range of practical steps to safeguard your valuable assets. We’ll also cover critical aspects like incident response planning, employee training, and regular security audits—all vital components of a comprehensive data security strategy in the age of malicious AI.

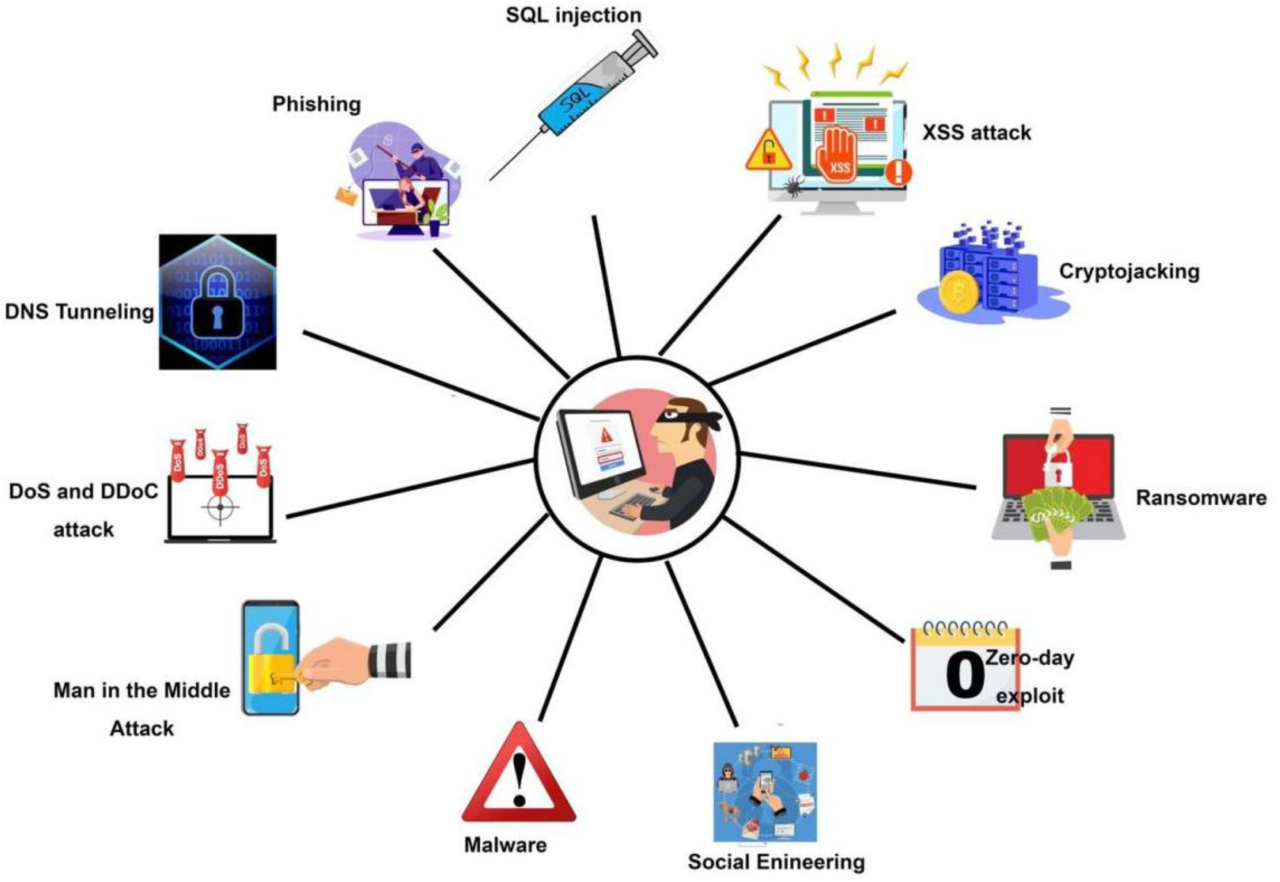

Identifying Potential Threats from Malicious AI

Malicious AI applications represent a growing threat to data security, leveraging sophisticated techniques to bypass traditional defenses. These applications exploit vulnerabilities in various systems and employ adversarial methods to manipulate data, resulting in significant breaches and financial losses. Understanding the common attack vectors and vulnerabilities is crucial for implementing effective countermeasures.

The rise of malicious AI applications necessitates a comprehensive understanding of the threats they pose. These applications are not simply using existing vulnerabilities; they are actively developing new attack vectors, making traditional security measures increasingly ineffective. This necessitates a proactive and adaptive approach to data security.

Attack Vectors and System Vulnerabilities

Malicious AI applications utilize a range of attack vectors to compromise data. These vectors often exploit vulnerabilities present in cloud-based systems, on-premise infrastructure, and even within the algorithms of machine learning models themselves. For example, a compromised cloud service account could provide access to sensitive data, while a vulnerability in an on-premise system’s security software could allow for unauthorized access and data exfiltration.

Furthermore, adversarial attacks targeting machine learning models can manipulate their outputs, leading to incorrect decisions that have significant consequences.

Adversarial Machine Learning Techniques

Adversarial machine learning focuses on manipulating input data to cause a machine learning model to produce incorrect or misleading outputs. This can involve adding carefully crafted noise to images used for facial recognition, causing the system to misidentify individuals. Similarly, subtle changes to input data in a loan application processing system could potentially manipulate the model’s risk assessment, leading to fraudulent approvals.

These attacks often exploit vulnerabilities in the model’s training data or its underlying architecture. For instance, a model trained on biased data will be more susceptible to adversarial attacks designed to exploit those biases.
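
To make the idea of "carefully crafted noise" concrete, here is a minimal sketch of one well-known technique, the fast gradient sign method (FGSM), applied to a toy logistic-regression classifier. The weights, bias, input, and `epsilon` are hypothetical values chosen for illustration and do not describe any particular production system.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of the positive class under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (illustrative values only).
weights = [2.0, -1.5, 0.5]
bias = -0.2
x = [0.6, 0.2, 0.4]  # the model scores this input above 0.5
x_adv = fgsm_perturb(weights, bias, x, label=1, epsilon=0.3)

print(round(predict(weights, bias, x), 3), round(predict(weights, bias, x_adv), 3))
```

In this toy case a bounded change of at most 0.3 per feature is enough to push the prediction across the 0.5 decision threshold, which is the kind of manipulation the loan-application example describes.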

The Role of Deepfakes and Synthetic Media

Deepfakes and other forms of synthetic media present a significant threat to data security and integrity. These technologies can be used to create highly realistic but fabricated videos and audio recordings, which can then be used for identity theft, blackmail, or spreading disinformation. The creation of convincing deepfakes requires significant computational resources and expertise, but the accessibility of such technologies is rapidly increasing, lowering the barrier to entry for malicious actors.

In publicly reported fraud cases, attackers have used deepfake audio and video to impersonate senior executives and authorize fraudulent wire transfers. Because such fakes can be difficult to detect, robust authentication and verification mechanisms, such as confirming payment requests through a separate, trusted channel, are essential.

Data Security Best Practices

Protecting data from malicious AI applications requires a proactive and multi-faceted approach. This goes beyond traditional cybersecurity measures, demanding a deep understanding of AI’s capabilities and potential vulnerabilities. A robust strategy involves implementing preventative controls, establishing robust detection mechanisms, and maintaining a continuous improvement cycle. This section details best practices for achieving this.

Multi-Layered Security Architecture

A multi-layered security architecture is crucial for mitigating risks associated with malicious AI. This approach involves implementing security controls at various levels, from the network perimeter to the application layer and the data itself. Together, the layers provide defense in depth: even if one layer is compromised, the others remain in place to limit the damage. This architecture can include firewalls, intrusion detection systems, data loss prevention (DLP) tools, and access control mechanisms, all tailored to the specific threats posed by AI-driven attacks.

For example, a company might use a combination of network segmentation, application-level security, and robust data encryption to protect sensitive data used in machine learning models. If one layer, such as the firewall, is breached, the other layers act as additional safeguards to prevent complete system compromise.

Essential Security Controls for Data Protection

The following table outlines essential security controls for protecting data from AI-driven attacks. These controls should be implemented and regularly reviewed to ensure effectiveness.

| Control Type | Description | Implementation | Effectiveness |
|---|---|---|---|
| Access Control | Restricting access to sensitive data based on the principle of least privilege. | Implementing role-based access control (RBAC) and multi-factor authentication (MFA). | High; significantly reduces the risk of unauthorized access. |
| Data Encryption | Protecting data at rest and in transit using strong encryption algorithms. | Implementing encryption at the database, application, and network levels; using AES-256 or similar. | High; renders data unusable without the decryption key. |
| Intrusion Detection/Prevention Systems (IDS/IPS) | Monitoring network traffic and system activity for malicious behavior. | Deploying network-based and host-based IDS/IPS solutions; regularly updating signatures. | Medium to High; detects and prevents many attacks, but sophisticated attacks may evade detection. |
| Regular Security Audits | Periodically assessing the security posture of AI systems and data. | Conducting vulnerability scans, penetration testing, and code reviews. | High; identifies vulnerabilities and weaknesses before they can be exploited. |
| Data Loss Prevention (DLP) | Preventing sensitive data from leaving the organization’s control. | Implementing DLP tools to monitor data movement and block unauthorized transfers. | High; prevents data exfiltration and data breaches. |
| Anomaly Detection | Identifying unusual patterns or behaviors in AI systems and data. | Implementing machine learning-based anomaly detection systems to identify deviations from normal behavior. | Medium to High; effectiveness depends on the sophistication of the anomaly detection system. |
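
As an illustration of the access-control row, the following sketch shows a deny-by-default permission check that combines role-based access control with an MFA requirement. The role names, permission strings, and the `User` type are hypothetical; a real deployment would typically load the policy from an identity provider or policy engine rather than hard-coding it.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping, hard-coded only for illustration.
ROLE_PERMISSIONS = {
    "analyst": {"dataset:read"},
    "ml_engineer": {"dataset:read", "model:train"},
    "admin": {"dataset:read", "dataset:write", "model:train", "model:deploy"},
}

@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)
    mfa_verified: bool = False

def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: allow only if MFA passed and some role grants the permission."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)

analyst = User("dana", roles={"analyst"}, mfa_verified=True)
print(is_allowed(analyst, "dataset:read"))   # analyst role grants read access
print(is_allowed(analyst, "model:deploy"))   # not granted to analysts
```

Keeping each role's permission set as small as its job requires is what the principle of least privilege amounts to in practice.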

Data Encryption and Key Management

Data encryption is paramount for protecting data used in AI applications. Strong encryption algorithms, such as AES-256, should be used to protect data at rest and in transit. Key management is equally critical; keys must be securely generated, stored, and managed to prevent unauthorized access. This includes using hardware security modules (HSMs) for secure key storage and employing robust key rotation policies.

For example, a company training a machine learning model on sensitive customer data would encrypt the data both during storage and transmission, using strong encryption and regularly rotating encryption keys to mitigate the risk of long-term compromise. Failure to properly manage keys can negate the security benefits of encryption.
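
The rotation policy described above can be sketched as simple bookkeeping around versioned keys. This is an assumption-laden illustration: the `KeyRing` class and the 90-day interval are hypothetical, keys are held in memory only for demonstration (the text recommends an HSM for real storage), and the actual AES-256 encryption would be performed by a vetted cryptographic library rather than by this code.

```python
import secrets
from datetime import datetime, timedelta, timezone

class KeyRing:
    """Tracks versioned 256-bit keys and rotates them after a maximum age."""

    def __init__(self, max_age: timedelta):
        self.max_age = max_age
        self.keys = {}            # version -> (key bytes, creation time)
        self.current_version = 0

    def rotate(self, now: datetime) -> int:
        """Generate a fresh random key and make it the current version."""
        self.current_version += 1
        self.keys[self.current_version] = (secrets.token_bytes(32), now)
        return self.current_version

    def current_key(self, now: datetime):
        """Return (version, key), rotating first if no key exists or it is too old."""
        if (self.current_version == 0
                or now - self.keys[self.current_version][1] >= self.max_age):
            self.rotate(now)
        return self.current_version, self.keys[self.current_version][0]

    def key_for(self, version: int) -> bytes:
        """Older versions stay available so existing ciphertext can be decrypted."""
        return self.keys[version][0]

ring = KeyRing(max_age=timedelta(days=90))  # hypothetical rotation interval
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
version, key = ring.current_key(start)
print(version, len(key))
```

Storing the key version alongside each ciphertext lets old data be decrypted, and gradually re-encrypted, after a rotation.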

Anomaly Detection Methods

Detecting anomalies and unusual patterns indicative of malicious AI activity involves employing various techniques. These include statistical methods to identify outliers in data, machine learning algorithms trained to detect deviations from normal system behavior, and security information and event management (SIEM) systems to correlate security events and identify suspicious patterns. For instance, a sudden spike in unusual queries to a database, or a significant increase in the error rate of a machine learning model, could signal a malicious AI attack.

These anomalies can be detected by monitoring key performance indicators (KPIs) and setting thresholds for alerts. Prompt investigation of any detected anomaly is crucial for effective mitigation.
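
A threshold on a monitored KPI can be as simple as a z-score test against recent history. The sketch below assumes a hypothetical queries-per-minute metric and a threshold of three standard deviations; both would need tuning against real data.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Flag value if it lies more than `threshold` sample standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical baseline of database queries per minute.
queries_per_minute = [102, 98, 105, 97, 101, 99, 103, 100]

print(is_anomalous(queries_per_minute, 104))  # within normal variation
print(is_anomalous(queries_per_minute, 450))  # sudden spike
```

A fixed statistical threshold like this is the simplest of the methods mentioned above; learned models and SIEM correlation build on the same idea of comparing current behavior with a baseline.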

AI-Powered Security Measures

The escalating sophistication of malicious AI applications necessitates a robust and adaptive defense strategy. AI itself offers a powerful toolkit for enhancing data security, providing a proactive and intelligent countermeasure against evolving threats. By leveraging machine learning and advanced algorithms, organizations can significantly improve their ability to detect, respond to, and mitigate attacks. This section explores the various ways AI enhances data security in the face of malicious AI.

AI’s role in bolstering data security against malicious AI applications is multifaceted. It allows for the creation of security systems that can learn and adapt to new attack vectors, surpassing the limitations of traditional rule-based systems. This dynamic approach is crucial in the constantly shifting landscape of cyber threats, where malicious actors continuously refine their techniques.

Machine Learning in Threat Detection and Response

Machine learning algorithms, a core component of AI, are instrumental in identifying and responding to threats. These algorithms analyze vast datasets of network traffic, system logs, and other security-related information to identify patterns indicative of malicious activity. For example, anomaly detection algorithms can flag unusual network behavior, such as sudden spikes in data transfer or access attempts from unusual geographic locations, which might signal an intrusion attempt.

Similarly, supervised learning models, trained on datasets of known attacks, can classify new events as malicious or benign with high accuracy. Beyond detection, machine learning enables automated responses, such as blocking malicious IP addresses or isolating infected systems, significantly reducing the impact of successful attacks. This automation allows security teams to focus on more complex issues, increasing overall efficiency.

AI-Driven Intrusion Detection Systems

AI-driven intrusion detection systems (IDS) represent a significant advancement in cybersecurity. Unlike traditional signature-based IDS that rely on predefined patterns of malicious activity, AI-powered IDS utilize machine learning to detect novel and zero-day attacks. These systems analyze network traffic in real-time, identifying subtle anomalies that might escape detection by traditional methods. For instance, an AI-driven IDS might detect unusual patterns in data packets or identify subtle variations in network communication that indicate a sophisticated attack.

Furthermore, these systems can adapt to evolving attack techniques, automatically updating their detection models based on new data and learning from past incidents. This adaptive capability is critical in mitigating the effectiveness of advanced persistent threats (APTs) and other sophisticated attacks. The implementation typically involves deploying AI algorithms on network sensors or security information and event management (SIEM) systems to monitor and analyze network traffic.

Comparison of AI-Based Security Solutions

Various AI-based security solutions exist, each with its strengths and weaknesses. For example, some solutions focus primarily on anomaly detection, identifying deviations from established baselines, while others employ supervised learning to classify malicious activities based on known attack signatures. Some solutions leverage deep learning techniques for enhanced accuracy and adaptability. The choice of the most appropriate solution depends on specific organizational needs and resources.

Factors to consider include the size and complexity of the network, the level of threat sophistication, and the availability of skilled personnel to manage and maintain the system. A robust solution might involve a combination of different AI-based techniques, offering a layered approach to data protection. For instance, an organization might use anomaly detection for initial threat identification, followed by supervised learning for classification and response.

This layered approach increases the overall effectiveness of the security system.

Data Governance and Compliance

Protecting data from malicious AI requires a robust data governance framework that proactively addresses potential threats. This framework must integrate with existing security protocols and adapt to the evolving landscape of AI-driven attacks. Effective data governance is not merely a technical solution; it’s a strategic imperative that ensures compliance with regulations and protects organizational reputation.

Data governance in the context of malicious AI necessitates a multi-faceted approach, encompassing policy development, risk assessment, and ongoing monitoring. This framework should define roles and responsibilities, data access controls, and procedures for incident response. Crucially, it should specify how data is collected, stored, processed, and ultimately disposed of, with specific attention paid to mitigating risks associated with AI manipulation or misuse.

Relevant Data Protection Regulations and Standards

Numerous regulations and standards provide a baseline for data protection, particularly relevant when considering the potential for malicious AI to exploit vulnerabilities. Compliance with these frameworks is crucial for organizations handling sensitive data. Failure to comply can lead to significant financial penalties and reputational damage.

Examples include the General Data Protection Regulation (GDPR) in the European Union, which grants individuals significant control over their personal data, and the California Consumer Privacy Act (CCPA) in the United States, which provides California residents with similar rights. Other relevant standards include ISO 27001 (information security management) and the NIST Cybersecurity Framework, which offer comprehensive guidelines for managing data security risks, including those posed by AI.

Data Anonymization and Pseudonymization Techniques

Data anonymization and pseudonymization are crucial techniques for mitigating the risks associated with malicious AI. These methods reduce the identifiability of individuals within datasets, making it significantly harder for malicious actors to target specific individuals or groups through AI-powered attacks.

Anonymization involves removing or altering identifying information from datasets to the point where individuals cannot be re-identified. Pseudonymization, on the other hand, replaces identifying information with pseudonyms, allowing data to be linked to individuals only through a secure, controlled process. The effectiveness of these techniques depends on the thoroughness of the anonymization or pseudonymization process and the robustness of the security measures implemented to protect the mapping between pseudonyms and real identities.

For example, a hospital might pseudonymize patient records for research purposes, replacing names and other identifying information with unique codes. However, strong access controls would be needed to prevent unauthorized access to the mapping between codes and actual patient identities.
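
One common way to generate such codes is keyed hashing, sketched below with HMAC-SHA256. This is an illustrative assumption rather than the only approach: the field names and record are hypothetical, and here the secret key plays the role of the protected mapping, since anyone holding it can recompute which pseudonym belongs to which identifier.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym; it cannot be recomputed without the key."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymize_record(record: dict, secret_key: bytes,
                        id_fields=("name", "patient_id")) -> dict:
    """Replace direct identifiers and leave the remaining fields unchanged."""
    return {
        key: pseudonymize(str(value), secret_key) if key in id_fields else value
        for key, value in record.items()
    }

# Hypothetical key and record for illustration; a real key belongs in a key store.
demo_key = b"k" * 32
patient = {"name": "Jane Doe", "patient_id": "P-1001", "diagnosis": "asthma"}
print(pseudonymize_record(patient, demo_key))
```

Because the same identifier always maps to the same pseudonym under a given key, records can still be linked for research. Note that indirect identifiers left in the data, such as rare diagnoses or dates, may still allow re-identification.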

Data Loss Prevention (DLP) Tools in Securing Data from Malicious AI

Data Loss Prevention (DLP) tools play a vital role in securing data from malicious AI applications. These tools monitor data movement within and outside an organization’s network, identifying and preventing sensitive data from being exfiltrated or misused.

DLP tools can be configured to detect various types of data breaches, including those involving AI-powered attacks. For example, a DLP system could detect and block attempts to upload sensitive data to cloud storage services or transmit it via email or other communication channels. Furthermore, advanced DLP solutions incorporate machine learning algorithms to identify patterns and anomalies that might indicate malicious activity, enhancing their ability to detect and prevent sophisticated AI-driven attacks.
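
At its core, content inspection in a DLP tool combines pattern matching with validation to reduce false positives. The following sketch is a simplified, hypothetical example that looks for payment card numbers in outbound text and confirms candidates with the Luhn checksum; commercial DLP products use far richer detectors and policies.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,19}\b")

def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum the digits."""
    total = 0
    for index, char in enumerate(reversed(number)):
        digit = int(char)
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0

def find_card_numbers(text: str):
    """Return digit strings that look like card numbers and pass the Luhn check."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        candidate = re.sub(r"[ -]", "", match.group())
        if 13 <= len(candidate) <= 19 and luhn_valid(candidate):
            hits.append(candidate)
    return hits

def allow_outbound(text: str) -> bool:
    """Block the transfer if any likely card number is present."""
    return not find_card_numbers(text)

print(allow_outbound("Invoice attached, thanks."))
print(allow_outbound("Card: 4111 1111 1111 1111"))  # well-known test number
```

The checksum step matters: without it, any long run of digits such as an order number would trigger a block.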

Effective DLP implementation necessitates a comprehensive understanding of the organization’s data assets, potential threats, and the capabilities of available DLP tools. Regular updates and adjustments are crucial to keep pace with evolving AI-driven attack techniques.

Incident Response and Recovery

A robust incident response plan is crucial for organizations facing data breaches instigated by malicious AI applications. These attacks often differ from traditional cyberattacks, requiring a specialized approach that accounts for the adaptive and autonomous nature of AI-driven threats. A well-defined plan minimizes damage, accelerates recovery, and provides valuable lessons for future security enhancements.

The effectiveness of incident response hinges on a rapid and coordinated effort to contain, eradicate, and recover from an AI-driven attack. This involves isolating affected systems, identifying the attack vector, removing malicious code, restoring data from backups, and implementing preventative measures to avoid future compromises. The speed and efficiency of this process directly impact the overall cost and reputational damage associated with the breach.

Incident Response Plan Design

A comprehensive incident response plan should clearly define roles and responsibilities, establish communication protocols, and outline specific procedures for various attack scenarios. This plan should be regularly tested and updated to reflect evolving threats and technological advancements. Key components include a pre-defined escalation path, detailed procedures for data recovery, and a communication strategy for stakeholders including affected individuals, regulatory bodies, and the media.

Regular simulations and tabletop exercises help refine the plan and ensure its efficacy under pressure. For example, a simulation might involve a scenario where a malicious AI compromises a customer database, forcing the team to practice containment, eradication, and recovery procedures.

Containing, Eradicating, and Recovering from an AI-Driven Attack

Containment involves isolating affected systems to prevent further spread of the malicious AI. This might involve disconnecting affected servers from the network, shutting down specific applications, or implementing network segmentation. Eradication focuses on removing the malicious AI and any compromised data. This may require specialized malware removal tools, system reimaging, and potentially a complete system rebuild. Recovery involves restoring data from backups, verifying system integrity, and restoring normal operations.

A layered approach, involving multiple backup strategies and secure data storage locations, is crucial for efficient recovery. For instance, a company might employ a 3-2-1 backup strategy (three copies of data on two different media, with one copy offsite).
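
The 3-2-1 rule is easy to verify automatically against a backup inventory. The sketch below uses a hypothetical `BackupCopy` record and inventory; how the inventory is populated is left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BackupCopy:
    location: str
    media: str      # e.g. "disk", "tape", "object-storage"
    offsite: bool

def satisfies_3_2_1(copies) -> bool:
    """At least three copies, on at least two media types, with at least one offsite."""
    return (
        len(copies) >= 3
        and len({copy.media for copy in copies}) >= 2
        and any(copy.offsite for copy in copies)
    )

# Hypothetical inventory for illustration.
inventory = [
    BackupCopy("primary-datacenter", "disk", offsite=False),
    BackupCopy("primary-datacenter", "tape", offsite=False),
    BackupCopy("cloud-region-b", "object-storage", offsite=True),
]
print(satisfies_3_2_1(inventory))
```

A check like this confirms only that the copies exist; periodic restore tests are still needed to confirm that they are usable.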

Post-Incident Analysis and Lessons Learned

Post-incident analysis is critical for identifying vulnerabilities and improving future security measures. This involves a thorough review of the incident timeline, the attack vector, the impact of the breach, and the effectiveness of the response. The analysis should pinpoint areas for improvement in security protocols, incident response procedures, and employee training. Lessons learned should be documented and incorporated into updated security policies and procedures.

This iterative process of learning and improvement is vital for building a more resilient security posture against future AI-driven attacks. For example, analyzing a past breach might reveal a weakness in the AI model’s input validation, leading to the implementation of stricter input sanitization techniques.

Reporting Data Breaches to Relevant Authorities

Prompt and accurate reporting is paramount in mitigating the impact of a data breach. Failure to comply with reporting regulations can result in significant penalties. The steps involved in reporting a data breach are as follows:

- Identify the breach: Determine the scope and nature of the compromised data.

- Gather evidence: Collect all relevant information about the attack, including logs, timestamps, and affected systems.

- Notify affected individuals: Inform individuals whose data has been compromised, providing them with necessary information and support.

- Contact relevant authorities: Report the breach to the appropriate regulatory bodies, such as the relevant data protection authority (e.g., the ICO in the UK) or, in the US, the applicable state attorneys general and, where its rules apply, the FTC.

- Document the incident: Maintain detailed records of the incident, including the response actions taken and lessons learned.

- Cooperate with investigations: Fully cooperate with any investigations conducted by law enforcement or regulatory bodies.

Employee Training and Awareness

A robust employee training program is crucial for mitigating the risks associated with malicious AI applications. Educating employees about the evolving threat landscape and implementing effective security practices is paramount to safeguarding sensitive data. A comprehensive program should cover various aspects of data security, focusing on practical applications and real-world scenarios to enhance employee understanding and engagement.

Employee awareness training should go beyond simple theoretical explanations. It needs to equip employees with the knowledge and skills to identify, respond to, and prevent malicious AI-driven attacks. This includes understanding the techniques used by malicious actors and the importance of adhering to established security protocols. Regular refresher training is essential to keep employees updated on emerging threats and best practices.

Understanding Malicious AI Tactics

Malicious actors leverage AI for sophisticated attacks, including advanced phishing scams and highly targeted social engineering campaigns. Phishing emails, for instance, can now mimic legitimate communications with remarkable accuracy, making them harder to detect. Social engineering attacks might involve impersonating trusted individuals or organizations to manipulate employees into divulging sensitive information or granting access to systems. Training should cover examples of these attacks, highlighting the subtle cues that can reveal their malicious nature, such as unexpected email addresses, grammatical errors, or suspicious links.

For example, a phishing email might appear to be from a company’s IT department, requesting password resets under the guise of system maintenance. Another example could be a social engineering tactic where an attacker poses as a senior executive, requesting confidential data urgently.

Password Security and Multi-Factor Authentication

Strong passwords and multi-factor authentication (MFA) are fundamental to data security. Employees should be trained to create long, unique passwords for each account, avoiding easily guessable combinations and never reusing a password across platforms. The training should emphasize using a password manager to store and manage credentials securely, and changing a password promptly whenever compromise is suspected; current NIST guidance favors this over forced periodic changes. MFA adds an extra layer of security by requiring multiple forms of verification, such as a password and a one-time code generated on or sent to a mobile device.

This makes it substantially harder for attackers to gain access, even if they obtain a password through phishing or other means.
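
To show how a one-time code works as a second factor, here is a minimal sketch of the time-based one-time password (TOTP) algorithm from RFC 6238, built on HMAC-SHA1 with a 30-second step. The `verify_login` helper is a hypothetical wrapper; production systems should rely on a maintained library and also handle clock drift, rate limiting, and secure secret storage.

```python
import hashlib
import hmac
import struct

def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1 variant) for the given time."""
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def verify_login(password_ok: bool, submitted_code: str, secret: bytes, unix_time: int) -> bool:
    """Both factors must pass; the code comparison is constant-time."""
    return password_ok and hmac.compare_digest(submitted_code, totp(secret, unix_time))

# Shared secret from the RFC 6238 test vectors (never use it outside of tests).
rfc_secret = b"12345678901234567890"
print(totp(rfc_secret, 59, digits=8))  # RFC 6238 lists 94287082 for this input
```

Because the code depends on both the shared secret and the current time window, a stolen password alone is not enough to pass `verify_login`.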

Key Security Awareness Messages

A concise list of key security awareness messages should be integrated into the training materials. These messages should be clear, concise, and easily understood by all employees. They should also be reinforced regularly through various communication channels. For example:

- Never click on suspicious links or attachments in emails.

- Report any suspicious activity immediately to the IT department.

- Use strong and unique passwords for all accounts.

- Enable multi-factor authentication whenever possible.

- Be cautious about sharing personal or company information online.

- Understand the company’s data security policies and procedures.

- Regularly update software and operating systems.

- Be aware of social engineering tactics and phishing scams.

These messages serve as a constant reminder of best practices and reinforce the importance of employee vigilance in maintaining data security. Regular reinforcement through email campaigns, posters, and interactive modules helps to ensure that employees internalize these crucial security guidelines.

Regular Security Audits and Assessments

Regular security audits and assessments are crucial for maintaining the robust data protection necessary in the face of increasingly sophisticated malicious AI applications. These audits provide a systematic method for identifying vulnerabilities and weaknesses in your data security infrastructure, allowing for proactive mitigation before breaches occur. A comprehensive approach incorporates various techniques and tools to ensure a thorough evaluation of your systems and processes.

Proactive identification of vulnerabilities is paramount to preventing data compromise. Regular audits provide a snapshot of your current security posture, revealing gaps and weaknesses that malicious actors could exploit. This proactive approach is significantly more cost-effective than reacting to a data breach, which can lead to significant financial losses, reputational damage, and legal repercussions.

Penetration Testing and Vulnerability Scanning

Penetration testing simulates real-world attacks to identify exploitable vulnerabilities. This involves ethical hackers attempting to breach your systems using various methods, mirroring the tactics of malicious actors. Vulnerability scanning, on the other hand, uses automated tools to identify known security flaws in software, hardware, and configurations. The combination of both penetration testing and vulnerability scanning provides a comprehensive view of your security posture, highlighting both known and unknown vulnerabilities.

For example, a penetration test might reveal a weakness in a custom-built application, while a vulnerability scan could identify outdated software libraries with known exploits. The results from both are then used to prioritize remediation efforts.

Security Information and Event Management (SIEM) Systems

Security Information and Event Management (SIEM) systems collect and analyze security logs from various sources across your IT infrastructure. This centralized view allows for real-time monitoring of system activity, enabling the detection of suspicious behavior and potential security incidents. SIEM systems can correlate events from different sources, identifying patterns that might indicate a sophisticated attack. For instance, a SIEM system might detect unusual login attempts from unfamiliar geographic locations, combined with data exfiltration attempts, indicating a potential breach in progress.

This allows for immediate response and containment of the threat. Effective SIEM implementation requires careful configuration, data normalization, and ongoing tuning to optimize alert accuracy and minimize false positives.
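
The correlation described above, an unfamiliar-location login followed by a large outbound transfer, can be sketched as a small rule over normalized events. The `Event` fields, the 10-minute window, and the 100 MB threshold are hypothetical; real SIEM platforms express such rules in their own query or rule languages.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    timestamp: float     # seconds since epoch
    user: str
    kind: str            # "login" or "transfer"
    country: str = ""
    bytes_out: int = 0

def correlate(events, usual_countries, window=600, transfer_threshold=100_000_000):
    """Return users whose unfamiliar-country login is followed by a large transfer within `window` seconds."""
    alerts = []
    suspicious_logins = {}  # user -> time of latest unfamiliar-country login
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.kind == "login":
            if event.country not in usual_countries.get(event.user, set()):
                suspicious_logins[event.user] = event.timestamp
        elif event.kind == "transfer" and event.bytes_out >= transfer_threshold:
            seen_at = suspicious_logins.get(event.user)
            if seen_at is not None and event.timestamp - seen_at <= window:
                alerts.append(event.user)
    return alerts

# Hypothetical baseline and event stream.
usual = {"alice": {"US"}, "bob": {"US"}}
stream = [
    Event(0, "alice", "login", country="US"),
    Event(100, "alice", "transfer", bytes_out=200_000_000),
    Event(0, "bob", "login", country="XX"),
    Event(300, "bob", "transfer", bytes_out=200_000_000),
]
print(correlate(stream, usual))
```

Neither event is conclusive on its own; it is the combination within a short window that raises the alert, which is also what keeps false positives manageable.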

Interpreting Audit Results and Improving Data Protection

The results of security audits and assessments should be meticulously analyzed to identify the most critical vulnerabilities and prioritize remediation efforts. This analysis often involves assigning risk scores to vulnerabilities based on their severity and likelihood of exploitation. A risk-based approach allows for the efficient allocation of resources to address the most significant threats first. For example, a high-severity vulnerability with a high likelihood of exploitation would be prioritized over a low-severity vulnerability with a low likelihood.
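
A simple version of this risk-based prioritization multiplies severity by likelihood and sorts the findings. The 1-to-5 scales and the example findings below are hypothetical; many organizations use established schemes such as CVSS as an input to the severity rating.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    name: str
    severity: int     # 1 (low) to 5 (critical)
    likelihood: int   # 1 (unlikely) to 5 (almost certain)

    @property
    def risk(self) -> int:
        return self.severity * self.likelihood

def prioritize(findings):
    """Order findings from highest to lowest risk score."""
    return sorted(findings, key=lambda finding: finding.risk, reverse=True)

# Hypothetical audit results.
findings = [
    Finding("Verbose error pages", severity=2, likelihood=2),
    Finding("Unpatched library with public exploit", severity=5, likelihood=4),
    Finding("Weak input validation on model endpoint", severity=4, likelihood=3),
]
for finding in prioritize(findings):
    print(finding.risk, finding.name)
```

The resulting order gives the remediation queue: the highest scores are addressed first, and scores are revisited as fixes land or new information changes the likelihood estimate.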

The remediation process should involve patching software, updating configurations, implementing security controls, and retraining employees on security best practices. Post-remediation, follow-up audits are essential to verify the effectiveness of the implemented changes and ensure ongoing data protection.

Summary

Securing data from malicious AI applications requires a proactive, multi-faceted approach. By combining robust security architectures, AI-powered defenses, strong data governance, comprehensive employee training, and regular security assessments, organizations can significantly reduce their vulnerability to AI-driven attacks. Remember, the landscape of AI threats is constantly shifting, demanding continuous vigilance and adaptation. Staying informed and implementing the best practices outlined here is crucial to maintaining the integrity and confidentiality of your data in this increasingly complex threat environment.