AI’s ability to generate diverse musical styles and genres is rapidly evolving, pushing the boundaries of musical creativity. This exploration delves into the technical underpinnings, creative control offered to users, and the resulting sonic landscapes produced by these increasingly sophisticated algorithms. We’ll examine how AI mimics existing genres, its potential to create entirely new ones, and the ethical considerations that arise from this technological advancement.

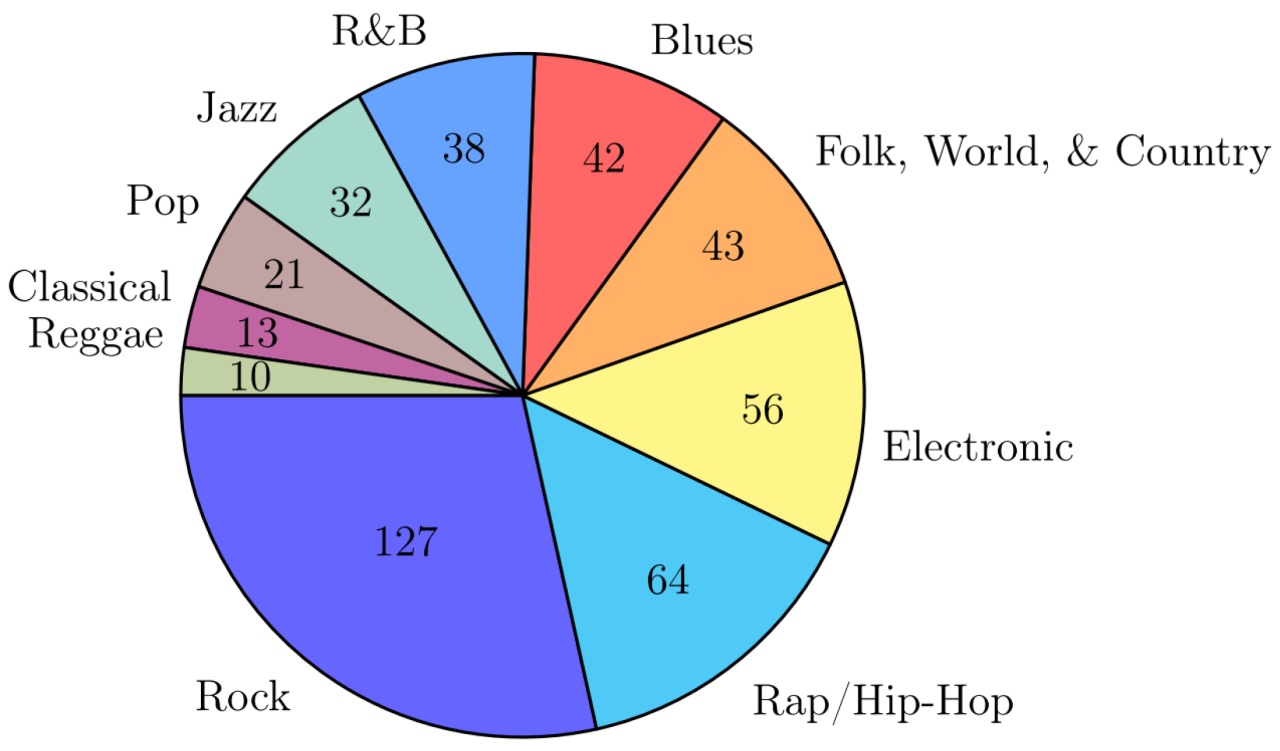

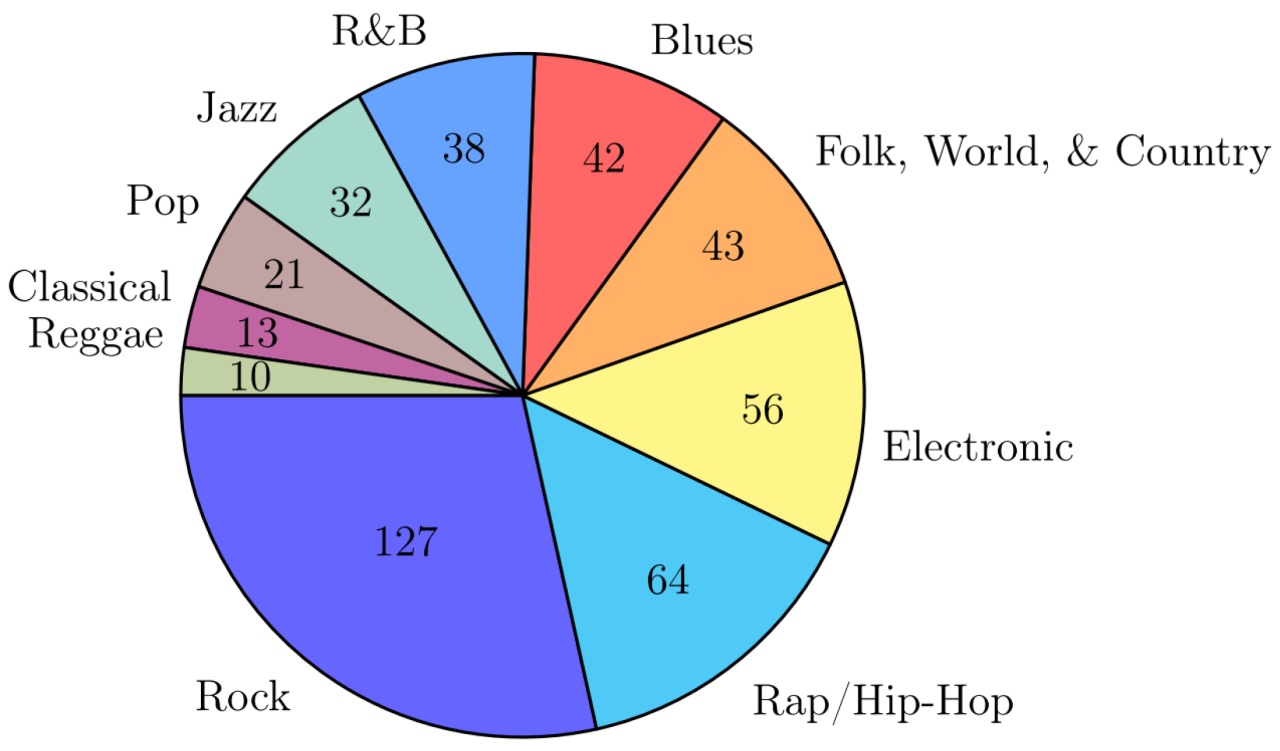

From replicating the intricate rhythms of Afrobeat to the melancholic melodies of blues, AI demonstrates a remarkable capacity for stylistic versatility. However, the fidelity and authenticity of AI-generated music vary across genres, highlighting both the successes and limitations of current technology. Understanding the parameters influencing style generation—training data, algorithm design, and user interaction—is crucial to grasping the full potential and limitations of AI in music composition.

AI’s Musical Style Range

AI music generation tools have rapidly expanded their capabilities, demonstrating a remarkable breadth of stylistic expression across musical genres. These advances are driven by sophisticated algorithms and increasingly large datasets of musical examples, which allow models to produce pieces that convincingly emulate diverse musical traditions and aesthetics. This range presents both exciting opportunities and real challenges for the future of music composition and production, and it shows how effectively machine learning can absorb stylistic patterns from examples.

Current AI models can convincingly generate music resembling classical compositions, jazz improvisations, pop songs, electronic dance music, and even folk music from diverse cultural backgrounds. The specific styles achievable depend on the training data used to develop the model; models trained on large, diverse datasets tend to exhibit greater stylistic versatility. However, even specialized models trained on a single genre can demonstrate impressive stylistic nuances within that genre, such as replicating the unique characteristics of a particular composer or musical period.

AI-Generated Music Fidelity Across Genres

The fidelity of AI-generated music varies significantly across different genres. In genres with relatively simple harmonic structures and predictable rhythmic patterns, such as some forms of electronic music, AI models often achieve high fidelity, producing outputs that are difficult to distinguish from human-created music. However, in more complex genres, such as classical music or jazz, where improvisation and nuanced emotional expression play crucial roles, the fidelity of AI-generated music may be lower.

This is partly due to the limitations of current AI algorithms in capturing the subtleties of human musical expression and the complexity of musical structures in these genres. Furthermore, biases in the training data can lead to AI models favoring certain styles or musical elements over others, resulting in a less diverse or representative output. For instance, a model trained primarily on Western classical music might struggle to generate authentic-sounding music from non-Western traditions.

Technical Approaches to Achieving Stylistic Diversity

Several technical approaches contribute to the stylistic diversity of AI-generated music. One crucial approach is the use of large and diverse datasets for training. These datasets can include millions of audio examples representing a wide range of musical styles, instruments, and cultural backgrounds. The more diverse the training data, the more versatile the resulting AI model is likely to be.
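One rough way to quantify how diverse a training set is, assuming each example carries a single genre label (an assumption, since real datasets are often multi-label or unlabeled), is the normalized Shannon entropy of those labels. The sketch below is illustrative only; `genre_diversity` is a hypothetical helper, not part of any music-generation library.

```python
import math
from collections import Counter


def genre_diversity(labels: list[str]) -> float:
    """Normalized Shannon entropy of genre labels (hypothetical helper).

    Returns 0.0 when every example has the same genre and 1.0 when the
    genres that appear are perfectly evenly represented.
    """
    counts = Counter(labels)
    if len(counts) <= 1:
        return 0.0
    total = len(labels)
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    # Divide by the maximum possible entropy for this many distinct genres.
    return entropy / math.log(len(counts))


balanced = ["jazz", "pop", "classical", "edm"] * 25
skewed = ["classical"] * 97 + ["jazz", "pop", "edm"]
print(round(genre_diversity(balanced), 3))  # 1.0
print(round(genre_diversity(skewed), 3))    # far below 1.0
```

Because the score is normalized by the number of genres present, two evenly split genres also score 1.0, so in practice it would be read alongside the raw genre count.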

Another key approach is the use of advanced neural network architectures, such as generative adversarial networks (GANs) and transformers. A GAN pairs two competing neural networks: a generator that creates music and a discriminator that tries to tell generated music apart from real examples. This competition pushes the generator toward increasingly realistic output. Transformers, which are well suited to sequential data, have also been applied successfully to music generation because their attention mechanism can model long-range dependencies and complex musical structures.
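The adversarial competition between generator and discriminator can be made concrete with the standard GAN objectives: binary cross-entropy for the discriminator and the common "non-saturating" loss for the generator. This is a minimal sketch that scores single probabilities rather than batches; there are no networks or audio here, and the discriminator outputs are made-up numbers chosen to show how the two losses move in opposite directions.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for the discriminator on one real and one generated clip.

    d_real: discriminator's probability that a real clip is real.
    d_fake: discriminator's probability that a generated clip is real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the discriminator believes the fake is real."""
    return -math.log(d_fake)


# Early in training the discriminator easily spots generated clips...
print(round(discriminator_loss(0.9, 0.1), 3), round(generator_loss(0.1), 3))  # 0.211 2.303
# ...as the generator improves, its loss falls and the discriminator's rises.
print(round(discriminator_loss(0.9, 0.5), 3), round(generator_loss(0.5), 3))  # 0.799 0.693
```

In a real system these losses would be averaged over batches and minimized alternately by gradient descent on each network's parameters.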

Furthermore, techniques like style transfer allow AI models to learn and apply the stylistic characteristics of one piece of music to another, effectively creating variations or reinterpretations of existing compositions in different styles.
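As a deliberately simple illustration of moving one stylistic trait onto existing material, the sketch below converts straight eighth notes into a swung jazz feel. It is a hand-written rule, not learned neural style transfer; a trained model would infer transformations like this from data rather than having them coded in. `apply_swing` and its beat-based onset representation are assumptions made for the example.

```python
import math


def apply_swing(onsets: list[float], ratio: float = 2 / 3) -> list[float]:
    """Convert straight eighth notes to swung eighths (hypothetical helper).

    Onsets are measured in beats. Any note that starts exactly halfway through a
    beat (an off-beat eighth) is delayed to `ratio` of the beat; 2/3 gives the
    common triplet swing feel. On-beat notes are left unchanged.
    """
    swung = []
    for t in onsets:
        beat = math.floor(t)
        if math.isclose(t - beat, 0.5):
            swung.append(beat + ratio)
        else:
            swung.append(t)
    return swung


straight = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
print([round(t, 3) for t in apply_swing(straight)])
# [0.0, 0.667, 1.0, 1.667, 2.0, 2.667]
```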

Factors Influencing Style Generation

The generation of diverse musical styles by AI is not a random process; it’s heavily influenced by a complex interplay of parameters and the data it’s trained on. Understanding these factors is crucial for controlling and predicting the output of AI music generation systems. This section delves into the key elements that shape the stylistic outcome of AI-generated music.

The style of music an AI generates is directly determined by the data it has been exposed to during its training phase. This data encompasses various musical features, including melody, harmony, rhythm, instrumentation, and overall structure. The parameters used during the generation process further refine the stylistic expression, allowing for a degree of control over the final output.
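One generation-time parameter that many sequence models expose is sampling temperature (whether a particular tool offers it is an assumption). It does not change what the model learned from its training data; it only reshapes how boldly the model samples from that knowledge. The sketch below applies temperature to a model's raw scores for the next note; the scores are invented for illustration.

```python
import math
import random


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores for the next note into probabilities.

    Lower temperatures sharpen the distribution (safer, more stereotyped output);
    higher temperatures flatten it (more surprising, less idiomatic output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {note: score / temperature for note, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {note: math.exp(s - peak) for note, s in scaled.items()}
    total = sum(weights.values())
    return {note: w / total for note, w in weights.items()}


def sample_next_note(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one note according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    notes = list(probs)
    return rng.choices(notes, weights=[probs[n] for n in notes], k=1)[0]


# Hypothetical scores a model might assign to the next note after a C major chord.
logits = {"C": 2.0, "E": 1.5, "G": 1.0, "F#": -1.0}
print(softmax_with_temperature(logits, 0.5)["C"])  # most of the mass on "C"
print(softmax_with_temperature(logits, 2.0)["C"])  # noticeably less concentrated
print(sample_next_note(logits, 1.0, random.Random(42)))
```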

Parameter Influence on Genre

The following table illustrates how various parameters affect the generated musical genre. The examples are illustrative, and the Limitation column highlights the challenges of achieving precise control.

| Parameter | Genre Impact | Example | Limitation |
|---|---|---|---|
| Tempo | Influences the perceived energy and mood. Faster tempos are often associated with energetic genres like pop or rock, while slower tempos might suggest ballads or ambient music. | A tempo of 120 bpm might lead to a pop song, while a tempo of 60 bpm could result in a slow ballad. | Tempo alone doesn’t define a genre; other parameters are equally crucial. A fast tempo doesn’t automatically make a piece of music heavy metal. |
| Instrumentation | Significantly shapes the timbre and overall sonic character. The presence of specific instruments strongly suggests certain genres. | The use of synthesizers, drum machines, and vocoders might point toward electronic dance music (EDM), while acoustic guitars and strings suggest folk or classical music. | The same instrument can be used across multiple genres. For instance, a guitar appears in both rock and flamenco. |
| Harmonic Progression | Dictates the underlying harmonic structure and strongly influences mood and genre. Major keys often convey happiness, while minor keys can evoke sadness or drama. | Simple, repetitive progressions might suggest pop or folk, while complex, chromatic progressions could indicate jazz or classical music. | Harmonic progressions alone are insufficient to define a genre. Many genres share similar harmonic patterns. |
| Rhythm | Determines the rhythmic feel and groove, strongly influencing the genre. Complex polyrhythms are common in Afrobeat, while simpler, repetitive rhythms are characteristic of many pop songs. | A driving, syncopated rhythm might suggest funk or hip-hop, while a straightforward four-on-the-floor beat is common in many dance genres. | Rhythm alone is not a definitive genre identifier; other musical elements must be considered in conjunction. |
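The table's recurring limitation, that no single parameter defines a genre, can be seen in a toy rule-based scorer that adds up evidence from tempo, instrumentation, and rhythm. This is not how generative models work internally (they learn such correlations implicitly from data), and the genre profiles below are hypothetical, hand-picked values for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenreProfile:
    name: str
    tempo_range: tuple[int, int]  # inclusive bpm range
    instruments: frozenset[str]
    rhythm: str


# Hypothetical, hand-picked profiles for illustration only.
PROFILES = [
    GenreProfile("edm", (118, 135), frozenset({"synthesizer", "drum machine", "vocoder"}), "four-on-the-floor"),
    GenreProfile("ballad", (55, 80), frozenset({"piano", "strings", "voice"}), "straight"),
    GenreProfile("funk", (95, 120), frozenset({"electric bass", "electric guitar", "drums"}), "syncopated"),
]


def score(profile: GenreProfile, tempo: int, instruments: set[str], rhythm: str) -> float:
    """Add up evidence from several parameters; no single one decides the genre."""
    lo, hi = profile.tempo_range
    tempo_match = 1.0 if lo <= tempo <= hi else 0.0
    instrument_match = len(profile.instruments & instruments) / len(profile.instruments)
    rhythm_match = 1.0 if rhythm == profile.rhythm else 0.0
    return tempo_match + instrument_match + rhythm_match


def best_genre(tempo: int, instruments: set[str], rhythm: str) -> str:
    return max(PROFILES, key=lambda p: score(p, tempo, instruments, rhythm)).name


print(best_genre(124, {"synthesizer", "drum machine"}, "four-on-the-floor"))  # edm
# 110 bpm falls in the funk tempo range, but instrumentation and rhythm outvote it.
print(best_genre(110, {"piano", "strings"}, "straight"))  # ballad
```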

Role of Training Data in Stylistic Range

The training data forms the foundation upon which an AI music generator builds its stylistic understanding. The diversity and quality of this data directly determine the breadth and depth of the generated music’s stylistic range. A model trained on a vast and diverse dataset encompassing various genres and eras will exhibit a wider stylistic range than one trained on a limited dataset.

For example, an AI trained primarily on classical music will likely generate compositions with classical characteristics, such as complex harmonies, counterpoint, and formal structures. In contrast, an AI trained on a diverse dataset including classical, jazz, pop, and electronic music will be capable of generating music across a wider spectrum of styles, potentially blending elements from different genres.

Another example would be an AI trained solely on the works of a single composer, such as Bach. The output would heavily reflect Bach’s style, including his characteristic counterpoint and harmonic language. This limited dataset would severely restrict the AI’s ability to generate music in other styles.
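The restriction is easy to see with a model far simpler than a neural network: a first-order Markov chain trained on a single melody. The sketch below (the melody is made up, not taken from Bach) can only ever produce note-to-note transitions that occur in its training data, an extreme version of the narrowing described above.

```python
import random
from collections import defaultdict


def train_markov(melody: list[str]) -> dict[str, list[str]]:
    """Record every note-to-note transition observed in the training melody."""
    transitions: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], start: str, length: int, rng: random.Random) -> list[str]:
    """Random walk over observed transitions; stops early at a note with no successors."""
    notes = [start]
    while len(notes) < length:
        options = transitions.get(notes[-1])
        if not options:
            break
        notes.append(rng.choice(options))
    return notes


training = ["C4", "D4", "E4", "F4", "G4", "F4", "E4", "D4", "C4"]  # made-up melody
model = train_markov(training)
print(generate(model, "C4", 12, random.Random(1)))
```

However long the output, it never contains a pitch or a melodic step that the training melody lacked.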

Experiment: Training Data Subsets and Genre Generation

To demonstrate the impact of specific training data subsets, an experiment could be designed focusing on the generation of jazz music. Three different models would be trained:

- Model A: Trained on a large dataset of diverse jazz subgenres (e.g., bebop, swing, cool jazz, fusion).

- Model B: Trained on a smaller dataset focusing solely on bebop jazz.

- Model C: Trained on a dataset combining bebop and classical music.

After training, each model would be prompted to generate a jazz composition. The resulting compositions would then be analyzed for stylistic characteristics, comparing their adherence to bebop conventions, harmonic complexity, rhythmic patterns, and overall melodic structure. This comparison would reveal how the different training data subsets influence the generated music’s adherence to a specific jazz subgenre (bebop) and its potential fusion with other styles (classical music in Model C’s case).
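Comparing the three models requires measurable features. One hypothetical proxy for harmonic and melodic complexity is the share of notes falling outside the prevailing key, since bebop lines lean heavily on chromatic approach tones. The sketch assumes the generated music has been rendered to MIDI note numbers and that the key is known; it ignores chords, rhythm, and phrasing, so it would be only one metric among several.

```python
MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the tonic


def chromatic_ratio(midi_pitches: list[int], tonic: int = 0) -> float:
    """Fraction of notes whose pitch class lies outside the major scale built on `tonic`.

    `tonic` is a pitch class (0 = C, 5 = F, ...). Returns 0.0 for an empty input.
    """
    if not midi_pitches:
        return 0.0
    scale = {(tonic + step) % 12 for step in MAJOR_SCALE}
    outside = sum(1 for p in midi_pitches if p % 12 not in scale)
    return outside / len(midi_pitches)


diatonic_line = [60, 62, 64, 65, 67, 69, 71, 72]   # C major scale
chromatic_line = [60, 61, 62, 63, 64, 66, 67, 68]  # heavy chromatic approach tones
print(chromatic_ratio(diatonic_line))   # 0.0
print(chromatic_ratio(chromatic_line))  # 0.5
```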

Creative Control and User Interaction

AI music generation tools are rapidly evolving and offer users increasingly fine-grained creative control. Moving beyond simple genre selection, these platforms let users shape the stylistic nuances and structural elements of the music through a combination of intuitive interfaces and algorithms that respond to user input. Users can directly manipulate various aspects of the generated music to achieve their desired aesthetic.

This ranges from selecting specific instruments and tempos to adjusting the harmonic complexity and rhythmic patterns. The level of customization varies across different platforms, but the general trend is toward increasingly granular control, allowing for the creation of truly unique and personalized musical experiences.

Methods for Controlling AI-Generated Music Style and Genre

Several methods facilitate user control over the style and genre of AI-generated music. Many platforms offer pre-set genre options, ranging from classical and jazz to electronic dance music and hip-hop. Beyond these basic choices, however, users can often fine-tune the output through a variety of parameters. These might include selecting specific instruments or instrument families, adjusting the tempo and time signature, specifying the desired key and mode, and controlling the overall dynamic range.

More advanced tools may allow for manipulation of harmonic progressions, rhythmic structures, and even melodic contours. For example, a user might choose a “blues” genre but then specify a faster tempo and a more percussive rhythmic feel, resulting in a unique blend of blues and perhaps funk or rock elements.
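The controls listed above can be pictured as a single request object passed to a generator. The sketch below is hypothetical: `GenerationRequest`, its field names, and the allowed modes are assumptions for illustration, not the API of any real platform. It encodes the blues example from the text as a faster, more percussive variant of a blues preset.

```python
from dataclasses import dataclass, field

VALID_MODES = {"major", "minor", "dorian", "mixolydian"}  # hypothetical supported modes


@dataclass
class GenerationRequest:
    """Hypothetical bundle of user-facing controls for an AI music generator."""
    genre: str
    tempo_bpm: int = 100
    time_signature: tuple[int, int] = (4, 4)
    key: str = "C"
    mode: str = "major"
    instruments: list[str] = field(default_factory=list)
    rhythmic_feel: str = "straight"

    def __post_init__(self) -> None:
        # Reject values that no generator could sensibly honor.
        if not 20 <= self.tempo_bpm <= 300:
            raise ValueError(f"tempo {self.tempo_bpm} bpm is out of range")
        beats, unit = self.time_signature
        if beats < 1 or unit not in (1, 2, 4, 8, 16):
            raise ValueError(f"invalid time signature {beats}/{unit}")
        if self.mode not in VALID_MODES:
            raise ValueError(f"unsupported mode {self.mode!r}")


# A blues preset pushed faster and more percussive.
request = GenerationRequest(
    genre="blues",
    tempo_bpm=140,
    key="E",
    mode="mixolydian",
    instruments=["electric guitar", "bass", "drums"],
    rhythmic_feel="percussive",
)
print(request)
```

Validating at construction time means an impossible combination is caught before any generation work starts.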

Blending and Hybridizing Musical Styles with AI

AI tools excel at blending and hybridizing existing musical styles, creating novel genre combinations that would be difficult or impossible to achieve through traditional methods. The ability to manipulate individual musical elements independently allows for seamless integration of disparate styles. For instance, an AI system could combine the melodic structure of a classical sonata with the rhythmic complexity of Afrobeat, or the harmonic language of jazz with the textural richness of ambient music.

Examples of such novel genre combinations could include “Classical Trap,” which blends the sophisticated harmonies of classical music with the rhythmic drive and electronic elements of trap music, or “Ambient Flamenco,” fusing the ethereal soundscapes of ambient music with the passionate and rhythmic intensity of flamenco. The possibilities are virtually limitless.
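Because individual musical elements can be manipulated independently, one simple way to picture a blend is linear interpolation between two style descriptors. The feature values below are hypothetical and hand-assigned, not measurements; real systems more often interpolate learned latent representations, which are not interpretable feature by feature like this.

```python
def blend_styles(a: dict[str, float], b: dict[str, float], weight: float) -> dict[str, float]:
    """Linearly interpolate two style descriptors; weight=0 returns a, weight=1 returns b."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    if a.keys() != b.keys():
        raise ValueError("both styles must describe the same features")
    return {k: (1.0 - weight) * a[k] + weight * b[k] for k in a}


# Hypothetical, hand-assigned feature values (0-1 scales except tempo).
sonata = {"tempo_bpm": 120.0, "syncopation": 0.1, "harmonic_complexity": 0.8}
afrobeat = {"tempo_bpm": 110.0, "syncopation": 0.8, "harmonic_complexity": 0.3}
print(blend_styles(sonata, afrobeat, 0.5))
```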

User-Defined Style Parameters Beyond Standard Genre Classifications

The potential for user-defined style parameters extends far beyond standard genre classifications. Users can input more abstract parameters, such as “dark and melancholic,” “energetic and uplifting,” or “eerie and mysterious,” and the AI will attempt to translate these qualitative descriptions into specific musical characteristics. This opens up exciting possibilities for generating music that evokes specific emotions or atmospheres. Furthermore, some systems allow users to upload their own musical samples, allowing them to directly influence the generated music’s style by providing a specific sonic palette or thematic material.

This could involve uploading a favorite riff, a specific drum pattern, or even a complete composition, which the AI then uses as the basis for generating new, related material. This personalized approach helps tailor the AI’s output to the user’s preferences and creative vision.
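Translating qualitative descriptions into musical characteristics can be sketched, very crudely, as a lookup from mood words to parameter presets. Production systems that accept text prompts typically learn this mapping rather than using a hand-written table; `MOOD_PRESETS` and every value in it are hypothetical.

```python
import re

# Hypothetical lookup table; real text-conditioned systems learn this mapping.
MOOD_PRESETS = {
    "dark": {"mode": "minor", "register": "low"},
    "melancholic": {"mode": "minor", "tempo_bpm": 66},
    "energetic": {"tempo_bpm": 128, "dynamics": "loud"},
    "uplifting": {"mode": "major"},
    "eerie": {"mode": "locrian", "reverb": "long"},
    "mysterious": {"tempo_bpm": 72, "dynamics": "soft"},
}


def parameters_for(description: str) -> dict[str, object]:
    """Merge the presets of every recognized mood word; later words win on conflicts."""
    params: dict[str, object] = {}
    for word in re.findall(r"[a-z]+", description.lower()):
        params.update(MOOD_PRESETS.get(word, {}))
    return params


print(parameters_for("Dark and melancholic"))
# {'mode': 'minor', 'register': 'low', 'tempo_bpm': 66}
```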

Evaluation of AI-Generated Music

The evaluation of AI-generated music presents a unique challenge, requiring a framework that considers both its technical achievements and its artistic merit. Unlike human composition, where intentionality and emotional expression are often readily apparent, AI-generated music necessitates a more nuanced approach to assessment, focusing on its ability to emulate specific styles and its capacity to evoke emotional responses in listeners.

This evaluation must also acknowledge the evolving nature of AI music generation, as ongoing improvements in algorithms and datasets continuously shape the quality and diversity of the output. Assessing the quality of AI-generated music therefore requires a multi-faceted approach that goes beyond simple comparisons with human-composed works, combining objective and subjective evaluation of technical proficiency, stylistic consistency, and emotional impact.

Comparative Analysis: AI vs. Human Composition in Jazz

A comparative analysis of AI-generated jazz music against human-composed jazz reveals both striking similarities and significant differences. The following points highlight key aspects of this comparison:

- Similarities: AI can effectively replicate the harmonic structures, rhythmic patterns, and instrumental textures characteristic of jazz. For example, a model trained on a large dataset of Miles Davis’s recordings might closely reproduce his modal harmonies and distinctive trumpet phrasing. AI output can also emulate improvisation, though the process behind it differs significantly from a human musician improvising in real time.

- Differences: Human jazz musicians often infuse their music with personal experiences, emotions, and unique stylistic nuances that are difficult for AI to replicate. While AI can generate technically proficient jazz solos, they often lack the expressive phrasing, emotional depth, and improvisational spontaneity of a seasoned human musician. The “soul” or “feeling” present in human jazz is often absent in AI-generated counterparts, even if the technical aspects are comparable.

Evaluation Rubric for AI-Generated Music

A robust rubric for evaluating AI-generated music should incorporate both objective and subjective criteria. The following rubric provides a framework for assessing stylistic authenticity and artistic merit:

| Criterion | Excellent (5 points) | Good (4 points) | Fair (3 points) | Poor (2 points) | Unacceptable (1 point) |
|---|---|---|---|---|---|
| Stylistic Authenticity | Accurately reflects the chosen genre’s conventions and characteristics; highly convincing imitation. | Mostly reflects genre conventions; minor inconsistencies. | Shows some aspects of the chosen genre; noticeable inconsistencies. | Limited adherence to genre conventions; significant inconsistencies. | Fails to reflect the chosen genre’s characteristics. |
| Technical Proficiency | Technically flawless; masterful execution of musical elements. | Technically proficient; minor technical flaws. | Technically adequate; some noticeable flaws. | Technically flawed; frequent errors. | Technically inept; numerous significant errors. |
| Emotional Impact | Evokes strong and appropriate emotions in the listener; highly engaging. | Evokes some emotion; reasonably engaging. | Evokes limited emotion; somewhat engaging. | Evokes little or no emotion; not engaging. | Fails to evoke any emotional response. |
| Originality/Creativity | Demonstrates originality within the stylistic constraints; unexpected and inventive elements. | Shows some originality; mostly predictable. | Limited originality; highly predictable. | Lacks originality; entirely predictable. | Completely unoriginal and derivative. |
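The rubric translates naturally into a scoring sheet: each listener rates the four criteria from 1 to 5, giving a total between 4 and 20, and ratings can be averaged across a panel because emotional impact in particular is subjective. This is a minimal sketch; the field names are hypothetical, and equal weighting of the criteria is an assumption, since the rubric does not specify weights.

```python
from statistics import mean

CRITERIA = (
    "stylistic_authenticity",
    "technical_proficiency",
    "emotional_impact",
    "originality",
)


def rubric_total(scores: dict[str, int]) -> int:
    """Sum one listener's 1-5 ratings across the four criteria (range 4-20)."""
    for criterion in CRITERIA:
        if criterion not in scores:
            raise ValueError(f"missing score for {criterion}")
        if scores[criterion] not in range(1, 6):
            raise ValueError(f"{criterion} must be an integer from 1 to 5")
    return sum(scores[c] for c in CRITERIA)


def panel_averages(panel: list[dict[str, int]]) -> dict[str, float]:
    """Average each criterion over several listeners."""
    return {c: mean(listener[c] for listener in panel) for c in CRITERIA}


listener_a = {"stylistic_authenticity": 4, "technical_proficiency": 5, "emotional_impact": 3, "originality": 2}
listener_b = {"stylistic_authenticity": 4, "technical_proficiency": 4, "emotional_impact": 2, "originality": 3}
print(rubric_total(listener_a))  # 14
print(panel_averages([listener_a, listener_b]))
```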

Perceptual Aspects of AI-Generated Music

Human listeners perceive stylistic coherence and emotional impact in AI-generated music in diverse ways, often depending on their familiarity with the genre and their expectations. For example, a listener experienced in classical music might appreciate the technical precision of an AI-generated symphony but find it lacking in the emotional depth and narrative arc typically found in human-composed works. Conversely, a listener less familiar with classical music might find the AI-generated piece technically impressive and even emotionally moving, without necessarily discerning the subtle nuances missing from a human composition.

The perception of stylistic coherence often depends on the AI’s training data and its ability to seamlessly blend different musical elements. A lack of coherence might manifest as jarring transitions or inconsistencies in style, potentially leading to a negative listening experience. Emotional impact, on the other hand, is highly subjective and depends on individual preferences and interpretations, though certain musical features, such as dynamics and melodic contour, are generally recognized as contributing to emotional expression.

Future Directions and Challenges

The rapid advancement of AI in music generation presents exciting possibilities, but also significant hurdles. While current AI models can convincingly mimic existing styles, the true potential lies in their ability to create entirely new musical landscapes and explore uncharted sonic territories. However, achieving truly diverse and authentic representations of all musical styles, while navigating ethical concerns, remains a significant challenge. The potential for AI to generate entirely novel musical styles is immense.

Current algorithms, primarily based on deep learning, excel at recognizing patterns and recombining them. Trained on large datasets spanning diverse musical styles, these models can learn the underlying structures and conventions governing melody, harmony, rhythm, and timbre. This learning process could lead to the creation of entirely new musical languages that combine elements from disparate sources in unexpected and innovative ways.

Imagine an AI blending the complex rhythmic structures of West African drumming with the microtonal harmonies of Indonesian gamelan music, resulting in a genre previously unimaginable. This generative process could accelerate musical evolution, potentially leading to breakthroughs in musical expression.

Novel Musical Style Generation

AI’s capacity to synthesize novel musical styles hinges on its ability to transcend simple imitation and engage in genuine creative exploration. Current models often rely on statistical probabilities, generating music based on patterns observed in training data. True novelty, however, requires a leap beyond statistical prediction – a capacity for genuine originality and conceptual innovation. Researchers are exploring techniques such as generative adversarial networks (GANs) and reinforcement learning to push the boundaries of AI-generated music, encouraging more unpredictable and surprising outputs.

The development of more sophisticated AI models capable of understanding and manipulating musical concepts at a higher level of abstraction is crucial for achieving this goal. For example, an AI might be trained to understand musical concepts like “tension” and “release” and use this understanding to generate music with specific emotional impact, going beyond simply mimicking existing patterns.
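Even a crude computational reading of "tension" and "release" shows what such an abstraction might look like. The sketch assumes two-note sonorities given as MIDI pitch pairs and uses the conventional split into dissonant intervals (seconds, sevenths, tritone) and consonant ones; for simplicity it treats the perfect fourth as consonant, although common-practice theory treats it as dissonant above the bass. The helper names are hypothetical, and a model with genuine musical understanding would need far richer notions of tension than this.

```python
# Interval classes (in semitones, reduced to within an octave) treated as dissonant:
# minor/major second, tritone, minor/major seventh.
DISSONANT = {1, 2, 6, 10, 11}


def tension_curve(dyads: list[tuple[int, int]]) -> list[int]:
    """Mark each two-note sonority as tense (1) or stable (0)."""
    return [1 if abs(hi - lo) % 12 in DISSONANT else 0 for lo, hi in dyads]


def resolutions(curve: list[int]) -> int:
    """Count places where a tense sonority is immediately followed by a stable one."""
    return sum(1 for now, nxt in zip(curve, curve[1:]) if now == 1 and nxt == 0)


# C-E (major third), F-B (tritone), E-C (minor sixth): the classic tritone resolution.
progression = [(60, 64), (65, 71), (64, 72)]
curve = tension_curve(progression)
print(curve, resolutions(curve))  # [0, 1, 0] 1
```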

Challenges in Achieving Diverse and Authentic Representation

Achieving truly diverse and authentic representation of all musical styles presents a significant challenge. The quality of AI-generated music is heavily dependent on the quality and diversity of the training data. Biases present in existing datasets can lead to skewed or incomplete representations of certain musical traditions. For example, if a dataset heavily favors Western classical music, the resulting AI model may struggle to accurately generate music from other cultures.

Furthermore, accurately capturing the nuances of human musical expression – the subtle inflections, emotional depth, and cultural context – remains a significant technical hurdle. The complexity of musical expression, encompassing both technical proficiency and emotional depth, requires a level of understanding that current AI models are only beginning to approach. Overcoming these limitations requires the development of more sophisticated algorithms and the careful curation of diverse and representative training datasets.
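Careful curation can start with a simple audit of how the training data is distributed across musical traditions. The sketch below flags traditions whose share of a labeled dataset falls below a threshold; the labels, counts, and the 5% default are hypothetical. Note its blind spot: a tradition that is missing from the dataset entirely never appears in the labels, so no share-based audit can flag it.

```python
from collections import Counter


def underrepresented(labels: list[str], threshold: float = 0.05) -> list[str]:
    """Return traditions whose share of the dataset falls below `threshold`."""
    if not labels:
        return []
    counts = Counter(labels)
    total = len(labels)
    return sorted(t for t, c in counts.items() if c / total < threshold)


# Hypothetical dataset heavily skewed toward Western classical music.
dataset = ["western classical"] * 90 + ["gamelan"] * 6 + ["afrobeat"] * 4
print(underrepresented(dataset))  # ['afrobeat']
```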

Ethical Considerations in AI Music Generation

The use of AI in music generation raises significant ethical considerations, particularly concerning copyright and cultural appropriation. The question of ownership of AI-generated music remains largely unresolved. If an AI is trained on copyrighted material, does the resulting music inherit the same copyright protections? Similarly, the use of AI to generate music in specific styles raises concerns about cultural appropriation.

If an AI is trained on traditional music from a specific culture, does the use of this AI to generate new music constitute an ethical appropriation of that culture’s artistic heritage? Addressing these concerns requires careful consideration of intellectual property rights, cultural sensitivity, and the development of ethical guidelines for the use of AI in music generation. Transparency in the training data used and acknowledgment of the cultural origins of the generated music are crucial steps in mitigating these risks.

Furthermore, collaboration with artists and cultural communities is essential to ensure respectful and responsible use of AI in music creation.

Conclusion

The capacity of AI to generate diverse musical styles and genres represents a significant leap forward in music technology. While challenges remain in achieving truly authentic representation and addressing ethical concerns, the potential for creative exploration and innovation is undeniable. The future of music creation may well involve a collaborative partnership between human artists and AI, resulting in new sounds and forms of musical expression never before imagined.