Assessing the creative potential of AI in music production means looking closely at where technology and artistry meet. This exploration covers AI’s current capabilities in composition, arrangement, mixing, and mastering, and compares its output to human-created music. We’ll examine various AI algorithms, propose workflows that integrate AI tools, and analyze the creative output of different platforms, highlighting both strengths and weaknesses.

The ethical considerations and commercial viability of AI-generated music will also be addressed, considering its impact on human musicians and the future of the music industry.

This analysis will move beyond simple technical descriptions to explore the deeper implications of AI’s role in shaping musical creativity, examining its potential to augment human expression, generate novel musical styles, and personalize the listening experience. We will consider both the exciting possibilities and the potential challenges presented by this rapidly evolving field.

Defining AI’s Role in Music Production

Artificial intelligence is rapidly transforming music production, offering both creative tools and enhanced efficiency. While not yet capable of replacing human creativity entirely, AI assists musicians and producers at various stages of the creative process, from initial composition to final mastering. This section examines the specific roles AI currently plays and its impact on the sonic landscape. AI’s capabilities in music production are steadily advancing.

Currently, AI excels in automating tasks, offering creative suggestions, and even generating entirely novel musical ideas. However, the level of human intervention required varies depending on the task and the desired outcome.

AI Capabilities in Music Production Tasks

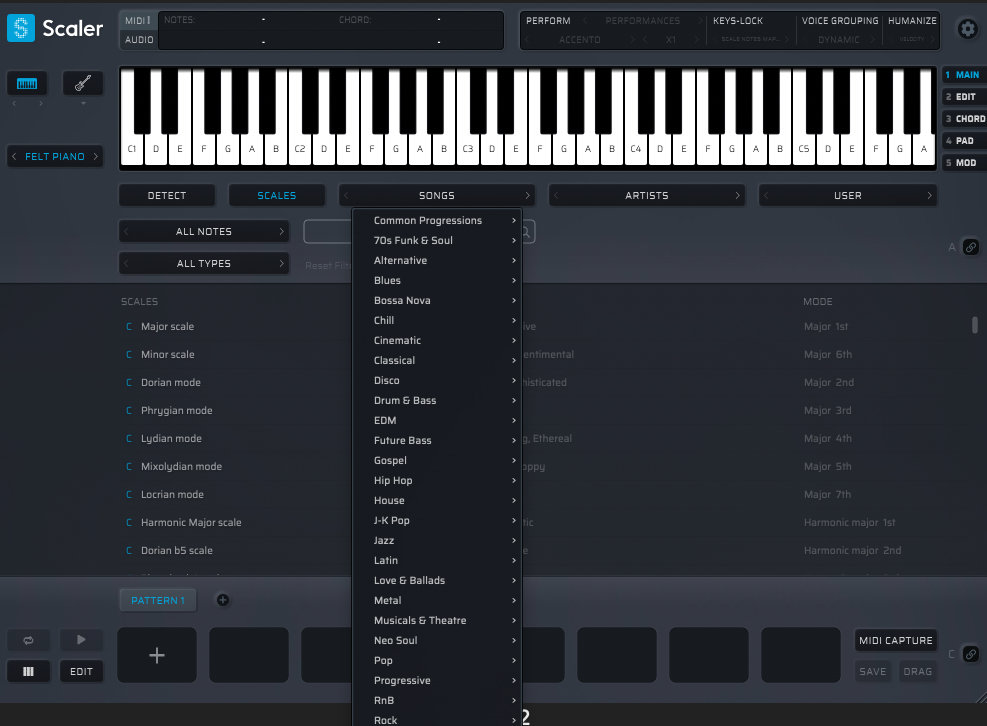

AI algorithms are increasingly utilized across various stages of music production. In composition, AI can generate melodies, harmonies, and rhythms based on input parameters or learned musical styles. Arrangement tools powered by AI can suggest optimal instrument placements, transitions, and dynamic changes. AI also aids in mixing by suggesting EQ settings, compression levels, and other parameters to achieve a balanced and polished sound.

Finally, mastering tools can optimize loudness, dynamics, and overall sonic characteristics for various playback environments.
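To make the idea of a tool "suggesting levels" more concrete, here is a minimal Python sketch of the arithmetic behind simple level matching: measure a track's RMS level and compute the gain needed to reach a target. The function names and the -18 dBFS target are assumptions chosen for illustration. Commercial AI mixing and mastering tools are not described in this article and certainly do far more (perceptual loudness, spectral analysis, learned models), so treat this only as the simplest possible starting point.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of samples in the range [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def gain_to_target_db(samples, target_dbfs=-18.0):
    """Gain in dB that would bring the track's RMS level to target_dbfs.

    The -18 dBFS default is an illustrative assumption, not a standard
    taken from any particular tool.
    """
    level = rms(samples)
    if level == 0:
        return 0.0  # silence: no meaningful gain to suggest
    current_dbfs = 20 * math.log10(level)
    return target_dbfs - current_dbfs

def apply_gain(samples, gain_db):
    """Scale every sample by the linear factor equivalent to gain_db."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]

if __name__ == "__main__":
    # A hypothetical, overly loud track represented by a short test signal.
    track = [0.5, -0.5, 0.5, -0.5]
    suggestion = gain_to_target_db(track)
    print(f"Suggested gain: {suggestion:.2f} dB")
```

In a real workflow the producer would treat such a number as a suggestion to audition, not a setting to accept blindly.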

Comparison of AI-Generated and Human-Composed Music

A key difference between AI-generated and human-composed music often lies in the emotional depth and narrative coherence. While AI can produce technically proficient and stylistically consistent music, it may lack the nuanced emotional expression and storytelling capabilities of human composers. For example, an AI might generate a technically perfect pop song, adhering to all the conventions of the genre, but it might lack the subtle emotional shifts and unexpected twists that make a human-composed song memorable.

In terms of specific musical elements, AI-generated music might exhibit predictable harmonic progressions or rhythmic patterns, whereas human compositions often incorporate more surprising and unpredictable elements. The melodic contour and phrasing might also differ; human composers often prioritize expressiveness and emotional impact in their melodic choices, while AI might prioritize adherence to stylistic rules.

AI Algorithms in Music Production

Several types of AI algorithms are employed in music production. Generative Adversarial Networks (GANs) create new musical content by pitting two neural networks against each other: a generator that produces music and a discriminator that tries to tell the generated material apart from real examples. Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, are designed for sequential data such as musical notes and are often used for tasks like melody generation and style transfer.

Other algorithms, such as Markov chains and hidden Markov models, are also utilized for simpler tasks like generating rhythmic patterns or chord progressions. The choice of algorithm depends on the specific task and the desired level of creativity and control.
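As a rough illustration of the Markov-chain approach, the sketch below generates a chord progression from a first-order transition table. The chords, the probabilities, and the function name `generate_progression` are all made up for this example; a real system would estimate the transition weights from a corpus of existing music.

```python
import random

# Hypothetical transition table: each chord maps to the chords that may
# follow it, with illustrative (not learned) probabilities.
TRANSITIONS = {
    "C":  {"F": 0.4, "G": 0.4, "Am": 0.2},
    "F":  {"G": 0.5, "C": 0.3, "Am": 0.2},
    "G":  {"C": 0.6, "Am": 0.3, "F": 0.1},
    "Am": {"F": 0.5, "G": 0.3, "C": 0.2},
}

def generate_progression(start, length, seed=None):
    """Walk the chain: each chord depends only on the one before it."""
    rng = random.Random(seed)  # a seed makes the result reproducible
    progression = [start]
    while len(progression) < length:
        options = TRANSITIONS[progression[-1]]
        next_chord = rng.choices(
            list(options.keys()), weights=list(options.values()), k=1
        )[0]
        progression.append(next_chord)
    return progression

if __name__ == "__main__":
    print(" - ".join(generate_progression("C", 8, seed=42)))
```

Because each step looks only at the previous chord, the output tends to be locally plausible but has no sense of larger form, which is one reason such models suit simpler tasks.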

Hypothetical AI-Assisted Music Production Workflow

A typical music production workflow could be significantly enhanced by AI tools. A producer might begin by using an AI to generate a basic melody and harmonic structure based on a desired genre or mood. Then, the producer could refine this composition using a digital audio workstation (DAW), adding instruments, arranging sections, and editing individual notes. AI-powered mixing tools could assist in balancing the levels of individual tracks, ensuring a consistent sound across different frequencies.

Finally, AI-driven mastering tools could optimize the final mix for various playback environments, preparing it for release. Throughout this process, the human producer retains creative control, using AI as a powerful assistant to accelerate the workflow and explore new creative possibilities. This collaborative approach leverages the strengths of both human creativity and AI’s computational power.

Evaluating the Creative Output of AI Music Systems

The assessment of AI’s creative potential in music hinges on evaluating the originality, artistic merit, and limitations of its generated output. This involves analyzing both the technical achievements and the subjective artistic impact of AI-composed and produced music. While AI can generate technically proficient music, judging its creativity requires a nuanced understanding of what constitutes artistic merit in the context of AI-driven creation.

Examples of Original and Artistically Meritorious AI-Generated Music

Several AI music systems have produced outputs demonstrating originality and artistic merit. For instance, Amper Music has created unique soundtracks for various media projects, showcasing its ability to adapt to specific stylistic requirements while maintaining a degree of creative flair. Jukebox, developed by OpenAI, has generated songs that evoke the styles of specific artists, demonstrating a capacity for stylistic imitation and creative variation.

These examples highlight AI’s potential to not only generate technically sound music but also to explore different musical styles and create pieces with unique characteristics. The originality lies not only in the novelty of the compositions themselves but also in the AI’s ability to combine and manipulate existing musical elements in unexpected ways.

AI’s Expansion of Creative Boundaries in Music Composition and Production

AI has pushed creative boundaries by facilitating novel approaches to music composition and production. One notable area is the exploration of unconventional sound palettes and textures. AI algorithms can generate sounds and timbres that are difficult or impossible to produce using traditional instruments or techniques, opening up new avenues for sonic exploration. Furthermore, AI can automate complex tasks such as mixing and mastering, allowing musicians to focus on higher-level creative decisions.

This automation empowers musicians to experiment more freely, pushing the boundaries of what’s possible within their chosen genre or style. The speed at which AI can generate variations and explore different musical possibilities also accelerates the creative process significantly.

Limitations of Current AI Music Systems in Emotional Expression and Storytelling

Despite advancements, current AI music systems have limitations in conveying genuine emotional depth and crafting compelling narratives within their musical outputs. While AI can mimic stylistic elements associated with certain emotions, it often lacks the nuanced understanding of human emotion necessary to create truly moving or impactful music. Similarly, AI struggles with constructing coherent narratives within a musical piece.

The lack of lived experience and subjective interpretation limits the AI’s ability to translate complex human emotions and experiences into a musically compelling form. This limitation points to the crucial role of human creativity and emotional intelligence in music creation, aspects that remain challenging to replicate in current AI systems.

Comparative Analysis of AI Music Generation Platforms

A comparative analysis of different AI music generation platforms reveals varying strengths and weaknesses. The following table provides a summary:

| Platform Name | Strengths | Weaknesses | Example Output Description |
|---|---|---|---|
| Amper Music | Ease of use, wide range of styles, customizable parameters | Limited creative control, potential for formulaic outputs | A dynamically generated soundtrack for a corporate video, featuring upbeat and motivational melodies. |
| Jukebox (OpenAI) | Ability to mimic specific artist styles, generation of full songs | Output quality can be inconsistent, potential for copyright infringement | A song reminiscent of a particular artist’s style, showcasing unique melodic and harmonic progressions. |
| AIVA | Strong in classical and cinematic music, sophisticated orchestration | Can sound somewhat generic at times, less versatile in non-classical genres | An epic orchestral score with complex harmonies and dynamic changes, suitable for a film or video game. |
| Soundful | Focus on user-friendly interface, intuitive workflow | Limited stylistic range compared to other platforms | Simple, royalty-free music suitable for background use in videos or podcasts. |

The Impact of AI on Human Musicians and Composers

The integration of artificial intelligence into music production presents a paradigm shift, impacting the roles and creative processes of human musicians and composers. While concerns about AI replacing human artists are valid, a more nuanced perspective reveals a potential for powerful collaboration and augmentation of human creativity. This section explores the collaborative possibilities, the ways AI enhances human artistic expression, and the ethical implications arising from this technological advancement. AI’s role in music creation is not about replacing human artists but rather about providing them with new tools and possibilities.

AI systems can handle tedious tasks, freeing up human musicians to focus on the more nuanced aspects of their craft, fostering a more efficient and potentially more expressive workflow. This collaborative model, where humans and AI work in tandem, represents a significant shift in the creative landscape.

AI and Human Collaboration in Music Production

The potential for collaboration between human musicians and AI music systems is vast. AI can act as a sophisticated “instrument” or a creative partner, offering suggestions, generating variations, or even composing entire sections of a piece. For example, a composer might use AI to generate a range of melodic ideas based on a specific harmonic structure, then select and refine those ideas, incorporating them into their overall composition.

The AI could also handle tasks such as orchestration, mixing, and mastering, allowing the human composer to focus on the conceptual and emotional aspects of the music. This collaborative approach leverages the strengths of both human creativity and AI’s computational power, resulting in a synergistic creative process.

AI as an Augmentation of Human Creativity

AI tools can significantly augment human creativity rather than replace it. They offer new avenues for exploration and experimentation, pushing the boundaries of musical expression. Imagine a songwriter struggling with a particular lyrical theme. An AI system could analyze existing lyrics, identify patterns and stylistic choices, and suggest alternative phrasing or rhyme schemes, sparking new ideas and overcoming creative blocks.

Similarly, a composer could use AI to generate novel harmonic progressions or rhythmic patterns, leading to unexpected and innovative musical outcomes. The AI acts as a catalyst, expanding the possibilities available to the human artist, rather than dictating the creative direction.

Ethical Considerations in AI Music Creation and Copyright

The use of AI in music creation raises important ethical considerations, particularly concerning copyright and ownership. If an AI system generates a musical work, who owns the copyright? Is it the developer of the AI, the user who prompted the system, or the AI itself? These questions are complex and lack definitive answers in current legal frameworks.

Furthermore, the potential for AI to mimic the style of existing artists raises concerns about plagiarism and the exploitation of creative works. Clear guidelines and legal frameworks are necessary to address these issues, ensuring fairness and protecting the rights of both human artists and AI developers. Establishing transparent authorship attribution methods for AI-assisted music is crucial for maintaining the integrity of the creative process.

Scenario: A Successful Human-AI Collaboration

Consider a scenario where a renowned composer, known for her complex orchestral works, collaborates with an AI music system. The composer, let’s call her Anya Petrova, provides the AI with a conceptual framework for a new symphony, outlining the emotional arc and desired instrumentation. The AI, in turn, generates a range of melodic and harmonic possibilities based on Anya’s input, experimenting with different textures and instrumental combinations.

Anya then selects and refines the AI’s suggestions, integrating them into her overall composition. The AI also assists with orchestration, ensuring that the musical ideas are effectively realized across the various instrumental sections. The final symphony is a product of both Anya’s artistic vision and the AI’s computational capabilities, a seamless blend of human creativity and technological innovation.

Anya retains authorship, with the AI acknowledged as a collaborative tool in the creation process. The resulting symphony is both innovative and deeply personal, showcasing the power of human-AI synergy in artistic expression.

Future Trends and Potential of AI in Music

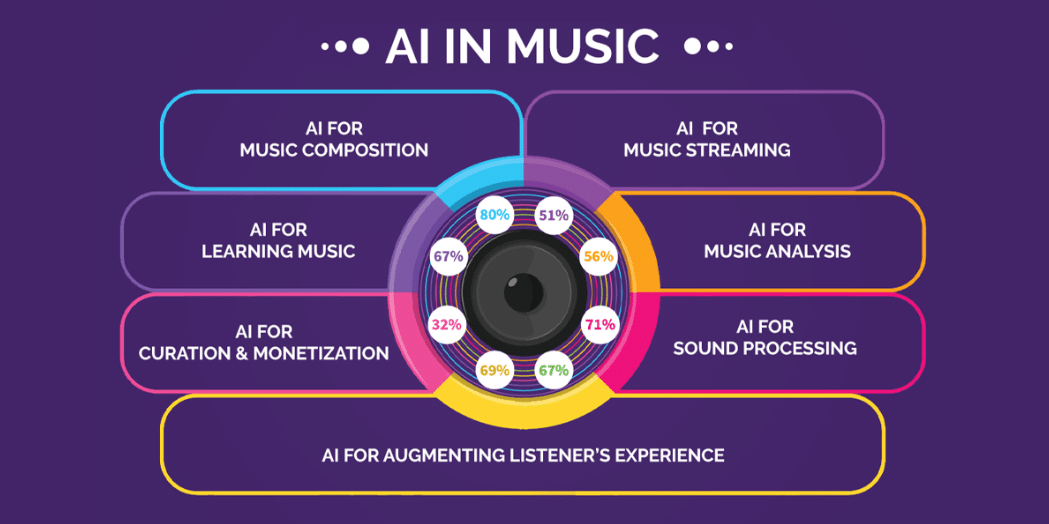

The integration of artificial intelligence into music production is rapidly evolving, promising a future where technology and artistry converge in unprecedented ways. We can anticipate significant advancements in AI’s capabilities, leading to a transformation of how music is created, experienced, and consumed. This section explores these future trends, focusing on technological progress, genre evolution, personalized listening experiences, and a conceptual visualization of AI’s role in the music industry. AI’s influence on music production will be profound.

We’re moving beyond simple tasks like auto-tuning and beat generation.

Technological Advancements in AI Music Production

Future advancements will likely center on more sophisticated algorithms capable of understanding and replicating musical nuances with greater accuracy. This includes improved generative models that can create complete musical pieces with greater emotional depth and stylistic consistency, moving beyond simple pattern recognition to genuine musical composition. We can expect to see breakthroughs in AI’s ability to understand and respond to human input, allowing for more intuitive and collaborative workflows between human musicians and AI systems.

For example, an AI might be able to learn a musician’s style and preferences, then generate musical ideas that complement their own compositions, acting as a highly skilled and adaptable creative partner. The development of more powerful and efficient hardware will also play a crucial role, allowing for the processing of increasingly complex musical data and the creation of more intricate and realistic soundscapes.

AI’s Influence on Musical Genres and Styles

AI has the potential to significantly influence the evolution of musical genres and styles. By analyzing vast datasets of existing music, AI algorithms can identify patterns and trends, leading to the creation of entirely new genres that blend elements of existing styles in unexpected and innovative ways. Imagine an AI system combining the rhythmic complexity of Afrobeat with the melodic structures of classical Indian music, creating a genre previously unimaginable.

This process could accelerate the rate of musical innovation, fostering a more diverse and dynamic musical landscape. Moreover, AI could personalize the creation of music within existing genres, allowing artists to explore subtle variations and experiment with unique sonic textures, ultimately pushing the boundaries of established styles.

Personalized Music Listening Experiences

The future of music listening is deeply intertwined with AI-powered personalization. AI algorithms can analyze individual listening habits, preferences, and emotional responses to create customized playlists and recommendations. This goes beyond simple algorithmic suggestions; imagine AI composing music specifically tailored to an individual’s mood, activity, or even physiological state. This level of personalization could transform the music listening experience, making it more engaging and meaningful for each listener.

Furthermore, AI could facilitate the creation of interactive musical experiences, where listeners can influence the direction and evolution of a musical piece in real-time, creating a unique and dynamic listening journey for every individual. Spotify’s personalized playlists are a rudimentary example of this trend, but future iterations will offer significantly more sophisticated and individualized experiences.
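One simple way to picture preference-based personalization is to describe both a listener and each track as a vector of features and rank tracks by how closely they match. The Python sketch below does this with cosine similarity. The feature dimensions (energy, calmness, tempo), the track names, and the numbers are hypothetical, and this is not a description of how Spotify or any other service actually builds its recommendations.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)

def rank_tracks(listener_profile, tracks):
    """Return track names ordered from best to worst match."""
    return sorted(
        tracks,
        key=lambda name: cosine_similarity(listener_profile, tracks[name]),
        reverse=True,
    )

if __name__ == "__main__":
    # Hypothetical features: (energy, calmness, tempo), each scaled to 0..1.
    listener = [0.9, 0.1, 0.8]
    catalog = {
        "upbeat_pop": [0.8, 0.2, 0.9],
        "ambient_drift": [0.1, 0.9, 0.2],
        "mid_tempo_rock": [0.6, 0.3, 0.6],
    }
    for name in rank_tracks(listener, catalog):
        print(name)
```

The same matching idea could, in principle, steer a generative system by feeding the listener's profile in as a conditioning input rather than using it only to pick existing tracks.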

Conceptual Visualization: The AI Music Ecosystem

The visualization depicts a vibrant, interconnected network representing the future AI music ecosystem. At the center is a radiant sphere, symbolizing the core AI system, pulsating with light and energy, representing the constantly evolving algorithms. From this sphere, tendrils of light extend outwards, connecting to various nodes representing human musicians, composers, and listeners. Each node glows with a unique color, representing the individual’s creative style or musical preference.

The connections between the nodes represent the collaborative relationships between humans and AI, illustrating the seamless integration of technology and artistry. Surrounding the network is a swirling nebula of sound waves, representing the vast and ever-expanding universe of musical possibilities unlocked by AI. The overall effect is one of dynamic interplay, highlighting the collaborative nature of the future of music creation and consumption, where AI acts as a powerful tool in the hands of human artists, empowering them to create and share music in ways previously unimaginable.

Assessing the Commercial Viability of AI-Generated Music

The burgeoning field of AI music production presents a compelling proposition for the music industry, promising both significant cost reductions and the creation of novel sonic landscapes. However, the commercial viability of AI-generated music hinges on a complex interplay of factors, including production costs, market identification, monetization strategies, and the inherent challenges of navigating copyright and intellectual property. A thorough assessment is crucial to understand its true potential. The integration of AI into music production makes a strong cost-benefit case.

Traditional music production often involves substantial expenses related to studio time, musician fees, and mixing/mastering costs. AI, on the other hand, can automate many of these processes, reducing labor costs significantly. While the initial investment in AI software and hardware might be high, the long-term savings from reduced human input can be considerable, particularly for large-scale projects or repetitive tasks like composing background music.

This efficiency translates into higher profit margins and faster turnaround times.

Cost and Benefit Comparison of AI and Traditional Music Production

AI-driven music production presents a spectrum of advantages over traditional methods. Reduced labor costs are a primary driver, allowing for the creation of music at a fraction of the cost. Furthermore, AI can generate vast amounts of musical content quickly, opening up opportunities for personalized music experiences and on-demand composition. However, it’s crucial to acknowledge that the initial investment in AI software and the ongoing maintenance costs should be considered.

Moreover, human oversight and refinement remain necessary for high-quality output, requiring a balance between automation and human creativity. The success of AI-driven music production will depend on effectively managing this balance.

Potential Markets and Applications for AI-Generated Music

AI-generated music finds application across various sectors. The film and video game industries are prime examples. AI can rapidly generate diverse musical scores tailored to specific scenes or game mechanics, reducing production time and costs. Similarly, advertising agencies can utilize AI to create custom jingles and background music for campaigns, adapting the music to target demographics and brand identities.

Furthermore, personalized music experiences, where AI generates unique compositions based on user preferences, are emerging as a potentially lucrative market. Companies like Amper Music have already shown commercial demand for AI-generated music by providing royalty-free tracks for various applications.

Challenges in Monetizing and Distributing AI-Generated Music

Monetizing and distributing AI-generated music present significant hurdles. Copyright and ownership issues remain complex. Determining the copyright holder—the AI developer, the user who inputs parameters, or the platform hosting the music—requires legal clarification. Moreover, the potential for AI to replicate existing musical styles raises concerns about plagiarism and fair use. Effective distribution strategies must address these issues, possibly involving licensing agreements that address the unique ownership challenges of AI-created content.

The industry needs to establish clear guidelines and legal frameworks to facilitate the smooth monetization of AI-generated music.

Hypothetical Business Model for AI-Driven Music Production

A hypothetical company specializing in AI-driven music production, “SonicAI,” could operate on a subscription-based model. Clients—film studios, game developers, or advertising agencies—would subscribe to access a library of AI-generated music or commission custom compositions. SonicAI could offer various tiers of service, ranging from basic access to a large library of royalty-free tracks to bespoke compositions with human refinement.

Revenue would be generated through subscription fees and customized composition fees, ensuring profitability while providing diverse service options to cater to varying client needs. This model would leverage the efficiency of AI while maintaining a human element to address quality and creative control.

Conclusion

The integration of AI into music production presents a paradigm shift, offering both unprecedented creative opportunities and significant ethical considerations. While AI currently excels in specific tasks, its capacity for emotional depth and narrative storytelling remains a key area for development. Ultimately, the future likely involves a collaborative model, where human creativity and AI’s computational power synergistically push the boundaries of musical expression.

The successful navigation of copyright issues and the development of sustainable business models will be crucial to realizing the full commercial potential of AI-generated music.