Best practices for building AI-driven big data pipelines are crucial for success in today’s data-rich world. Effectively harnessing the power of AI requires a robust, scalable, and secure pipeline capable of handling vast amounts of diverse data. This involves careful consideration of data ingestion, transformation, feature engineering, model training, deployment, monitoring, and security—all within a framework designed for optimal performance and reliability.

Understanding these best practices is key to unlocking the full potential of AI within your organization.

From choosing the right ingestion tools to implementing effective monitoring systems, each stage of the pipeline presents unique challenges and opportunities. This guide explores the key considerations at each stage, providing practical advice and real-world examples to help you build high-performing AI-driven big data pipelines that deliver actionable insights and drive business value. We’ll delve into strategies for handling various data types, optimizing performance, ensuring data quality, and mitigating security risks, equipping you with the knowledge to build a truly effective solution.

Data Ingestion Best Practices

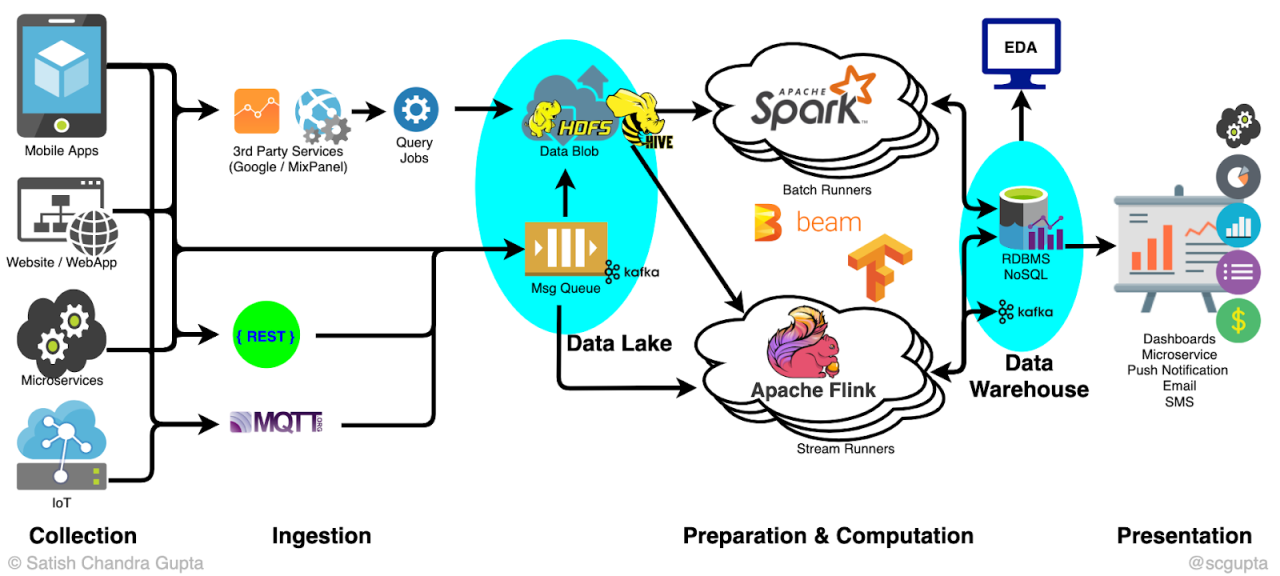

Building robust and efficient AI-driven big data pipelines hinges critically on effective data ingestion. A well-designed ingestion framework ensures the timely and accurate delivery of diverse data sources into your analytical environment, ultimately impacting the quality and reliability of your AI models. This section explores key best practices for data ingestion, focusing on framework design, data quality assurance, and technology selection.

Designing a Robust Data Ingestion Framework

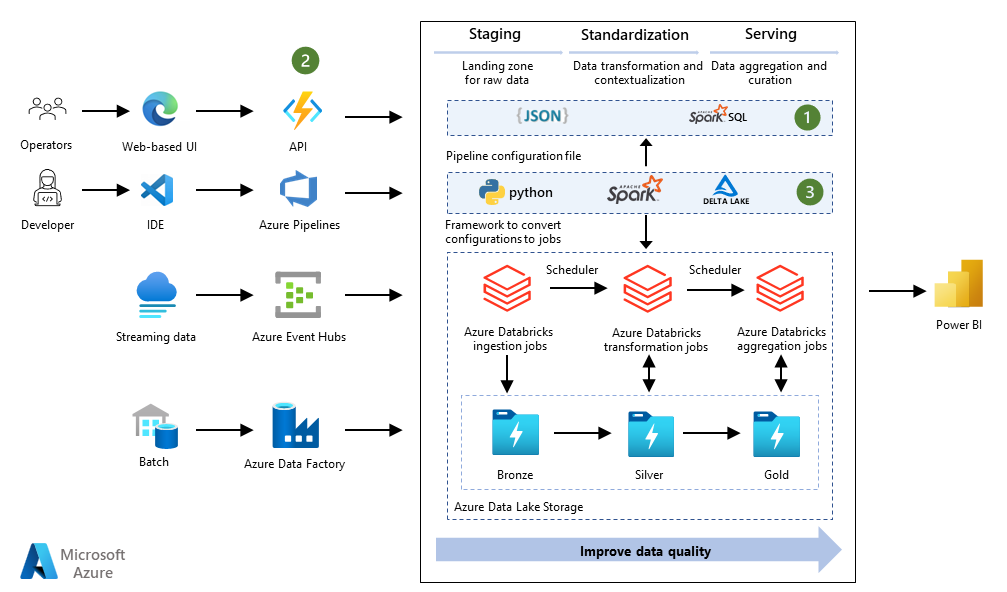

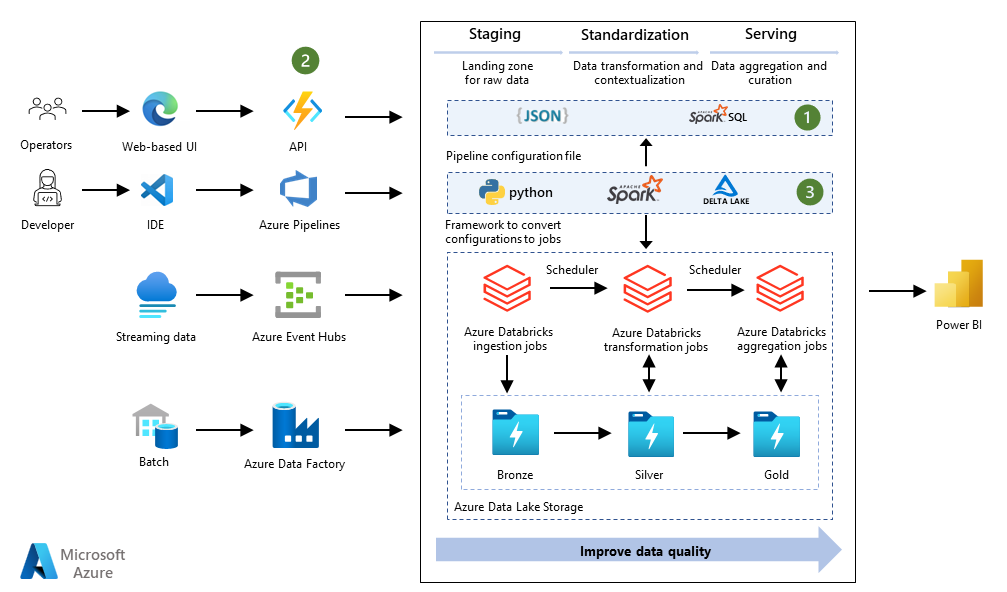

A robust data ingestion framework must accommodate the inherent variability of big data sources. This includes structured data from relational databases, semi-structured data from JSON or XML files, and unstructured data like text documents or images. The framework should be modular, allowing for the easy addition of new data sources and transformations as needed. Consider using a layered architecture, separating ingestion tasks (data extraction, transformation, and loading – ETL) from processing and storage.

This modularity simplifies maintenance, enhances scalability, and allows for independent optimization of individual components. Error handling and logging mechanisms are crucial for monitoring the health of the pipeline and identifying potential issues early. Implementing mechanisms for data provenance—tracking the origin and transformations of each data element—is essential for data governance and auditing.

Ensuring Data Quality and Consistency During Ingestion

Data quality is paramount for the success of any AI project. During ingestion, several strategies can ensure data consistency and accuracy. Data validation should be performed at each stage of the ingestion process, checking for data type consistency, completeness, and adherence to defined business rules. Data cleansing techniques, such as handling missing values, removing duplicates, and correcting inconsistencies, are vital.

Data transformation might involve converting data formats, standardizing units, or enriching data with external sources. Implementing data profiling tools can help identify potential data quality issues early in the process. Regular data quality checks and monitoring, combined with automated alerts for anomalies, contribute to proactive issue management.
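The validation checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a production validator: the `SCHEMA` fields, the `RULES` list, and the `validate` function are hypothetical names chosen for the example.

```python
# Hypothetical schema: field name -> (expected type, required?)
SCHEMA = {
    "customer_id": (int, True),
    "amount": (float, True),
    "country": (str, False),
}

# Hypothetical business rules: (description, predicate over the record)
RULES = [
    ("amount must be non-negative", lambda r: r["amount"] >= 0),
]


def validate(record):
    """Return a list of problems found in one record (empty means valid)."""
    problems = []
    for name, (expected_type, required) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            if required:
                problems.append(f"missing required field: {name}")
        elif not isinstance(value, expected_type):
            problems.append(f"{name}: expected {expected_type.__name__}")
    # Business rules run only after the structural checks pass, so the
    # predicates can assume required fields exist with the right types.
    if not problems:
        problems += [desc for desc, check in RULES if not check(record)]
    return problems
```

Records that return a non-empty list could be routed to a quarantine location rather than dropped, so that they remain available for later inspection.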

Data Ingestion Tools and Technologies

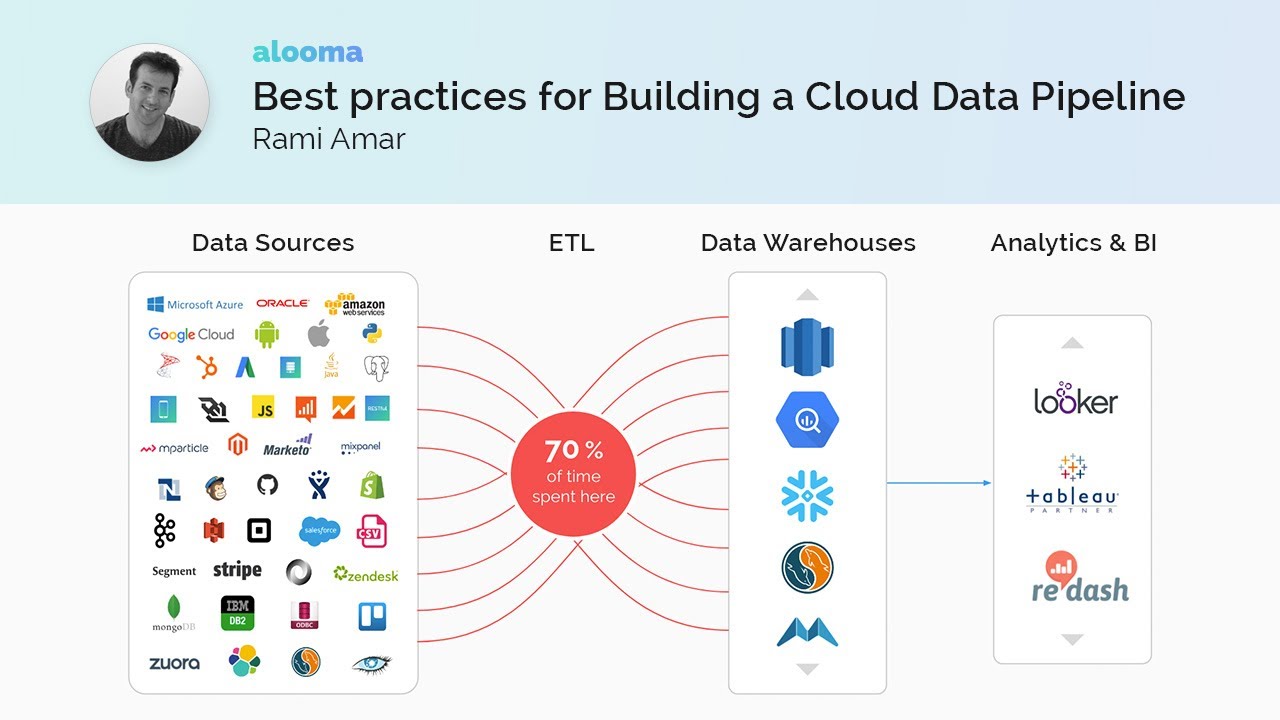

Several tools and technologies facilitate efficient data ingestion. Apache Kafka excels as a high-throughput, distributed streaming platform, ideal for real-time data ingestion. Its fault tolerance and scalability make it suitable for handling massive data volumes. However, its complexity can pose challenges for less experienced users.

Apache Spark, a powerful distributed computing engine, offers versatility for batch and streaming data processing. Its rich ecosystem of libraries supports various data formats and transformations. While highly scalable, it requires significant computational resources.

Cloud-based data warehousing services, such as Snowflake or Google BigQuery, provide managed solutions for data ingestion and storage. They offer ease of use and scalability, but can be more expensive than self-managed solutions. The choice depends on factors like data volume, velocity, variety, and budget constraints.

Performance Characteristics of Data Ingestion Methods

The following table compares the performance characteristics of three common data ingestion methods: batch processing with Apache Spark, real-time streaming with Apache Kafka, and cloud-based data warehousing with Snowflake. Note that these values are illustrative and can vary significantly depending on the specific implementation, hardware, and data characteristics.

| Ingestion Method | Throughput (records/second) | Latency | Scalability |
|---|---|---|---|
| Apache Spark (Batch) | 10k – 100k | Minutes to hours | High |
| Apache Kafka (Streaming) | 100k – 1M+ | Milliseconds to seconds | Very High |
| Snowflake (Cloud Warehouse) | 1k – 100k | Seconds to minutes | High |

Data Transformation and Preprocessing

Data transformation and preprocessing are critical steps in building robust AI-driven big data pipelines. These processes prepare raw data for consumption by machine learning models, significantly impacting model accuracy and performance. Effective data transformation involves cleaning, transforming, and preparing the data to address inconsistencies, handle missing values, and extract relevant features. This stage is crucial for ensuring the quality and reliability of the insights derived from the AI model.

The transformation process involves a series of steps designed to improve data quality and suitability for AI model training. This includes handling missing values, dealing with outliers and noisy data, and performing feature engineering to create relevant input variables for the model. The choice of techniques depends heavily on the nature of the data and the specific requirements of the AI model being used.

For example, a deep learning model might be more tolerant of noisy data than a simpler linear regression model.

Data Cleaning

Data cleaning focuses on identifying and correcting or removing inaccurate, incomplete, irrelevant, duplicated, or improperly formatted data. This may involve handling missing values through imputation (replacing missing values with estimated values) or removal of incomplete records. Outliers, data points significantly different from the rest, are often addressed through techniques like winsorization (capping values at a certain percentile) or trimming (removing extreme values).

Noisy data, containing random errors or inconsistencies, can be smoothed using techniques like moving averages or median filtering. For instance, in a dataset of customer purchase history, inconsistencies in date formats or missing purchase amounts would need to be addressed during this stage.
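As a rough sketch of two of the techniques named above, the following Python shows winsorization and median filtering using only the standard library. The nearest-rank percentile definition and the default percentiles are assumptions made for the example; other percentile conventions give slightly different caps.

```python
import math
import statistics


def percentile(ordered, pct):
    """Nearest-rank percentile of an already sorted list."""
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def winsorize(values, lower_pct=5, upper_pct=95):
    """Cap values that fall outside the given percentiles."""
    ordered = sorted(values)
    low = percentile(ordered, lower_pct)
    high = percentile(ordered, upper_pct)
    return [min(max(v, low), high) for v in values]


def median_filter(values, window=3):
    """Replace each point with the median of a centred window.

    Points near the edges use a shorter window.
    """
    half = window // 2
    return [
        statistics.median(values[max(0, i - half): i + half + 1])
        for i in range(len(values))
    ]
```

Winsorization keeps every record and only limits extreme values, whereas trimming would remove those records entirely.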

Data Transformation Techniques

Several transformation techniques are used to improve data quality and model performance. Normalization scales data to a specific range, such as between 0 and 1, preventing features with larger values from dominating the model. Standardization transforms data to have a mean of 0 and a standard deviation of 1, useful when dealing with data with different scales and distributions.

Discretization converts continuous variables into categorical variables, which can be beneficial for certain models. For example, converting age into age brackets (e.g., 18-25, 26-35, etc.) is a form of discretization. Another common technique is encoding categorical variables into numerical representations using techniques like one-hot encoding or label encoding.
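The four transformations above can be illustrated with a short standard-library sketch. The bracket boundaries and labels beyond the 18-25 and 26-35 examples in the text are assumptions, and the function names are hypothetical.

```python
import bisect
import statistics


def min_max_scale(values):
    """Normalization: rescale values to the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Standardization: shift to mean 0 and rescale to standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Assumed bracket edges: each entry is the upper bound of one bracket.
BRACKET_UPPER_BOUNDS = [17, 25, 35]
BRACKET_LABELS = ["under 18", "18-25", "26-35", "36+"]


def age_bracket(age):
    """Discretization: map a continuous age onto a labelled bracket."""
    return BRACKET_LABELS[bisect.bisect_left(BRACKET_UPPER_BOUNDS, age)]


def one_hot(values):
    """One-hot encoding: one 0/1 column per category, in sorted order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]
```

In a real pipeline the scaling parameters (minimum, maximum, mean, standard deviation) and the category list should be computed on the training data only and then reused unchanged at prediction time.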

Handling Missing Data

Missing data is a common challenge in big data. Strategies for handling missing data include imputation methods like mean imputation (replacing missing values with the mean of the column), median imputation (using the median), or more sophisticated methods like k-Nearest Neighbors imputation (predicting missing values based on similar data points). Alternatively, missing data can be removed entirely, but this should be done cautiously as it may lead to a significant loss of information.

The choice of method depends on the amount of missing data, the nature of the data, and the impact on model performance. For example, in a medical dataset, missing values for critical parameters may require careful consideration and possibly specialized imputation techniques.
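A minimal sketch of mean and median imputation is shown below, assuming missing values are represented as `None`. The `impute` function is a hypothetical helper, and k-Nearest Neighbors imputation is not covered here.

```python
import statistics


def impute(values, strategy="mean"):
    """Fill None entries with the mean or median of the observed values."""
    if strategy not in ("mean", "median"):
        raise ValueError(f"unknown strategy: {strategy}")
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.fmean(observed)
    else:
        fill = statistics.median(observed)
    return [fill if v is None else v for v in values]
```

Median imputation is usually the safer default for skewed columns, because a few extreme values can pull the mean far from a typical value.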

Outlier Detection and Treatment

Outliers can significantly skew the results of AI models. Detection methods include box plots, scatter plots, and statistical methods like Z-score calculation. Treatment strategies include removal, transformation (e.g., logarithmic transformation), or winsorization. For example, in a dataset of house prices, a house priced significantly higher than others in the same area might be considered an outlier and warrant further investigation or removal.

The choice of method depends on the nature of the outliers and the impact on the model’s predictive power.
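Z-score detection can be sketched as follows. The `zscore_outliers` helper and its default threshold are illustrative assumptions.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Return the values whose absolute Z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical, so nothing stands out
    return [v for v in values if abs(v - mean) / std > threshold]
```

One caveat: in very small samples a single extreme value inflates the standard deviation so much that a threshold of 3 can never be reached. For a handful of points, a lower threshold or a median-based method is more useful.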

Feature Engineering

Feature engineering is the process of selecting, extracting, and transforming relevant features from raw data to improve model performance. This might involve creating new features from existing ones (e.g., calculating ratios or interactions between variables), selecting a subset of the most relevant features using techniques like feature selection algorithms, or transforming features to improve their suitability for the model (e.g., applying polynomial transformations).

For example, in a dataset of customer data, features like purchase frequency and total spending could be combined to create a new feature representing customer value. Effective feature engineering is crucial for building accurate and efficient AI models.
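The customer-value example might look like the sketch below. The input field names (`total_spend`, `order_count`, `months_active`) and the derived feature names are hypothetical, chosen only to illustrate ratio and interaction features.

```python
def add_customer_features(customer):
    """Return a copy of the record with derived ratio and interaction features."""
    enriched = dict(customer)
    orders = customer["order_count"]
    months = customer["months_active"]
    # Ratio features, guarding against division by zero for new customers.
    enriched["avg_order_value"] = customer["total_spend"] / orders if orders else 0.0
    enriched["orders_per_month"] = orders / months if months else 0.0
    # Interaction feature: combines spending with purchase frequency.
    enriched["spend_x_frequency"] = (
        customer["total_spend"] * enriched["orders_per_month"]
    )
    return enriched
```

Returning a copy rather than mutating the input keeps the raw record available for provenance tracking.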

Feature Engineering and Selection

Feature engineering and selection are critical steps in building effective AI-driven big data pipelines. These processes significantly impact the performance and accuracy of machine learning models by transforming raw data into a format suitable for model training and optimizing the feature set for improved predictive power. Careful consideration of these steps is crucial for maximizing the value derived from big data analytics.

Effective feature engineering involves creating new features from existing ones or transforming existing features to improve model performance. This can include techniques like creating interaction terms, polynomial features, or applying domain-specific transformations. Feature selection, on the other hand, involves identifying the most relevant subset of features to use in the model, reducing dimensionality and improving model efficiency and interpretability. The interplay between these two processes is key to building robust and accurate AI models.

Feature Scaling and Normalization

Feature scaling and normalization are essential preprocessing steps that address the issue of differing scales and ranges among features in a dataset. Features with larger values can disproportionately influence the model’s learning process, leading to biased results. The two terms are often used loosely and interchangeably, so it helps to name the specific technique. Min-max scaling rescales each feature to a fixed range, typically 0 to 1. Standardization, also called z-score normalization, shifts and rescales each feature to a mean of 0 and a standard deviation of 1; it does not change the shape of the distribution.

Unit vector normalization instead rescales each sample so that its feature vector has length 1. For example, in a dataset predicting house prices, features like square footage and number of bedrooms have vastly different scales. Scaling these features to a comparable range prevents the model from being overly influenced by the larger-valued feature (square footage). The choice of method depends on the specific algorithm and data characteristics; for instance, distance-based algorithms like k-nearest neighbors and support vector machines often benefit from standardization.

Feature Selection Methods

Several feature selection methods exist, each with strengths and weaknesses. These methods can be broadly categorized into filter, wrapper, and embedded methods. Filter methods use statistical measures (e.g., correlation, chi-squared test) to rank features independently of the chosen model. Wrapper methods evaluate subsets of features based on model performance, often using techniques like recursive feature elimination. Embedded methods integrate feature selection into the model training process itself, as seen in L1 regularization (LASSO), which can shrink the coefficients of uninformative features to exactly zero. L2 regularization (Ridge regression) also shrinks coefficients but does not set them to zero, so on its own it does not select features.

For example, using a filter method like correlation analysis might reveal that certain features are highly correlated with the target variable, indicating their importance. A wrapper method like recursive feature elimination might iteratively remove features with the least impact on model performance. The choice of method depends on factors like dataset size, computational resources, and model complexity.

Using an inappropriate method can lead to either an overly complex model (too many features) or an underperforming model (too few features).
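A filter method based on correlation can be sketched without any external libraries. The `pearson` and `rank_features` helpers below are hypothetical. Note that Pearson correlation only captures linear relationships, so a feature with a strong non-linear effect may still score low.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)


def rank_features(features, target):
    """Rank feature columns by absolute correlation with the target."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

A typical use is to keep the top-ranked features, or those above a chosen score, and then confirm the choice by checking model performance on held-out data.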

Feature Engineering Techniques for Different AI Models

The effectiveness of different feature engineering techniques varies across different AI model types. For example, linear regression models generally benefit from simpler features and may be negatively impacted by highly complex or non-linear features. In contrast, deep learning models, with their ability to learn complex non-linear relationships, can often benefit from more intricate feature engineering, including interactions and polynomial features.

Consider a scenario predicting customer churn: for a linear regression model, straightforward features like customer age and average purchase value might suffice. However, a deep learning model might benefit from engineered features like purchase frequency, recency of purchase, and interactions between these variables to capture more complex patterns indicative of churn. The choice of feature engineering techniques should always be informed by the capabilities and limitations of the chosen model.

Feature Engineering and Selection Process Flowchart

The feature engineering and selection process can be summarized as the following flow, where each arrow marks the hand-off from one step to the next:

Raw Data → Data Cleaning & Preprocessing → Feature Engineering (feature creation, transformation, and scaling/normalization) → Feature Selection (filter, wrapper, and embedded methods) → Selected Features for Model Training

Model Training and Deployment

Efficient model training and deployment are critical for realizing the full potential of AI-driven big data pipelines. This involves not only selecting the right algorithms and infrastructure but also establishing robust processes for versioning, monitoring, and continuous improvement. A well-defined deployment strategy ensures models transition smoothly from development to production, delivering consistent and reliable predictions.

Effective model training within a big data pipeline necessitates careful consideration of computational resources, data scaling strategies, and algorithm selection. The chosen algorithm should align with the specific problem and data characteristics. Techniques like distributed training across multiple machines are often essential for handling large datasets. Furthermore, rigorous hyperparameter tuning and validation are crucial to optimize model performance and prevent overfitting. Deployment strategies should consider factors like latency requirements, scalability needs, and the overall pipeline architecture.

Model Versioning and Management

Model versioning is a crucial aspect of managing the evolution of AI models within a big data pipeline. It allows for tracking changes, comparing performance across different versions, and reverting to previous iterations if necessary. A robust versioning system should record metadata, including training data parameters, algorithm configurations, and performance metrics. This detailed record provides valuable insights for debugging, reproducibility, and ongoing model improvement.

Strategies like using Git for code and dedicated model registries for storing trained models are common practices. For example, a team might maintain versions v1.0, v1.1, and v2.0 of a fraud detection model, each with its corresponding training data and performance benchmarks. This ensures traceability and facilitates easy rollback if a newer version proves less effective.
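To make the versioning and rollback idea concrete, here is a minimal in-memory sketch. It is not the API of any real model registry product; `ModelVersion`, `ModelRegistry`, and their methods are hypothetical names, and a real registry would also persist the model artifacts themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: str        # e.g. "v1.0"
    training_data: str  # identifier of the dataset snapshot used
    params: dict        # algorithm configuration
    metrics: dict       # performance benchmarks


class ModelRegistry:
    def __init__(self):
        self._versions = {}
        self._history = []  # promoted versions, newest last

    def register(self, model_version):
        if model_version.version in self._versions:
            raise ValueError(f"{model_version.version} is already registered")
        self._versions[model_version.version] = model_version

    def promote(self, version):
        """Mark a registered version as the one serving production."""
        if version not in self._versions:
            raise KeyError(version)
        self._history.append(version)

    def rollback(self):
        """Return to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.current()

    def current(self):
        return self._versions[self._history[-1]] if self._history else None
```

Keeping the promotion history separate from the set of registered versions is what makes rollback a single, auditable step.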

Model Performance Monitoring and Evaluation

Continuous monitoring and evaluation of model performance in a production setting are paramount. This involves tracking key metrics such as accuracy, precision, recall, and F1-score, alongside latency and resource utilization. Real-time monitoring systems provide immediate alerts if performance degrades below acceptable thresholds. This early detection allows for prompt intervention, preventing significant disruptions. Regular performance evaluations, comparing model outputs against ground truth data, are essential for identifying potential issues like concept drift—where the relationship between input features and the target variable changes over time—or data quality problems.

For instance, a model predicting customer churn might experience a drop in accuracy if customer behavior changes unexpectedly. Continuous monitoring would flag this decline, prompting an investigation and potential model retraining.
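The metrics named above can be computed directly from labelled predictions. The sketch below assumes binary labels encoded as 0 and 1. The `classification_metrics` and `degraded` helpers and the threshold values used with them are illustrative assumptions.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def degraded(metrics, thresholds):
    """Names of the metrics that fell below their minimum acceptable value."""
    return [name for name, minimum in thresholds.items() if metrics[name] < minimum]
```

Running `degraded` on each evaluation window and alerting when the result is non-empty gives a simple trigger for investigation or retraining.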

Deploying an AI Model to a Cloud-Based Environment

Deploying an AI model to a cloud-based environment involves several steps. First, the trained model needs to be packaged appropriately, often as a container image built with a tool like Docker. This ensures consistent execution across different environments. Next, the model is deployed to a cloud platform such as AWS SageMaker, Google Cloud Vertex AI (the successor to AI Platform), or Azure Machine Learning. These platforms offer managed services for model hosting, scaling, and monitoring.

The deployment process usually involves configuring the necessary infrastructure, including compute resources and network settings. Once deployed, the model can be accessed via APIs, enabling integration with other systems and applications. For instance, a deployed image recognition model might be integrated into a mobile application, providing real-time object identification capabilities. Robust error handling and logging mechanisms are crucial during this stage to ensure smooth operation and facilitate troubleshooting.

Pipeline Monitoring and Maintenance

Building a robust AI-driven big data pipeline requires more than just efficient data ingestion, transformation, and model deployment. Continuous monitoring and proactive maintenance are crucial for ensuring the pipeline’s reliability, accuracy, and overall performance. A well-designed monitoring system provides early warnings of potential problems, allowing for timely intervention and preventing costly downtime or inaccurate insights.

A comprehensive monitoring strategy encompasses several key areas, from tracking key performance indicators (KPIs) to implementing mechanisms for real-time data quality checks and proactive pipeline updates. This section delves into these essential aspects, providing practical strategies for maintaining a high-performing and reliable AI big data pipeline.

KPI Tracking and Alerting

Effective pipeline monitoring begins with defining and tracking relevant KPIs. These metrics provide a quantitative assessment of the pipeline’s health and performance. Examples include data ingestion rate, data transformation speed, model training time, prediction latency, and model accuracy. A centralized dashboard, for example one built in Grafana on top of metrics collected by Prometheus, should visualize these KPIs in real time, enabling quick identification of anomalies.

Automated alerts should be configured to notify relevant personnel when KPIs deviate significantly from established baselines, triggering immediate investigation and remediation. For instance, a sudden drop in data ingestion rate could indicate a problem with the data source or ingestion infrastructure, while a decrease in model accuracy might signal the need for retraining or model recalibration.
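One simple way to define "deviates significantly from established baselines" is a standard-deviation band around recent history. The sketch below assumes that definition. The `kpi_alert` helper and its default of three standard deviations are illustrative choices, not a recommendation for every KPI.

```python
import statistics


def kpi_alert(history, latest, max_sigma=3.0):
    """True when `latest` lies more than `max_sigma` standard deviations
    away from the mean of the baseline `history`."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    baseline = statistics.fmean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return latest != baseline
    return abs(latest - baseline) / spread > max_sigma
```

For KPIs with daily or weekly cycles, the baseline would normally be taken from comparable periods (for example the same hour on previous days) rather than from the most recent readings.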

Real-time Data Quality Monitoring

Maintaining data quality is paramount for the reliability of AI-driven insights. Real-time data quality monitoring involves continuously validating the data at various stages of the pipeline. This includes checks for data completeness, consistency, accuracy, and validity. Techniques like schema validation, data profiling, and anomaly detection algorithms can be employed. For example, a real-time data quality monitoring system might detect an unexpected spike in null values in a specific field, indicating a potential data source issue.

Similarly, it could flag inconsistencies between different data sources, prompting a deeper investigation into data integration processes. Immediate alerts and automated remediation strategies, such as data cleansing or fallback mechanisms, are essential for minimizing the impact of data quality issues.
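The null-value spike example can be sketched as a sliding-window check. `NullRateMonitor`, its window size, and its threshold are hypothetical, and a missing key is treated the same as an explicit `None`.

```python
from collections import deque


class NullRateMonitor:
    """Tracks the share of null values for one field over a sliding window."""

    def __init__(self, field_name, window=100, max_null_rate=0.05):
        self.field_name = field_name
        self.max_null_rate = max_null_rate
        self._recent = deque(maxlen=window)  # True where the value was null

    def observe(self, record):
        """Record one observation and report whether the null rate in the
        current window exceeds the configured limit."""
        self._recent.append(record.get(self.field_name) is None)
        return sum(self._recent) / len(self._recent) > self.max_null_rate
```

Because the window is bounded, memory use stays constant regardless of stream length, and old observations age out automatically once the source recovers.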

Pipeline Maintenance and Updates

Maintaining and updating the big data pipeline is an ongoing process requiring a structured approach. Regular pipeline reviews should be scheduled to assess performance, identify areas for improvement, and address emerging challenges. Version control systems (e.g., Git) are essential for tracking changes to the pipeline code and configuration. Automated testing and continuous integration/continuous deployment (CI/CD) pipelines are crucial for ensuring that updates are deployed reliably and without disrupting the pipeline’s operation.

Furthermore, a robust documentation strategy, including clear descriptions of pipeline components, data schemas, and operational procedures, facilitates easier maintenance and troubleshooting. Regular retraining of models with updated data is also a key aspect of pipeline maintenance, ensuring the model remains accurate and relevant over time.

Failure Points and Mitigation Strategies

Predicting and mitigating potential failure points is critical for ensuring pipeline resilience. The following table outlines some common failure points and corresponding mitigation strategies:

| Failure Point | Mitigation Strategy |
|---|---|
| Data Source Outage | Implement redundant data sources and failover mechanisms. |
| Data Transformation Errors | Robust error handling and data validation at each transformation step. |
| Infrastructure Failure (e.g., cloud outages) | Utilize highly available cloud infrastructure and disaster recovery plans. |
| Model Performance Degradation | Regular model retraining and monitoring of model KPIs. |
| Security Breaches | Implement robust security measures, including access control, encryption, and intrusion detection systems. |
| Data Loss or Corruption | Regular backups and data versioning. |

Security and Privacy Considerations

Building secure and private AI-driven big data pipelines is paramount. Data breaches can lead to significant financial losses, reputational damage, and legal repercussions. Robust security measures should be built in from the outset, not added as an afterthought. This section details best practices for safeguarding sensitive information throughout the entire pipeline lifecycle.

Data encryption and access control mechanisms are fundamental components of a secure big data pipeline. These mechanisms work in tandem to limit unauthorized access and protect data confidentiality, even in the event of a breach.

Data Encryption Methods

Effective data encryption involves employing strong encryption algorithms across various stages of the pipeline. Data at rest should be encrypted using robust methods like AES-256. Data in transit should be secured using TLS (the successor to the now-deprecated SSL protocol). Furthermore, consider techniques such as homomorphic encryption for processing sensitive data without decryption, preserving privacy even during computation, though at a substantial computational cost. The choice of encryption method depends on the sensitivity of the data and the specific requirements of the application.

For example, a healthcare organization handling patient data would require more stringent encryption compared to a retail company analyzing customer purchase patterns.

Access Control Implementation

Implementing granular access control is vital for limiting access to sensitive data based on the principle of least privilege. This involves assigning roles and permissions to users and systems, allowing only authorized entities to access specific data and perform designated actions. Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC) are common approaches. RBAC uses predefined roles to manage permissions, while ABAC uses attributes to define access policies, offering more fine-grained control.

For instance, a data scientist might have read and write access to training data but only read access to production data. A system administrator might have broader privileges, but this should be strictly audited and monitored.
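The data scientist example maps naturally onto a small RBAC table. The role names, resource names, and `is_allowed` helper below are hypothetical; real deployments would normally rely on the access control features of the platform or database in use.

```python
# Hypothetical role -> set of (resource, action) permissions.
ROLE_PERMISSIONS = {
    "data_scientist": {
        ("training_data", "read"),
        ("training_data", "write"),
        ("production_data", "read"),
    },
    "analyst": {
        ("production_data", "read"),
    },
}


def is_allowed(role, resource, action):
    """Least privilege: anything not explicitly granted is denied."""
    return (resource, action) in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles fall through to an empty permission set, so the default outcome is denial rather than access.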

Compliance Regulations

Adherence to relevant data privacy regulations is non-negotiable. Organizations must comply with regulations like the General Data Protection Regulation (GDPR) in Europe, the California Consumer Privacy Act (CCPA) in California, and other regional or national laws. These regulations dictate how personal data should be handled, stored, and protected. GDPR, for example, requires organizations to have a lawful basis for processing personal data (consent being one such basis), provide data subjects with access to their data, and implement appropriate security measures to prevent data breaches.

Non-compliance can result in hefty fines and legal action.

Minimizing Data Breach Risk and Ensuring Data Integrity

Proactive measures are essential to mitigate the risk of data breaches. Regular security audits and penetration testing help identify vulnerabilities. Implementing robust intrusion detection and prevention systems provides an additional layer of protection. Data loss prevention (DLP) tools can monitor data movement and prevent sensitive information from leaving the organization’s control. Maintaining data integrity throughout the pipeline is achieved through checksums, hashing algorithms, and version control.

These mechanisms ensure data accuracy and prevent unauthorized modifications. For example, using blockchain technology can create an immutable record of data transformations, providing a high level of data integrity assurance. A multi-layered security approach, encompassing these measures, is crucial for effective data protection.
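A checksum-based integrity check can be sketched with Python's standard `hashlib` module. The `sha256_of` and `verify` helper names are hypothetical. The idea is to record a digest when data is written and compare it when the data is read back.

```python
import hashlib
import hmac


def sha256_of(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_digest: str) -> bool:
    """True when the data still matches the digest recorded earlier."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sha256_of(data), expected_digest)
```

A plain hash detects accidental corruption. To detect deliberate tampering, the recorded digests must themselves be stored where an attacker cannot rewrite them, or be replaced by keyed signatures.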

Scalability and Performance Optimization

Building a robust AI-driven big data pipeline requires careful consideration of scalability and performance. A pipeline that functions flawlessly with a small dataset may struggle significantly as data volume increases and user demand grows. Proactive optimization strategies are crucial to ensure consistent performance and prevent bottlenecks. This section details key strategies for achieving optimal scalability and performance.

Optimizing the performance and scalability of a big data pipeline involves a multifaceted approach. This encompasses efficient data handling, leveraging appropriate distributed computing frameworks, and designing a pipeline architecture that can gracefully adapt to increasing data volumes and user requests. Key considerations include data partitioning, parallel processing, and resource allocation strategies. Ignoring these aspects can lead to significant performance degradation and ultimately pipeline failure under heavy load.

Efficient Data Handling for Large Volumes

Handling massive datasets efficiently is paramount. Techniques like data partitioning, compression, and optimized data formats play a crucial role. Partitioning divides the data into smaller, manageable chunks, allowing for parallel processing. Compression reduces storage space and network transfer times, significantly improving performance. Choosing appropriate data formats, such as Parquet or ORC, which offer columnar storage and efficient compression, can dramatically reduce processing time compared to traditional row-oriented formats like CSV.

For instance, a pipeline processing terabytes of data in CSV format might take days to complete, whereas the same pipeline using Parquet could complete in hours.
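Partitioning and compression can be illustrated with the standard library alone. This sketch uses hash partitioning on a key and gzip-compressed JSON lines purely for demonstration; it does not implement a columnar format such as Parquet or ORC. The `partition` and `compress_partition` helpers are hypothetical.

```python
import gzip
import json
import zlib


def partition(records, key, num_partitions):
    """Assign each record to a partition by a stable hash of its key,
    so records sharing a key always land in the same partition."""
    parts = [[] for _ in range(num_partitions)]
    for record in records:
        digest = zlib.crc32(str(record[key]).encode("utf-8"))
        parts[digest % num_partitions].append(record)
    return parts


def compress_partition(records):
    """Serialize records as JSON lines and gzip the result."""
    payload = "\n".join(json.dumps(r, sort_keys=True) for r in records)
    return gzip.compress(payload.encode("utf-8"))
```

A stable hash such as CRC32 is used instead of Python's built-in `hash`, because the built-in string hash is randomized per process and would send the same key to different partitions across runs.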

Distributed Computing Frameworks: Spark and Hadoop

Several distributed computing frameworks are available for processing big data. Apache Spark and Apache Hadoop are two prominent examples, each with its strengths and weaknesses. Hadoop, employing the MapReduce paradigm, excels in batch processing of massive datasets. It is robust and fault-tolerant, making it suitable for long-running, offline processing tasks. However, it can be slower than Spark for iterative algorithms and interactive queries.

Spark, on the other hand, utilizes in-memory computation, providing significantly faster processing speeds for iterative algorithms and interactive analytics. Spark’s ability to handle both batch and streaming data makes it a versatile choice for many AI-driven pipelines. The optimal choice depends on the specific requirements of the pipeline, considering factors such as data volume, processing speed, and the nature of the analytical tasks.

For real-time analytics or iterative machine learning models, Spark’s speed advantage is crucial. For large-scale batch processing with high fault tolerance, Hadoop might be more suitable.

Pipeline Design for Scalability

Designing a scalable pipeline requires a modular and adaptable architecture. Microservices architecture allows for independent scaling of individual pipeline components. This means that only the components handling the most data or experiencing high demand need to be scaled, optimizing resource utilization. Employing message queues, such as Kafka, decouples pipeline stages, allowing for asynchronous processing and improved fault tolerance.

This ensures that even if one component fails, the entire pipeline doesn’t grind to a halt. Furthermore, leveraging cloud-based infrastructure, such as AWS EMR or Google Dataproc, allows for elastic scaling, automatically adjusting resources based on demand. This eliminates the need for manual intervention and ensures optimal resource utilization during peak loads. For example, a pipeline processing user interactions on a social media platform can dynamically scale up during peak hours and scale down during off-peak hours, optimizing cost and performance.

Conclusion

Building effective AI-driven big data pipelines requires a holistic approach that considers every stage of the process, from data ingestion to model deployment and ongoing maintenance. By carefully addressing data quality, security, scalability, and performance, organizations can unlock the transformative power of AI to gain valuable insights and drive strategic decision-making. Remember that continuous monitoring, adaptation, and optimization are vital for long-term success, ensuring your pipeline remains robust and efficient as your data needs evolve.

Implementing these best practices will not only improve the efficiency and accuracy of your AI models but also contribute to a more robust and sustainable data infrastructure.