AI algorithms for efficient big data processing and storage are revolutionizing how we manage the ever-growing deluge of digital information. The sheer volume, velocity, and variety of big data present unprecedented challenges for traditional processing and storage methods. This exploration delves into the innovative AI-powered solutions transforming this landscape, examining diverse algorithms, their strengths and weaknesses, and their real-world applications across various industries.

We will analyze how AI optimizes storage, predicts future needs, and ultimately enhances the efficiency and effectiveness of big data management.

From deep learning models predicting future storage requirements to machine learning algorithms optimizing data compression, AI is no longer a futuristic concept but a crucial component of modern big data infrastructure. This analysis will equip you with a comprehensive understanding of the current state-of-the-art, emerging trends, and the transformative potential of AI in reshaping the future of big data.

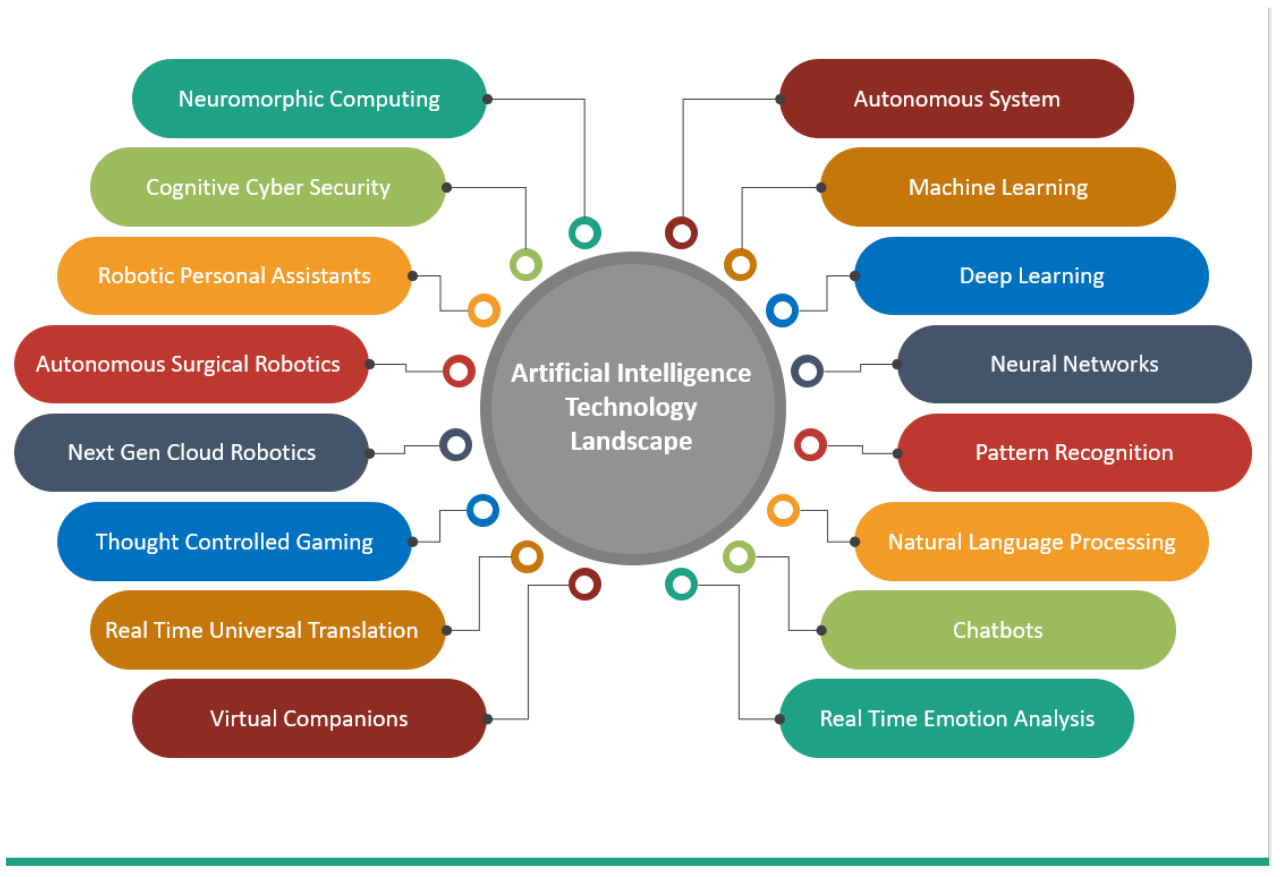

Introduction to AI Algorithms for Big Data

The exponential growth of data across various sectors presents significant challenges in processing and storage. Traditional data management techniques struggle to cope with the sheer volume, velocity, and variety of big data, leading to bottlenecks in analysis, increased costs, and delayed insights. The limitations extend to data heterogeneity, which requires complex transformations and integrations, and to real-time or near-real-time processing demands that exceed the capabilities of conventional systems.

AI offers a powerful toolkit to address these challenges. Its ability to learn from data, identify patterns, and make predictions allows for the development of more efficient and scalable solutions for big data management. AI algorithms can automate complex tasks, optimize resource allocation, and extract valuable insights from massive datasets far more effectively than traditional methods. This leads to faster processing times, reduced storage costs, and improved decision-making capabilities.

Real-World Applications of AI in Big Data Management

AI is already transforming big data management across numerous industries. For example, in the financial sector, AI-powered fraud detection systems analyze vast transaction datasets to identify suspicious patterns and prevent fraudulent activities in real-time. This involves machine learning algorithms that learn from historical fraud data to identify anomalies and predict future occurrences, significantly improving security and reducing financial losses.

Similarly, in healthcare, AI algorithms are used to analyze medical images, patient records, and genomic data to assist in diagnosis, personalize treatment plans, and accelerate drug discovery. The analysis of large-scale patient data allows for the identification of trends and patterns that would be impossible to detect manually, leading to more effective and personalized healthcare. In the retail sector, AI-driven recommendation engines analyze customer purchasing behavior to suggest relevant products, leading to increased sales and improved customer satisfaction.

These systems process enormous amounts of customer data, including browsing history, purchase records, and demographic information, to create personalized experiences. Finally, in logistics and supply chain management, AI algorithms optimize transportation routes, predict demand, and manage inventory levels, improving efficiency and reducing costs. Predictive models, trained on historical data and real-time information, can anticipate disruptions and optimize resource allocation, resulting in significant cost savings and improved service levels.

Specific AI Algorithms for Big Data Processing

Big data processing necessitates algorithms capable of handling massive datasets with diverse characteristics. Several AI techniques, each with its strengths and weaknesses, are employed for this purpose. The choice of algorithm depends heavily on the specific data characteristics and the desired outcome. This section compares and contrasts key AI algorithms, analyzing their performance across different dimensions.

Machine learning (ML) and deep learning (DL) represent the core AI approaches used for big data processing. While both leverage data to learn patterns and make predictions, they differ significantly in their architecture and capabilities. Other techniques, such as evolutionary algorithms and graph-based algorithms, also play a crucial role in specific big data tasks.

Machine Learning Algorithms for Big Data

Machine learning encompasses a broad range of algorithms suitable for various big data tasks. These algorithms are generally less computationally intensive than deep learning methods, making them viable for resource-constrained environments. However, their performance can be limited by the complexity of the data and the need for feature engineering. Common ML algorithms used in big data include linear regression, logistic regression, support vector machines (SVMs), decision trees, and random forests.

These algorithms excel in tasks like prediction, classification, and anomaly detection. For example, logistic regression can be used to predict customer churn based on their historical behavior, while random forests can be applied to identify fraudulent transactions.
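
The churn example can be sketched in a few lines. This is a minimal illustration, assuming two made-up features (support tickets last month and a scaled login frequency) and a tiny hand-written dataset; the function names, learning rate, and epoch count are hypothetical choices, and a production system would use a tested library rather than this hand-rolled gradient descent.

```python
import math

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train_logistic_regression(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log loss w.r.t. the linear score
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def predict_proba(x, weights, bias) -> float:
    """Estimated probability that the customer churns."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical features: [support tickets last month, logins per week / 10]
customers = [[5, 0.1], [4, 0.2], [6, 0.0], [0, 0.9], [1, 0.8], [0, 1.0]]
churned = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic_regression(customers, churned)
print(round(predict_proba([6, 0.0], weights, bias), 3))
print(round(predict_proba([0, 1.0], weights, bias), 3))
```

The model outputs a probability rather than a hard label, so the decision threshold can be tuned to the cost of missing a churning customer.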

Deep Learning Algorithms for Big Data

Deep learning algorithms, a subset of machine learning, utilize artificial neural networks with multiple layers to extract complex features from raw data. Their ability to learn intricate patterns makes them particularly effective for unstructured data like images, text, and audio. However, deep learning models require significant computational resources and large datasets for training, making them less suitable for smaller datasets or resource-limited environments.

Convolutional Neural Networks (CNNs) are widely used for image processing and object recognition, while Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks are effective for sequential data analysis, such as natural language processing. For instance, CNNs can be used for image analysis in medical imaging, while LSTMs can be used for sentiment analysis of customer reviews.

Comparison of AI Algorithms for Big Data Processing

The following table compares the computational complexity and resource requirements of various AI algorithms used for big data processing. Note that these are general estimates and can vary significantly based on specific implementation and data characteristics.

| Algorithm | Computational Complexity | Data Volume Scalability | Resource Requirements |
|---|---|---|---|
| Linear Regression | Relatively low | Good | Low to moderate |
| Support Vector Machines (SVMs) | Moderate to high (depending on kernel) | Good for linear kernels; limited for nonlinear kernels, whose training cost grows faster than linearly with the number of samples | Moderate to high |
| Random Forests | Moderate | Good | Moderate |
| Convolutional Neural Networks (CNNs) | High | Good with appropriate architecture | Very high |
| Recurrent Neural Networks (RNNs) | High | Moderate | Very high |

AI Algorithms for Big Data Storage

The exponential growth of data necessitates innovative approaches to storage management. Traditional methods struggle to handle the volume, velocity, and variety of big data, leading to increased costs and reduced efficiency. AI algorithms offer a powerful solution, enabling intelligent optimization of storage resources and minimizing operational overhead. By leveraging machine learning and deep learning techniques, organizations can significantly improve their big data storage infrastructure.

AI-Driven Compression Techniques

Advanced compression algorithms, informed by AI, dynamically adapt to the characteristics of the data being stored. Unlike traditional approaches that apply one general-purpose scheme with fixed settings regardless of content, AI-powered compression analyzes the data’s structure and patterns to identify redundancies and inefficiencies. This allows for higher compression ratios without significant loss of data fidelity. For instance, an AI algorithm might identify recurring sequences in genomic data and apply specialized compression techniques to these sequences, achieving significantly higher compression rates than general-purpose algorithms.

Another example is in image and video storage, where AI can identify and compress less important visual elements, optimizing storage without significantly impacting image or video quality. These adaptive techniques result in significant cost savings in storage and bandwidth.
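
The idea of adapting the codec to the data can be sketched with the Python standard library. The sketch below is not a learned model: it simply tries each codec on a sample and keeps the best one, which stands in for the step where a trained model would predict the best codec from data features. The `choose_codec` function and the sample data are hypothetical.

```python
import bz2
import lzma
import random
import zlib

# Candidate general-purpose codecs from the standard library.
CODECS = {"zlib": zlib.compress, "bz2": bz2.compress, "lzma": lzma.compress}

def choose_codec(sample: bytes):
    """Return (codec name, compression ratio) for the best codec on the sample.

    Returns ("none", 1.0) when no codec makes the sample smaller.
    """
    best_name, best_ratio = "none", 1.0
    if not sample:
        return best_name, best_ratio
    for name, compress in CODECS.items():
        ratio = len(sample) / len(compress(sample))
        if ratio > best_ratio:
            best_name, best_ratio = name, ratio
    return best_name, best_ratio

# Highly repetitive, genome-like bytes versus pseudo-random noise.
genomic_like = b"ACGTACGTTTGA" * 500
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(4096))

print(choose_codec(genomic_like))
print(choose_codec(noise))
```

Repetitive input compresses well under every codec, while the random bytes should fall back to `"none"`, which is the case where storing the data uncompressed is cheaper than paying the codec overhead.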

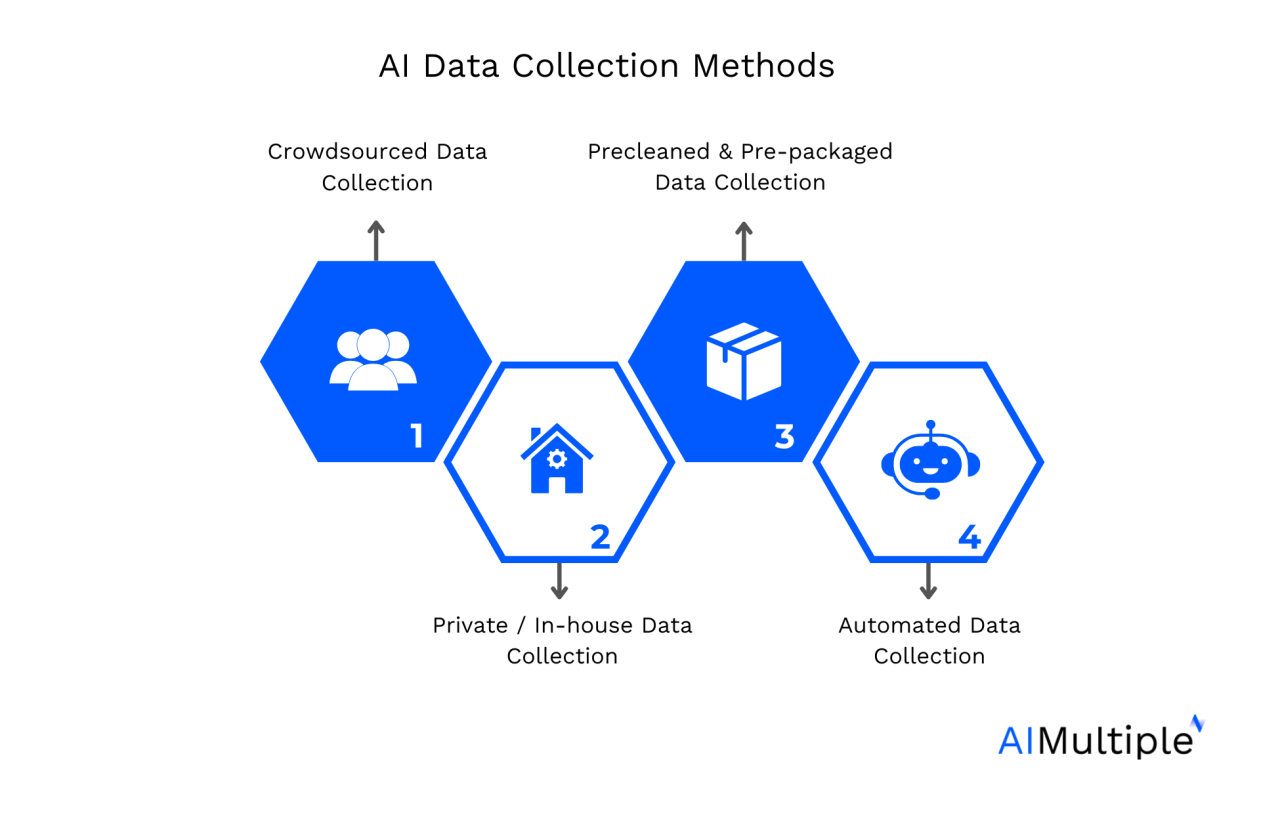

AI-Powered Data Deduplication

Data deduplication aims to eliminate redundant copies of data, freeing up valuable storage space. AI enhances this process by intelligently identifying and eliminating duplicates, even those that are not exact copies but share significant similarities. This is particularly beneficial for unstructured data like text and images, where subtle variations can mask identical underlying information. AI algorithms can combine similarity-preserving hashing (for example, MinHash or other locality-sensitive hashing schemes) with similarity analysis to identify near-duplicate data that exact-match deduplication would miss.

A practical example is in cloud storage services, where AI-powered deduplication can significantly reduce storage costs for users storing large volumes of similar files, such as backups or media assets.
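
Near-duplicate detection can be illustrated with word shingles and Jaccard similarity, a classical technique that often serves as the baseline before learned similarity models are introduced. This is a sketch under stated assumptions: the shingle size, the 0.5 threshold, and the sample documents are arbitrary illustrative choices.

```python
def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word windows) of a text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def is_near_duplicate(doc_a: str, doc_b: str, threshold: float = 0.5) -> bool:
    return jaccard(shingles(doc_a), shingles(doc_b)) >= threshold

log_a = "quarterly backup of the customer orders database completed without errors"
log_b = "quarterly backup of the customer orders database completed with warnings"
log_c = "sensor readings from the turbine hall"

print(jaccard(shingles(log_a), shingles(log_b)))  # 6 shared shingles out of 10 -> 0.6
print(is_near_duplicate(log_a, log_b))            # True
print(is_near_duplicate(log_a, log_c))            # False
```

An exact hash of `log_a` and `log_b` would differ, so exact-match deduplication treats them as unrelated; the similarity score exposes the overlap. Comparing every pair does not scale to large collections, which is where approximate schemes such as MinHash come in.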

AI in Distributed Storage Systems

AI plays a crucial role in managing distributed storage systems, which are critical for handling big data’s scale and complexity. AI algorithms can optimize data placement across multiple nodes, balancing load and minimizing latency. They can predict future storage needs based on historical data and user behavior, proactively scaling resources to avoid bottlenecks. For example, an AI system might detect a sudden increase in data uploads from a specific region and automatically allocate more storage capacity to that region, preventing performance degradation.

Furthermore, AI can improve fault tolerance by intelligently replicating data across different nodes, ensuring data availability even in the event of hardware failures. This intelligent resource allocation and proactive management minimizes operational costs and maximizes system reliability.
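
Load-aware placement with replication can be sketched as a simple greedy rule. This is a heuristic, not a learned policy: an AI-driven system would typically replace the current load value with a predicted one. The node names, the `place_replicas` function, and the load figures are hypothetical.

```python
def place_replicas(object_size: int, node_load: dict, replicas: int = 2) -> list:
    """Store an object on the `replicas` least-loaded distinct nodes.

    Updates `node_load` in place and returns the chosen node names.
    Ties are broken by node name so the result is deterministic.
    """
    if replicas > len(node_load):
        raise ValueError("not enough nodes for the requested replication factor")
    chosen = sorted(node_load, key=lambda n: (node_load[n], n))[:replicas]
    for node in chosen:
        node_load[node] += object_size
    return chosen

# Current load per node in arbitrary units (illustrative values).
load = {"node-a": 50, "node-b": 10, "node-c": 30}

print(place_replicas(20, load))  # ['node-b', 'node-c']
print(load)                      # {'node-a': 50, 'node-b': 30, 'node-c': 50}
print(place_replicas(5, load))   # ['node-b', 'node-a']
```

Because each replica goes to a different node, a single hardware failure leaves at least one copy available, and always choosing the least-loaded nodes keeps usage balanced over time.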

Hypothetical System Architecture for AI-Driven Big Data Storage

A hypothetical system architecture would integrate several AI components. A central AI engine would collect metadata and usage patterns from the storage system. This data would be fed into machine learning models responsible for predicting storage needs, optimizing compression algorithms, and managing data placement across nodes. Another component would handle data deduplication, employing deep learning techniques to identify near-duplicate data.

The system would also include a feedback loop, allowing the AI engine to continuously learn and refine its predictions and strategies based on real-time system performance data. This closed-loop system ensures continuous optimization and adaptation to evolving data patterns and user demands. The system would also incorporate automated alerts and reporting to inform administrators of potential issues or resource constraints.

Predicting Storage Needs and Optimizing Resource Allocation

AI algorithms can analyze historical data usage patterns, current growth trends, and predicted future data volumes to accurately forecast storage needs. This allows for proactive resource allocation, preventing storage shortages and optimizing cost efficiency. For instance, an e-commerce company can use AI to predict peak storage demands during shopping holidays, ensuring sufficient capacity to handle the increased traffic and data volume.

Similarly, a social media platform can use AI to forecast storage requirements based on user growth and content creation rates, proactively scaling its infrastructure to meet future needs. This predictive capability minimizes the need for reactive scaling, which is often more expensive and less efficient.
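
The simplest form of such a forecast is a linear trend fitted by least squares. The sketch below assumes evenly spaced historical measurements and made-up usage figures; real workloads with seasonality (such as shopping holidays) would need a richer model, and the function names are illustrative.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def forecast(values, periods_ahead: int) -> float:
    """Extrapolate the fitted trend `periods_ahead` steps past the last observation."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + periods_ahead)

# Hypothetical monthly storage usage in terabytes.
usage_tb = [100, 110, 120, 130]

print(fit_linear_trend(usage_tb))  # (10.0, 100.0)
print(forecast(usage_tb, 3))       # 160.0
```

Provisioning against the forecast plus a safety margin, rather than against current usage, is what turns reactive scaling into proactive scaling.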

Performance Evaluation and Optimization

Efficient AI algorithms for big data require rigorous performance evaluation and optimization strategies. Understanding key performance indicators (KPIs) and employing effective optimization techniques are crucial for ensuring the scalability, reliability, and cost-effectiveness of these systems. This section details key KPIs, their measurement, and strategies for improving algorithmic performance in big data environments.

Effective performance evaluation necessitates a multi-faceted approach, focusing on both processing speed and resource utilization. Analyzing these metrics allows for the identification of bottlenecks and the implementation of targeted optimization strategies.

Key Performance Indicators for AI in Big Data

Several key performance indicators (KPIs) are essential for evaluating the efficiency of AI-powered big data processing and storage systems. These metrics provide insights into various aspects of performance, allowing for targeted optimization efforts.

- Processing Time: The total time taken to complete a specific task, such as training a model or querying a database. This can be further broken down into individual stages of the process for detailed analysis.

- Throughput: The amount of data processed per unit of time. High throughput indicates efficient data handling capabilities.

- Resource Utilization (CPU, Memory, I/O): Monitoring CPU usage, memory consumption, and I/O operations helps identify resource bottlenecks that limit performance. This data can be visualized using performance monitoring tools.

- Accuracy and Precision: For machine learning models, accuracy and precision are crucial KPIs reflecting the model’s performance in making correct predictions. These metrics are often calculated using confusion matrices and other statistical measures.

- Scalability: The ability of the system to handle increasing amounts of data and processing demands without significant performance degradation. This is typically assessed by gradually increasing the data volume and monitoring performance metrics.

- Cost-Effectiveness: The overall cost of processing and storing the data, considering factors such as computational resources, storage space, and energy consumption. This is crucial for maintaining a financially sustainable big data infrastructure.
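
Processing time and throughput from the list above can be measured with a small wrapper. This is a minimal sketch using only the standard library; the `measure` helper is a hypothetical name, and the summing task stands in for a real processing job.

```python
import time

def measure(task, records):
    """Run `task(records)` and return (result, elapsed seconds, records per second)."""
    start = time.perf_counter()
    result = task(records)
    elapsed = time.perf_counter() - start
    throughput = len(records) / elapsed if elapsed > 0 else float("inf")
    return result, elapsed, throughput

records = list(range(100_000))
result, elapsed, throughput = measure(sum, records)

print(f"result={result}")
print(f"processing time: {elapsed:.6f} s")
print(f"throughput: {throughput:,.0f} records/s")
```

Repeating the measurement while increasing the number of records gives a first, rough view of scalability: throughput that stays flat suggests the task scales well, while falling throughput points to a bottleneck.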

Measuring and Analyzing KPIs

Measuring and analyzing KPIs involves employing appropriate tools and techniques to collect and interpret performance data. This data-driven approach guides optimization efforts.

- Performance Monitoring Tools: Tools like Prometheus, Grafana, and Datadog provide real-time monitoring and visualization of resource utilization and other KPIs. These tools can generate alerts when performance thresholds are exceeded.

- Profiling Tools: Profiling tools such as cProfile (Python) or gprof (C/C++) identify performance bottlenecks within the AI algorithms by pinpointing computationally expensive sections of the code.

- Statistical Analysis: Statistical methods are used to analyze the collected data, identifying trends and correlations between different KPIs. Regression analysis, for example, can be used to model the relationship between data volume and processing time.

- A/B Testing: Comparing different algorithm implementations or parameter settings through A/B testing allows for a quantitative evaluation of their relative performance. This approach provides empirical evidence for optimization decisions.
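
As an illustration of the profiling step, the following sketch runs a function under Python's built-in `cProfile` and captures the report as text. The `slow_sum_of_squares` function is a deliberately simple stand-in for an expensive stage of a pipeline, and `profile_call` is a hypothetical helper.

```python
import cProfile
import io
import pstats

def slow_sum_of_squares(n: int) -> int:
    # Deliberately written as an explicit loop so it shows up in the profile.
    total = 0
    for i in range(n):
        total += i * i
    return total

def profile_call(func, *args):
    """Run func(*args) under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)  # top 5 entries by cumulative time
    return result, buffer.getvalue()

result, report = profile_call(slow_sum_of_squares, 100_000)
print(report)
```

Sorting by cumulative time puts the functions that dominate the run at the top of the report, which is usually where optimization effort pays off first.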

Strategies for Performance Optimization

Several strategies can be employed to optimize the performance of AI algorithms in big data environments. These strategies address various aspects of performance, from algorithmic improvements to infrastructure enhancements.

- Algorithm Selection: Choosing appropriate algorithms based on the specific data characteristics and task requirements is crucial. For example, using linear regression instead of a complex neural network when appropriate can significantly improve processing speed without sacrificing accuracy.

- Data Preprocessing: Efficient data cleaning, transformation, and feature engineering can significantly reduce the computational burden on the AI algorithms. Techniques such as dimensionality reduction and feature selection can improve performance while preserving accuracy.

- Parallel and Distributed Processing: Leveraging parallel and distributed computing frameworks like Spark or Hadoop allows for processing large datasets in parallel, significantly reducing processing time. This distributes the workload across multiple machines.

- Hardware Acceleration: Utilizing specialized hardware such as GPUs or FPGAs can accelerate computationally intensive tasks, particularly in deep learning applications. GPUs, for example, are highly efficient at matrix multiplications, a core operation in many AI algorithms.

- Model Compression: Techniques such as pruning, quantization, and knowledge distillation can reduce the size and complexity of AI models, leading to faster processing and reduced memory requirements. This allows for deployment on resource-constrained devices.

- Caching and Indexing: Implementing caching mechanisms and efficient indexing strategies can significantly reduce data access time, improving the overall performance of the system. This is especially beneficial for frequently accessed data.
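
The caching strategy from the list above can be sketched with `functools.lru_cache`. The `read_from_storage` function is a hypothetical stand-in for a slow backend read, and the counter exists only to make the effect of the cache visible.

```python
from functools import lru_cache

# Counts how often the (simulated) slow backend is actually hit.
stats = {"backend_reads": 0}

def read_from_storage(key: str) -> str:
    """Hypothetical slow read from disk or a remote store."""
    stats["backend_reads"] += 1
    return f"value-for-{key}"

@lru_cache(maxsize=1024)
def cached_read(key: str) -> str:
    # Results for up to 1024 distinct keys are kept; the least recently
    # used entry is evicted when the cache is full.
    return read_from_storage(key)

for key in ["user:1", "user:2", "user:1", "user:1"]:
    cached_read(key)

print(stats["backend_reads"])    # 2: only the first read of each key reaches the backend
print(cached_read.cache_info())  # hits=2, misses=2
```

A cache like this only helps when the same keys are read repeatedly and the underlying data does not change between reads; otherwise entries must be invalidated or given a time limit.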

Future Trends and Research Directions

The field of AI for big data processing and storage is rapidly evolving, driven by the ever-increasing volume, velocity, and variety of data generated globally. New algorithms and architectures are constantly emerging, promising significant improvements in efficiency, scalability, and performance. Understanding these trends is crucial for organizations seeking to leverage the full potential of their data assets.

Emerging trends are reshaping the landscape of AI for big data management. These advancements promise to address current limitations and unlock new possibilities in data analysis and decision-making. The integration of these technologies will likely lead to more sophisticated and efficient big data systems.

Quantum Computing’s Impact on AI-Driven Big Data Management

Quantum computing holds the potential to revolutionize AI-driven big data management. Classical algorithms struggle with the computational complexity of analyzing massive datasets, especially when dealing with intricate relationships and patterns. Quantum algorithms leverage the principles of quantum mechanics to solve certain classes of problems faster than the best known classical algorithms. The size of the speedup depends on the problem: it is exponential only in specific cases such as integer factoring, while unstructured search gains a quadratic speedup. If comparable advantages can be realized for machine learning workloads, they could enable more powerful AI models capable of handling far larger datasets and uncovering previously undetectable insights.

For instance, quantum machine learning algorithms could significantly accelerate the training of complex models used in fraud detection or personalized medicine, where processing vast amounts of data is essential. Furthermore, quantum computing could optimize data storage and retrieval by enabling the development of highly efficient quantum databases. While still in its early stages, the potential impact of quantum computing on big data management is immense.

Anticipated Evolution of AI in Big Data Management (Visual Representation)

Imagine a graph charting the evolution of AI in big data management over the next 5-10 years. The X-axis represents time, and the Y-axis represents key performance indicators such as processing speed, storage efficiency, and analytical capabilities. The graph begins at a point representing current technologies, showing relatively slow processing speeds, limited storage capacity, and basic analytical capabilities. Over the next five years, the graph shows a steep upward trajectory.

This reflects the increasing adoption of advanced AI algorithms like graph neural networks and federated learning, leading to faster processing, improved storage efficiency through techniques like AI-driven compression, and more sophisticated analytical capabilities, enabling the extraction of deeper insights from data. In the latter half of the decade, the curve continues to rise, but at an even steeper angle, representing the potential impact of quantum computing.

This section of the graph demonstrates a significant leap in processing speed, storage capacity, and analytical power, driven by quantum algorithms and quantum-enhanced data structures. The graph concludes with a point significantly higher than the starting point, illustrating the transformative potential of AI and quantum computing in revolutionizing big data management. This visual representation highlights the exponential growth expected in the field, driven by both incremental improvements and disruptive technological advancements.

For example, the processing of genomic data, currently a major computational bottleneck in personalized medicine, could become significantly faster and more efficient, enabling more precise and timely treatments.

Case Studies

The following case studies illustrate the practical application of AI algorithms in addressing big data challenges across diverse industries. These examples demonstrate the tangible benefits achieved through the intelligent processing and storage of vast datasets. Each case highlights specific AI techniques, the challenges overcome, and the resulting improvements in efficiency and insights.

Netflix’s Recommendation System

Netflix utilizes a sophisticated recommendation system to personalize user experiences and improve content discovery. This system processes massive amounts of user data, including viewing history, ratings, and search queries. The core algorithms employed include collaborative filtering, content-based filtering, and deep learning models like recurrent neural networks (RNNs). These algorithms work together to predict user preferences and suggest relevant movies and TV shows.

The Netflix recommendation system successfully addressed the challenge of information overload, enabling users to easily discover content tailored to their individual tastes. This resulted in increased user engagement and retention, ultimately contributing to significant revenue growth.

The challenges involved handling the sheer volume and velocity of user data, ensuring real-time recommendations, and maintaining the accuracy of predictions despite the constant influx of new content. The results included a significant increase in user engagement, a higher customer lifetime value, and the ability to effectively promote new content to relevant audiences.
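
Collaborative filtering, one of the techniques named above, can be illustrated with a small user-based variant. This is a generic textbook sketch, not Netflix's actual implementation: the users, titles, and ratings are invented, and the `recommend` function is a hypothetical name.

```python
import math

def cosine_similarity(a: dict, b: dict) -> float:
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(target: str, ratings: dict, top_n: int = 2) -> list:
    """Rank unseen titles by the similarity-weighted average rating of other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == target:
            continue
        sim = cosine_similarity(ratings[target], other_ratings)
        if sim <= 0:
            continue
        for title, rating in other_ratings.items():
            if title in ratings[target]:
                continue  # the target user has already seen this title
            scores[title] = scores.get(title, 0.0) + sim * rating
            weights[title] = weights.get(title, 0.0) + sim
    predicted = {t: scores[t] / weights[t] for t in scores}
    return sorted(predicted, key=lambda t: (-predicted[t], t))[:top_n]

# Invented ratings on a 1-5 scale.
ratings = {
    "ana": {"Drama A": 5, "Thriller B": 4},
    "ben": {"Drama A": 5, "Thriller B": 4, "Comedy C": 5, "Horror D": 1},
    "cho": {"Drama A": 1, "Thriller B": 2, "Comedy C": 2, "Horror D": 5},
}

print(recommend("ana", ratings))  # ['Comedy C', 'Horror D']
```

Because `ana` rates titles much like `ben`, his opinion of the unseen titles carries more weight than `cho`'s, so the comedy ranks first.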

Fraud Detection in Financial Services

Financial institutions leverage AI algorithms to detect fraudulent transactions in real-time. Companies like Visa and Mastercard employ machine learning techniques such as anomaly detection, support vector machines (SVMs), and deep learning models to analyze transaction data and identify suspicious patterns. This involves processing vast datasets encompassing transaction details, user profiles, and location information.

By implementing AI-driven fraud detection, financial institutions significantly reduced financial losses due to fraudulent activities while improving the overall security of their systems.

The challenges included dealing with imbalanced datasets (where fraudulent transactions are far less frequent than legitimate ones), handling high-velocity data streams, and ensuring the accuracy and explainability of the detection models. The results included a significant reduction in fraudulent transactions, improved customer trust, and streamlined regulatory compliance.
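
The imbalanced-data challenge is easiest to see with numbers. The sketch below uses an invented set of 1,000 transactions with 10 frauds and compares a model that never flags anything with a hypothetical detector; it shows why accuracy alone is misleading and why precision and recall are reported instead.

```python
def metrics(actual, predicted):
    """Return (accuracy, precision, recall) for binary labels, where 1 means fraud."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Invented data: 10 fraudulent transactions followed by 990 legitimate ones.
actual = [1] * 10 + [0] * 990

# A model that labels everything as legitimate.
always_legit = [0] * 1000

# A hypothetical detector: catches 8 of 10 frauds, raises 12 false alarms.
detector = [1] * 8 + [0] * 2 + [1] * 12 + [0] * 978

print(metrics(actual, always_legit))  # (0.99, 0.0, 0.0)
print(metrics(actual, detector))      # (0.986, 0.4, 0.8)
```

The do-nothing model scores higher on accuracy while catching no fraud at all, which is why fraud models are usually evaluated on recall (frauds caught) and precision (alerts that were real).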

Precision Medicine in Healthcare

The healthcare industry is increasingly using AI to analyze patient data for personalized medicine. Hospitals and research institutions are employing machine learning techniques, including deep learning and natural language processing (NLP), to analyze patient medical records, genomic data, and clinical trial results. This enables the development of more effective and targeted treatments based on individual patient characteristics.

AI-powered precision medicine improves treatment outcomes by tailoring therapies to individual patients’ needs, leading to better health outcomes and reduced healthcare costs.

The challenges included ensuring data privacy and security, handling the complexity and heterogeneity of medical data, and validating the clinical effectiveness of AI-driven predictions. The results include improved diagnostic accuracy, personalized treatment plans, accelerated drug discovery, and reduced healthcare costs through more efficient resource allocation.

Epilogue

In conclusion, the integration of AI algorithms is not merely an enhancement but a necessity for efficient big data processing and storage. The ability to leverage AI for predictive analytics, optimized resource allocation, and automated data management represents a significant leap forward in handling the complexities of big data. As AI technology continues to evolve, particularly with the emergence of quantum computing, we can anticipate even more sophisticated and efficient solutions, unlocking unprecedented opportunities for data-driven insights and innovation across all sectors.