Best practices for integrating AI and big data in business are crucial for leveraging the transformative power of these technologies. Successfully merging AI and big data isn’t simply about acquiring the latest tools; it’s a strategic undertaking demanding careful planning, execution, and ongoing refinement. This guide delves into the key steps, from defining clear business objectives and ensuring data quality to selecting the right AI models and addressing ethical considerations.

We’ll explore practical strategies for integration, monitoring, and fostering the necessary talent to maximize the return on your investment in AI and big data.

This exploration covers the entire lifecycle, from initial strategy and data acquisition to model deployment, ongoing monitoring, and ethical considerations. We’ll examine practical challenges and solutions, offering a roadmap for organizations seeking to harness the combined power of AI and big data for competitive advantage. This isn’t just about technical implementation; it’s about aligning AI and big data initiatives with overall business goals to drive tangible results.

Defining Business Objectives for AI and Big Data Integration

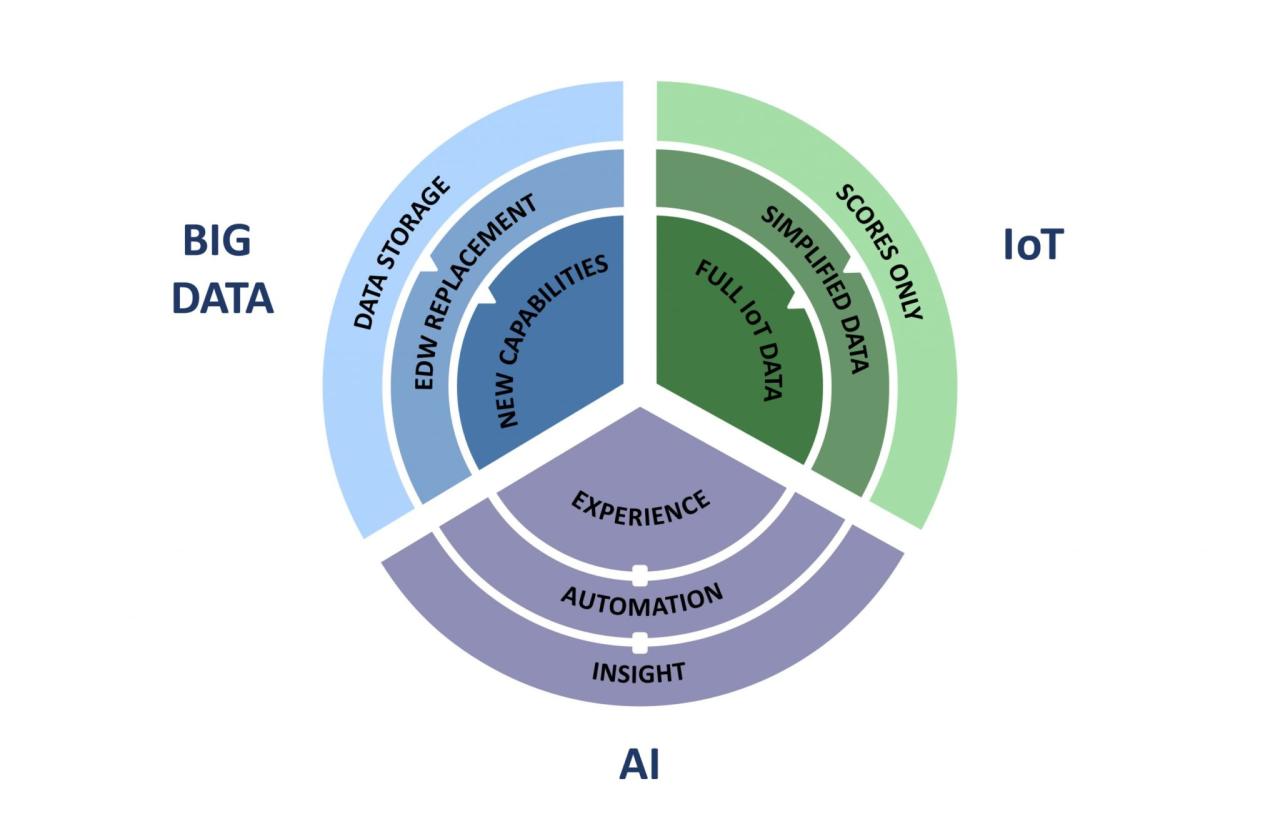

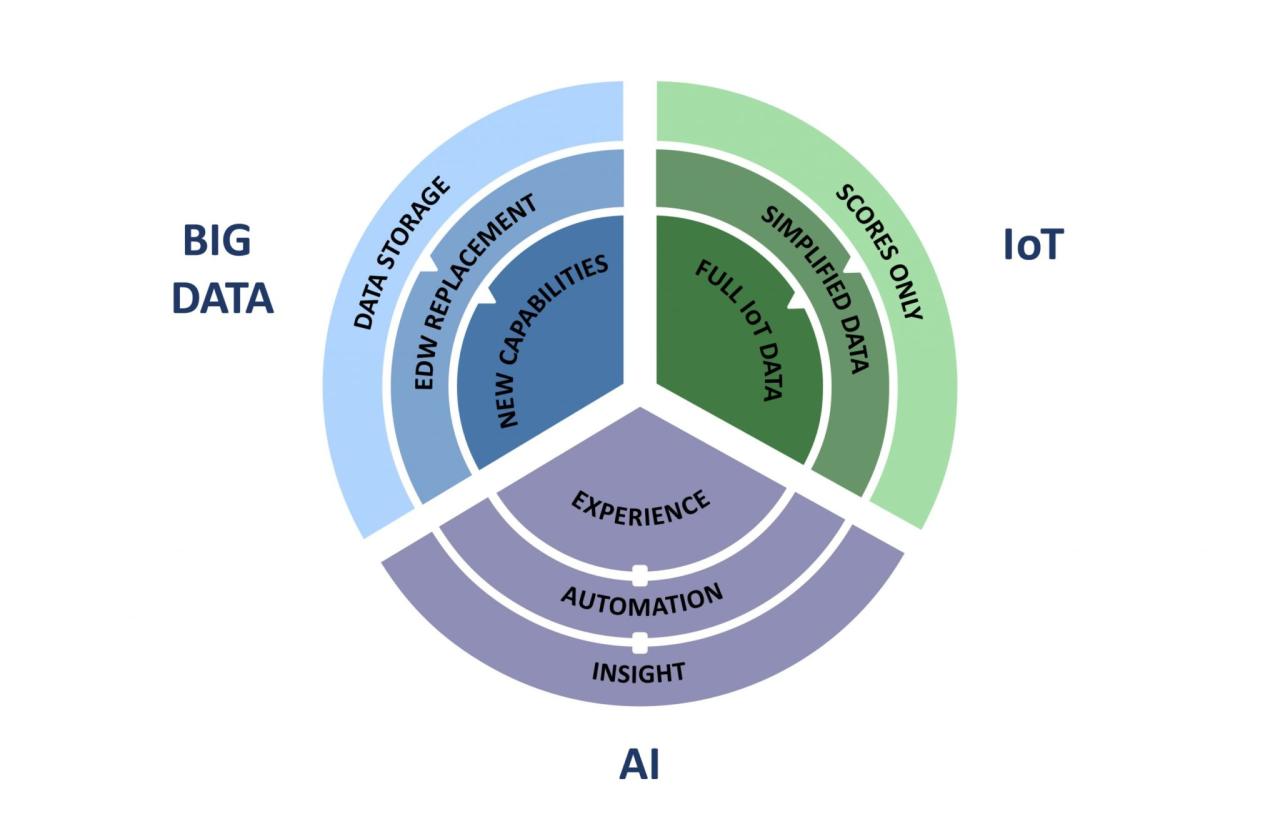

Successfully integrating AI and big data requires a clear understanding of the desired business outcomes. Without well-defined objectives, the implementation risks becoming a costly exercise with minimal return on investment. A strategic approach necessitates aligning AI and big data initiatives with overarching business goals, ensuring that the technology serves as a catalyst for growth and improved efficiency.

Effective integration of AI and big data translates into tangible improvements across various business functions. This section explores three distinct business goals achievable through such integration, outlining the relevant KPIs and their alignment with broader business strategies.

Improved Customer Experience and Retention

Enhancements in customer experience and increased customer retention are significant business goals directly addressable through AI and big data integration. By analyzing vast customer data sets – including purchase history, website interactions, and customer service interactions – businesses can develop highly personalized experiences. AI algorithms can predict customer churn, enabling proactive interventions to retain valuable customers. For instance, a retail company might use AI to identify customers at risk of churning and offer them targeted discounts or loyalty programs.

Key Performance Indicators (KPIs) for measuring success in this area include:

- Customer Satisfaction Score (CSAT): Measures overall customer happiness and satisfaction with products and services.

- Net Promoter Score (NPS): Gauges customer loyalty and willingness to recommend the business to others.

- Customer Churn Rate: Tracks the percentage of customers who stop using a product or service over a given period.

- Customer Lifetime Value (CLTV): Predicts the total revenue a customer will generate throughout their relationship with the business.

Alignment with Business Strategy:

Improving CSAT, NPS, and CLTV directly contributes to increased revenue and profitability, core elements of most business strategies. Reducing the churn rate lowers the spending needed to replace lost customers and protects existing revenue streams. Because these KPIs can be tracked consistently over time, they show how effectively AI and big data initiatives are enhancing customer experience and loyalty.
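As a minimal sketch, the customer KPIs above can be computed from raw counts and survey responses. The function names are hypothetical, and the definitions are common conventions rather than the only ones in use. NPS counts 9-10 ratings as promoters and 0-6 as detractors. CSAT is taken here as the share of 4-5 ratings on a 1-5 scale. CLTV uses a simplified revenue-only formula with no margin or discounting.

```python
from typing import Sequence


def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Fraction of the customers present at the start of a period who left during it."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


def net_promoter_score(ratings: Sequence[int]) -> float:
    """NPS on a -100..100 scale: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def csat_percent(ratings: Sequence[int]) -> float:
    """Share of satisfied responses (4 or 5 on a 1-5 scale), as a percentage."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return 100 * sum(1 for r in ratings if r >= 4) / len(ratings)


def simple_cltv(avg_order_value: float, orders_per_year: float, expected_years: float) -> float:
    """Simplified CLTV: expected revenue only (assumption: no margin, no discounting)."""
    return avg_order_value * orders_per_year * expected_years


print(churn_rate(1000, 50))                                   # 0.05
print(net_promoter_score([10, 9, 8, 7, 6, 3, 10, 9, 5, 10]))  # 20.0
print(csat_percent([5, 4, 3, 5, 2]))                          # 60.0
print(simple_cltv(80.0, 4, 3))                                # 960.0
```

Many organizations refine CLTV with gross margin, churn-based lifetimes, and discounting. The simple form is useful mainly for comparing segments against each other.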

Optimized Operational Efficiency

AI and big data can significantly optimize operational efficiency across various business functions. For example, predictive maintenance in manufacturing using sensor data and machine learning algorithms can minimize downtime and reduce maintenance costs. Similarly, AI-powered chatbots can automate customer service inquiries, freeing up human agents to focus on more complex issues. This leads to streamlined workflows and reduced operational expenses.

Key Performance Indicators (KPIs) for measuring success in this area include:

- Downtime Reduction: Measures the decrease in operational interruptions due to equipment failure or other issues.

- Cost Savings: Tracks the reduction in operational expenses resulting from automation and optimization.

- Process Cycle Time: Measures the time taken to complete a specific business process, such as order fulfillment or invoice processing.

- Employee Productivity: Assesses the efficiency and output of employees through metrics like tasks completed per unit of time.

Alignment with Business Strategy:

Reducing downtime, cutting operational costs, and improving process efficiency directly contribute to increased profitability and competitiveness. Increased employee productivity translates to higher output and better resource allocation, supporting overall business growth objectives.
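Most of the operational KPIs come down to before/after comparisons and timestamp arithmetic. This is a minimal sketch. The helper names and example figures are hypothetical. In practice the inputs would come from maintenance logs, workflow systems, or time-tracking data.

```python
from datetime import datetime
from statistics import mean


def percent_reduction(before: float, after: float) -> float:
    """Percentage decrease from a baseline, e.g. monthly downtime hours or costs."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (before - after) / before


def average_cycle_time_hours(intervals) -> float:
    """Mean elapsed hours for (start, end) datetime pairs, e.g. order received -> shipped."""
    return mean((end - start).total_seconds() / 3600 for start, end in intervals)


def tasks_per_hour(tasks_completed: int, hours_worked: float) -> float:
    """A simple productivity ratio: output per unit of time."""
    return tasks_completed / hours_worked


orders = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 13, 0)),  # 4 hours
    (datetime(2024, 1, 2, 8, 0), datetime(2024, 1, 2, 14, 0)),  # 6 hours
]
print(percent_reduction(before=40, after=30))  # downtime hours cut by 25.0 percent
print(average_cycle_time_hours(orders))        # 5.0
print(tasks_per_hour(120, 40))                 # 3.0
```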

Improved Decision-Making and Predictive Analytics

AI and big data provide the capability to analyze massive datasets, identify trends, and make data-driven predictions. This empowers businesses to make more informed decisions across all areas, from marketing and sales to product development and risk management. For example, a financial institution might use AI to detect fraudulent transactions or predict market trends.

Key Performance Indicators (KPIs) for measuring success in this area include:

- Accuracy of Predictions: Measures the correctness of AI-driven forecasts, such as sales projections or risk assessments.

- Improved Decision-Making Speed: Tracks the reduction in time required to make critical business decisions.

- Return on Investment (ROI) of AI initiatives: Quantifies the financial benefits derived from AI-driven insights and actions.

- Reduction in Errors: Measures the decrease in mistakes due to improved data-driven decision-making.

Alignment with Business Strategy:

Accurate predictions, faster decision-making, and higher ROI contribute directly to improved profitability and competitive advantage. Reducing errors minimizes losses and improves overall business performance. These KPIs demonstrate the value of AI and big data in driving strategic decision-making and achieving key business objectives.
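Prediction accuracy can be measured in several ways. For numeric forecasts such as sales projections, mean absolute percentage error (MAPE) is one common choice, and it is used here purely as an illustration. ROI is computed as net benefit divided by cost. Both helpers and the figures below are hypothetical.

```python
def mape(actuals, forecasts) -> float:
    """Mean absolute percentage error, in percent (lower is better)."""
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actuals):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)) / len(actuals)


def roi_percent(total_benefit: float, total_cost: float) -> float:
    """(benefit - cost) / cost, as a percentage."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return 100 * (total_benefit - total_cost) / total_cost


print(round(mape([100, 200, 400], [110, 180, 400]), 2))        # 6.67
print(roi_percent(total_benefit=150_000, total_cost=100_000))  # 50.0
```

Attributing "total_benefit" to the AI initiative is the hard part in practice. A comparison against a control group or a pre-deployment baseline makes the figure more defensible.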

Data Acquisition and Preparation

Successfully integrating AI and big data hinges on the quality and relevance of the data used to train models. This section details the crucial steps involved in acquiring, preparing, and governing your data for optimal AI performance. A robust data pipeline ensures that your AI initiatives are built on a solid foundation, leading to more accurate predictions and better business outcomes.

Data acquisition and preparation involves a multi-stage process that begins with identifying relevant data sources and culminates in a structured, clean dataset ready for model training. Careful consideration of data quality, governance, and compliance is paramount throughout this process. Neglecting these steps can lead to inaccurate models, biased results, and ultimately, project failure.

Identifying and Collecting Relevant Data Sources

Identifying suitable data sources requires a thorough understanding of the business problem you are trying to solve with AI. This involves pinpointing the key variables that influence the outcome and determining where that data resides. Sources might include internal databases (CRM, ERP, transactional systems), external APIs (weather data, market trends), or publicly available datasets (government statistics, research publications). Each source must be evaluated for its relevance, reliability, and accessibility.

Data collection methods will vary depending on the source; this could involve database queries, API calls, web scraping, or manual data entry. The process should be meticulously documented to ensure traceability and reproducibility.
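Documentation for traceability can start at collection time. The sketch below parses a CSV extract and records a provenance entry: the source, a UTC timestamp, the row count, and a SHA-256 checksum of the raw content. With that entry, a dataset can later be traced and re-verified. The source name and the inline CSV text are hypothetical stand-ins for a real database export or API response.

```python
import csv
import hashlib
import io
from datetime import datetime, timezone


def collect_csv(source_name: str, raw_text: str):
    """Parse a CSV extract and return (rows, provenance_record)."""
    rows = list(csv.DictReader(io.StringIO(raw_text)))
    provenance = {
        "source": source_name,
        "collected_at": datetime.now(timezone.utc).isoformat(),
        "row_count": len(rows),
        # The checksum lets anyone confirm later that the raw extract was not altered.
        "sha256": hashlib.sha256(raw_text.encode("utf-8")).hexdigest(),
    }
    return rows, provenance


raw = "customer_id,total_spend\n1,120.50\n2,89.99\n"
rows, record = collect_csv("crm_export_2024_q1", raw)
print(rows[0])              # {'customer_id': '1', 'total_spend': '120.50'}
print(record["row_count"])  # 2
```

All values arrive as strings at this stage. Type conversion belongs to the preprocessing step that follows.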

Data Preprocessing: Handling Missing Values and Outliers

Once data is collected, it rarely arrives in a perfectly usable format. Preprocessing involves transforming raw data into a suitable format for AI model training. This crucial step significantly impacts model accuracy and performance. A common challenge is handling missing values. Strategies include imputation (replacing missing values with estimated values based on other data points), deletion (removing rows or columns with missing data), or using algorithms specifically designed to handle missing data.
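Here is a minimal sketch of the imputation strategy, assuming records are plain dictionaries with `None` marking a missing value. Numeric fields are filled with the median and categorical fields with the mode. A deletion-based alternative is included for comparison. The field names are hypothetical.

```python
from statistics import median, mode


def impute(records, numeric_fields, categorical_fields):
    """Return new records with None filled: median for numeric, mode for categorical fields."""
    fills = {}
    for field in numeric_fields:
        fills[field] = median(r[field] for r in records if r.get(field) is not None)
    for field in categorical_fields:
        fills[field] = mode(r[field] for r in records if r.get(field) is not None)
    imputed = []
    for record in records:
        row = dict(record)  # copy so the raw data stays untouched
        for field, value in fills.items():
            if row.get(field) is None:
                row[field] = value
        imputed.append(row)
    return imputed


def drop_incomplete(records):
    """Deletion strategy: keep only records with no missing values."""
    return [r for r in records if all(v is not None for v in r.values())]


customers = [
    {"age": 34, "segment": "retail"},
    {"age": None, "segment": "retail"},
    {"age": 52, "segment": None},
    {"age": 41, "segment": "wholesale"},
]
filled = impute(customers, ["age"], ["segment"])
print(filled[1]["age"], filled[2]["segment"])  # 41 retail
print(len(drop_incomplete(customers)))         # 2
```

Deletion halves this small dataset. Imputation keeps every row, but it can understate variability when many values are missing.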

Outliers, data points significantly deviating from the norm, can also skew model results. Techniques for outlier detection and treatment include using statistical methods (e.g., z-scores, IQR), visual inspection (scatter plots, box plots), or employing robust algorithms less sensitive to outliers.
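Both statistical methods mentioned above fit in a few lines of standard-library Python. The IQR rule uses Tukey's fences (k = 1.5). The z-score rule flags values far from the mean in units of standard deviation. The thresholds and the daily order counts are illustrative assumptions.

```python
from statistics import mean, pstdev, quantiles


def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def zscore_outliers(values, threshold=3.0):
    """Values more than `threshold` population standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]


daily_orders = [10, 12, 11, 13, 12, 11, 95]
print(iqr_outliers(daily_orders))                    # [95]
print(zscore_outliers(daily_orders, threshold=2.0))  # [95]
print(zscore_outliers(daily_orders))                 # []
```

With only seven points, the extreme value inflates the mean and standard deviation so much that its own z-score stays below 3 (about 2.45 here). As a result, the default threshold flags nothing, while the quartile-based IQR rule is less affected. Whether a flagged value is removed, capped, or kept should be a business decision, since a genuine spike in orders is not an error.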

Data Governance Framework

A robust data governance framework is essential to maintain data quality, ensure compliance with regulations (e.g., GDPR, CCPA), and promote trust in AI-driven decisions. This framework should define roles and responsibilities, data quality standards, data security protocols, and processes for data access and management. Regular data audits and quality checks are crucial for identifying and addressing data issues proactively.

Documentation is key; this includes data dictionaries, metadata descriptions, and procedures for data handling. Transparency and accountability are crucial elements of a successful data governance framework.

Example Data Sources and Treatment

| Data Source | Data Type | Cleaning Method | Validation Check |
|---|---|---|---|
| Customer Relationship Management (CRM) System | Customer demographics, purchase history | Imputation for missing values (e.g., mean/median for numerical data, mode for categorical data); outlier removal using IQR | Data type consistency checks, range checks, uniqueness constraints |
| Website Analytics Platform | Website traffic, user behavior | Data transformation (e.g., log transformation for skewed data); outlier removal using z-scores | Data completeness checks, consistency checks across different data points |
| Social Media API | Social media posts, sentiment analysis | Data cleaning (e.g., removing irrelevant characters, handling missing values by removing entries); data normalization (e.g., stemming, lemmatization) | Data integrity checks, consistency checks across different social media platforms |
| External Market Research Database | Market trends, competitor analysis | Data standardization (e.g., converting units, currency); outlier analysis and handling | Data source verification, consistency with other data sources |
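The validation checks listed for CRM data (type consistency, range checks, uniqueness constraints) can be expressed as a small function that returns readable errors instead of silently dropping rows. This is a sketch. The field names, expected types, and age range are hypothetical, and dedicated data-validation tooling would typically take over at scale.

```python
def validate(records, expected_types, ranges, unique_key):
    """Return human-readable validation errors; an empty list means every check passed."""
    errors = []
    seen_keys = set()
    for i, record in enumerate(records):
        # Type consistency: every expected field present and of the expected type.
        for field, expected in expected_types.items():
            value = record.get(field)
            if value is None:
                errors.append(f"row {i}: missing {field}")
            elif not isinstance(value, expected):
                errors.append(f"row {i}: {field} should be {expected.__name__}")
        # Range checks on numeric fields.
        for field, (low, high) in ranges.items():
            value = record.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                errors.append(f"row {i}: {field}={value} outside [{low}, {high}]")
        # Uniqueness constraint on the key field.
        key = record.get(unique_key)
        if key in seen_keys:
            errors.append(f"row {i}: duplicate {unique_key} {key}")
        seen_keys.add(key)
    return errors


crm_rows = [
    {"customer_id": 1, "age": 34, "total_spend": 120.5},
    {"customer_id": 2, "age": 212, "total_spend": 89.99},
    {"customer_id": 2, "age": 45, "total_spend": "n/a"},
]
errors = validate(
    crm_rows,
    expected_types={"customer_id": int, "age": int, "total_spend": float},
    ranges={"age": (18, 120)},
    unique_key="customer_id",
)
for e in errors:
    print(e)
# row 1: age=212 outside [18, 120]
# row 2: total_spend should be float
# row 2: duplicate customer_id 2
```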

AI Model Selection and Development

Choosing the right AI model is crucial for successful big data integration. The selection process depends heavily on the specific business problem, the nature of the data, and the desired outcome. A poorly chosen model can lead to inaccurate predictions, wasted resources, and ultimately, project failure. This section explores the key considerations in AI model selection and the development process.

AI algorithms are diverse, each with strengths and weaknesses. The optimal choice hinges on a careful evaluation of the business objective and data characteristics. For instance, predictive modeling tasks might benefit from algorithms like linear regression, logistic regression, or decision trees, while anomaly detection might be better served by techniques such as One-Class SVM or isolation forests. The following sections detail the process of selecting and developing these models.

AI Algorithm Comparison for Business Problems

Different AI algorithms are suited to different business problems. Predictive modeling, for example, aims to forecast future outcomes based on historical data. This often involves regression algorithms (linear, polynomial) for continuous outcomes or classification algorithms (logistic regression, decision trees, support vector machines, random forests, naive Bayes) for categorical outcomes. Anomaly detection, on the other hand, focuses on identifying unusual patterns or outliers.

Algorithms like One-Class SVM, isolation forests, and local outlier factor (LOF) are frequently used for this purpose. Consider a fraud detection system: a classification algorithm might be used to predict whether a transaction is fraudulent or legitimate, while an anomaly detection algorithm could identify unusual spending patterns that warrant further investigation. Similarly, in predictive maintenance, regression algorithms might predict the remaining useful life of a machine, while anomaly detection could pinpoint unusual vibrations or temperature readings suggesting impending failure.
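scikit-learn provides `IsolationForest`, `OneClassSVM`, and `LocalOutlierFactor` for the algorithms named above. To keep this sketch dependency-free, it substitutes a simpler technique: a rolling z-score over a trailing window of sensor readings. The method flags a reading that departs sharply from recent behavior, much like the unusual temperature readings in the predictive-maintenance example. The window size, threshold, and temperature values are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_anomalies(readings, window=5, threshold=3.0):
    """Indices of readings more than `threshold` std devs from the trailing-window mean."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)  # the window slides forward, including flagged readings
    return flagged


bearing_temps_c = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 78.5, 70.2, 70.0]
print(rolling_anomalies(bearing_temps_c))  # [6]
```

Because a flagged spike stays in the window for a few readings, it temporarily widens the baseline and can mask a second anomaly right after it. Multivariate methods such as isolation forests avoid some of these limitations.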

Factors Influencing AI Model Selection

Selecting the appropriate AI model involves considering several key factors beyond the type of business problem. Accuracy, interpretability, and scalability are particularly important.

- Accuracy: The model’s ability to correctly predict or classify data. High accuracy is generally desired, but it should be balanced against other factors like interpretability and computational cost.

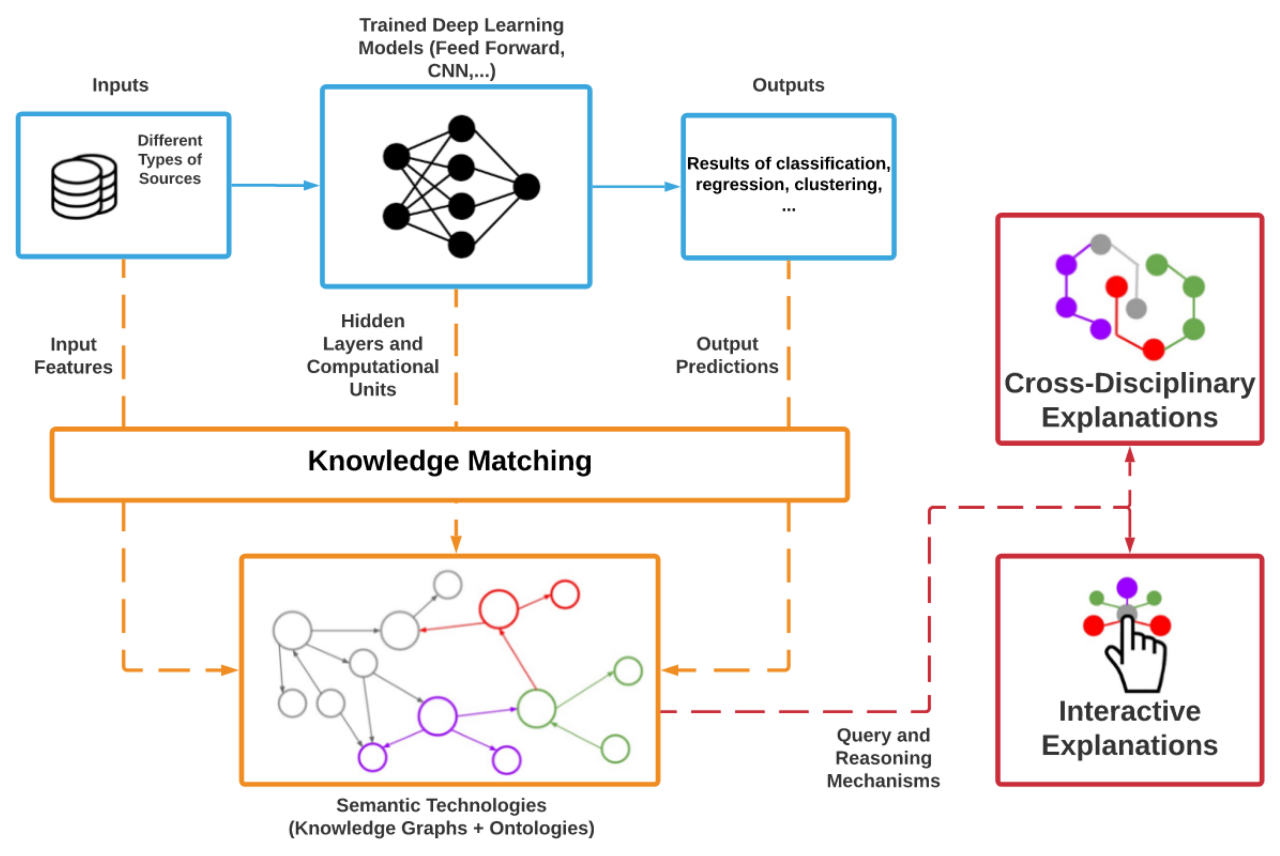

- Interpretability: The extent to which the model’s decisions can be understood. Some models, like linear regression, are highly interpretable, while others, like deep neural networks, are often considered “black boxes.” Interpretability is crucial in situations where understanding the reasons behind predictions is vital, such as in loan applications or medical diagnosis.

- Scalability: The model’s ability to handle large datasets and high volumes of data efficiently. This is particularly important for businesses dealing with massive amounts of data, as a scalable model can process data quickly and accurately without compromising performance.
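To illustrate why linear regression counts as interpretable, the sketch below fits a one-variable least-squares line with the closed-form formulas. The fitted slope reads directly as "each additional unit of x is associated with roughly `slope` more units of y". The variables (monthly ad spend and sales, both in thousands) and the data are hypothetical, and the coefficient describes association, not causation.

```python
from statistics import mean


def fit_simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must vary")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


ad_spend = [1, 2, 3, 4, 5]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_simple_linear_regression(ad_spend, sales)
print(f"sales ~ {intercept:.2f} + {slope:.2f} * ad_spend")  # sales ~ 1.09 + 1.97 * ad_spend
```

A deep neural network fitted to the same data might predict as well or better, but it offers no comparable two-number summary of its behavior.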

AI Model Training, Validation, and Deployment

Developing and deploying an AI model involves a structured process. This typically includes data splitting, model training, validation, and deployment into a production environment.

- Data Splitting: The initial dataset is divided into training, validation, and testing sets. The training set is used to train the model, the validation set is used to tune hyperparameters and detect overfitting, and the testing set is used to evaluate the final model’s performance on unseen data.

- Model Training: The chosen algorithm is trained on the training dataset. This involves feeding the data to the algorithm and allowing it to learn patterns and relationships. The process involves iterative adjustments to the model’s parameters to minimize error.

- Model Validation: The trained model is evaluated on the validation set to assess its performance and identify potential issues like overfitting. Hyperparameters are tuned based on the validation results to optimize performance.

- Model Deployment: Once the model performs satisfactorily on the validation set and its final performance has been confirmed on the held-out test set, it is deployed into a production environment. This may involve integrating the model into existing business systems or creating a new application to utilize its predictions.
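The data-splitting step can be sketched in a few lines: shuffle once with a fixed seed so the split is reproducible, then cut the result into training, validation, and test portions. The 70/15/15 proportions and the seed are assumptions, not requirements. Time-ordered data such as sales histories is usually split chronologically instead, so that the model is never trained on data from after the period it is evaluated on.

```python
import random


def train_val_test_split(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle reproducibly, then split into (train, validation, test) lists."""
    if not (0 < train_frac < 1 and 0 <= val_frac < 1 and train_frac + val_frac < 1):
        raise ValueError("fractions must leave a share for the test set")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # local RNG: does not disturb global random state
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

The test portion should be set aside and touched only once, after hyperparameter tuning on the validation portion is finished.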

Integrating AI with Existing Business Systems

Successfully integrating AI models into existing business infrastructure is crucial for realizing the full potential of AI-driven insights. This involves careful planning, robust technical implementation, and ongoing monitoring to ensure seamless data flow and accurate results. A phased approach, starting with pilot projects, is often the most effective strategy.

Integrating AI models, particularly machine learning algorithms, with existing Enterprise Resource Planning (ERP) systems and databases requires a strategic approach that considers both technical and organizational factors. The goal is to leverage the existing data infrastructure while seamlessly incorporating AI’s predictive and analytical capabilities to enhance decision-making and operational efficiency.

Best Practices for Integrating AI Models with ERP Systems and Databases

Effective integration requires a well-defined strategy. Key steps include identifying suitable data sources within the ERP system, ensuring data quality and consistency, and selecting appropriate AI models based on the specific business problem. Furthermore, establishing clear communication channels between the AI development team and the ERP system administrators is vital for successful implementation. Finally, robust monitoring and evaluation mechanisms should be in place to track performance and make necessary adjustments.

Potential Challenges in Integrating AI and Methods to Overcome Them

Several challenges can hinder the successful integration of AI into existing business systems. Data silos, for instance, can limit the availability of comprehensive data sets needed for training effective AI models. Legacy systems, with their often outdated architectures, can pose significant integration hurdles. Furthermore, ensuring data security and compliance with relevant regulations is paramount. Overcoming these challenges requires a combination of data integration strategies, such as ETL (Extract, Transform, Load) processes, and the adoption of modern, scalable data architectures.

Employing robust security protocols and adhering to data governance frameworks are essential to address security and compliance concerns. For example, a company might use a data lakehouse architecture to combine structured and unstructured data from various sources, addressing data silos. They could also implement a phased migration strategy for legacy systems, prioritizing the integration of AI with the most critical business functions first.
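A toy ETL pass shows how two silos can be combined. Customer records come from a CRM export (CSV) and invoices from a billing API (JSON). Both are harmonized to shared key names and types, then loaded into one relational store where they can be joined. The source formats and field names are hypothetical, and Python's built-in `sqlite3` stands in for a warehouse or lakehouse.

```python
import csv
import io
import json
import sqlite3


def run_etl(conn, crm_csv: str, billing_json: str) -> None:
    # Extract: read each silo in its native format.
    customers = list(csv.DictReader(io.StringIO(crm_csv)))
    invoices = json.loads(billing_json)

    # Transform: harmonize key names ("cust" -> customer_id) and convert text to numbers.
    customer_rows = [(int(c["customer_id"]), c["name"].strip(), c["region"]) for c in customers]
    invoice_rows = [(int(i["cust"]), float(i["amount_eur"])) for i in invoices]

    # Load: write both into a shared schema keyed on customer_id.
    conn.execute("CREATE TABLE IF NOT EXISTS customers ("
                 "customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT)")
    conn.execute("CREATE TABLE IF NOT EXISTS invoices ("
                 "customer_id INTEGER REFERENCES customers(customer_id), amount_eur REAL)")
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", customer_rows)
    conn.executemany("INSERT INTO invoices VALUES (?, ?)", invoice_rows)
    conn.commit()


crm_csv = "customer_id,name,region\n1, Acme Corp ,EU\n2,Globex,US\n"
billing_json = ('[{"cust": 1, "amount_eur": "1200.50"}, {"cust": 2, "amount_eur": "980.00"},'
                ' {"cust": 1, "amount_eur": "300.00"}]')

conn = sqlite3.connect(":memory:")
run_etl(conn, crm_csv, billing_json)
revenue = conn.execute(
    "SELECT c.name, SUM(i.amount_eur) FROM customers c "
    "JOIN invoices i ON i.customer_id = c.customer_id "
    "GROUP BY c.customer_id ORDER BY c.customer_id"
).fetchall()
print(revenue)  # [('Acme Corp', 1500.5), ('Globex', 980.0)]
```

As written, re-running the load would append the invoices a second time. A production pipeline would make loads idempotent, for example by keying invoices on their source IDs.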

Data Flow Between AI Models and Business Systems

The typical data flow between AI models and business systems forms a cycle:

- The ERP system and databases feed data extraction and preprocessing.

- The preprocessed data is used for AI model training and deployment.

- The deployed model produces predictions/insights, which flow into business systems integration (e.g., updating the ERP system, generating reports).

- A feedback loop connects the model’s results back to data extraction and preprocessing, representing the iterative nature of AI model refinement.

This cycle captures how AI model deployment and refinement fit together. Data is extracted from the ERP system, preprocessed, and used to train and deploy the AI model, which then generates predictions.

These predictions are integrated back into the business systems, providing valuable insights for decision-making. The feedback loop allows for continuous model improvement based on real-world performance. For instance, a sales forecasting model integrated with an ERP system might initially predict sales with a certain degree of error. Feedback from actual sales data is then used to refine the model, leading to more accurate predictions over time.
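The feedback loop can be illustrated with simple exponential smoothing, used here as a deliberately small stand-in for a full forecasting model. Each time actual sales are recorded, the forecast error is fed back, and the next forecast moves a fraction `alpha` of the way toward the observed value. The class name, `alpha`, and the sales figures are hypothetical. In a production system, the same loop would more typically log errors and trigger periodic retraining.

```python
class SmoothedSalesForecaster:
    """Simple exponential smoothing: level_new = level + alpha * (actual - level)."""

    def __init__(self, initial_level: float, alpha: float = 0.3):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.level = initial_level
        self.alpha = alpha

    def forecast(self) -> float:
        """Forecast for the next period."""
        return self.level

    def record_actual(self, actual: float) -> float:
        """Feed back an observed value; returns the error of the forecast it replaces."""
        error = actual - self.level
        self.level += self.alpha * error
        return error


model = SmoothedSalesForecaster(initial_level=100.0, alpha=0.5)
errors = [model.record_actual(actual) for actual in [120, 110, 130]]
print(errors, model.forecast())  # [20.0, 0.0, 20.0] 120.0
```

A larger `alpha` reacts faster to new actuals but also chases noise. Tracking the returned errors over time shows whether the loop is actually improving accuracy.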

Ensuring Ethical Considerations and Data Privacy

The ethical deployment of AI and big data is paramount for business success and maintaining public trust. Ignoring ethical implications can lead to reputational damage, legal repercussions, and a loss of customer confidence. This section details strategies for mitigating ethical risks and ensuring data privacy compliance.

The integration of AI and big data introduces several ethical considerations, primarily revolving around bias, fairness, and privacy. Algorithmic bias, often stemming from biased training data, can perpetuate and amplify existing societal inequalities. Fairness in AI requires actively addressing these biases to ensure equitable outcomes for all users. Simultaneously, the vast quantities of data collected necessitate robust data protection measures to comply with regulations and protect individual privacy rights.

Bias Mitigation and Fairness in AI

Addressing bias in AI requires a multi-faceted approach. Firstly, careful selection and curation of training data are crucial. This involves identifying and removing potentially biased data points and ensuring the dataset represents the diversity of the population it will impact. Techniques like data augmentation can help balance underrepresented groups. Secondly, employing algorithmic fairness metrics during model development allows for the assessment and mitigation of bias.

These metrics quantify fairness across different demographic groups, enabling developers to identify and rectify disparities. Finally, ongoing monitoring and auditing of deployed AI systems are essential to detect and correct any emerging biases. For example, a loan application algorithm trained on historical data might disproportionately reject applications from certain demographic groups if historical lending practices themselves were biased.

By actively monitoring the algorithm’s outputs and comparing them to fairness metrics, such biases can be identified and addressed through model retraining or algorithmic adjustments.
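One widely used fairness check compares outcome rates across groups. The sketch below computes three things: per-group approval rates, the demographic parity difference (highest rate minus lowest), and the disparate impact ratio (lowest rate divided by highest). A ratio below 0.8 is often treated as a warning sign under the "four-fifths" rule of thumb. These are only two of many fairness metrics, and the group labels and counts are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def fairness_report(decisions):
    rates = approval_rates(decisions)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "demographic_parity_difference": highest - lowest,
        "disparate_impact_ratio": lowest / highest if highest else 1.0,
    }


loan_decisions = ([("A", True)] * 80 + [("A", False)] * 20
                  + [("B", True)] * 50 + [("B", False)] * 50)
report = fairness_report(loan_decisions)
print(report["rates"])                                # {'A': 0.8, 'B': 0.5}
print(round(report["disparate_impact_ratio"], 3))     # 0.625, below the 0.8 rule of thumb
```

A low ratio is a prompt for investigation, not proof of unfair treatment. The next step is to check whether legitimate, job- or risk-related factors explain the gap.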

Data Privacy and Regulatory Compliance

Data privacy is a critical ethical consideration in the age of big data. Compliance with regulations such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the US is mandatory for organizations that fall within their scope. These regulations establish strict guidelines for data collection, storage, processing, and sharing. Implementing robust data governance frameworks is essential.

This includes establishing clear data usage policies, implementing data encryption and anonymization techniques, and providing individuals with control over their data, including the right to access, correct, and delete their information. Furthermore, organizations must conduct regular data privacy impact assessments to identify and mitigate potential risks. Failure to comply with these regulations can result in significant fines and reputational damage.

For instance, under GDPR the most serious infringements, which can include failing to properly secure customer data, carry fines of up to €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher.
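As one concrete privacy technique, direct identifiers can be pseudonymized with a keyed hash (HMAC-SHA-256) before data reaches analysts, and fields not needed for analysis can be dropped. The same email always maps to the same token, so records can still be joined, but the token cannot be reversed without the secret key. This is a sketch with hypothetical field names. Pseudonymized data generally still counts as personal data under GDPR, because whoever holds the key can re-identify individuals. The technique reduces risk but is not anonymization, and the key itself must be protected and access-controlled.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed token for an identifier (HMAC-SHA-256, hex-encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, identifiers: set, drop: set, key: bytes) -> dict:
    """Tokenize direct identifiers and omit fields not needed for the analysis."""
    return {
        field: pseudonymize(str(value), key) if field in identifiers else value
        for field, value in record.items()
        if field not in drop
    }


key = secrets.token_bytes(32)  # in practice, load from a key-management service
customer = {"email": "jane@example.com", "phone": "+1-555-0100",
            "postcode": "90210", "total_spend": 420.0}
safe = minimize_record(customer, identifiers={"email"}, drop={"phone"}, key=key)
print(sorted(safe))  # ['email', 'postcode', 'total_spend']
```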

Addressing Ethical Concerns and Risks

A proactive approach to ethical risk management is vital. This involves establishing an ethical framework that guides AI development and deployment. This framework should define ethical principles, establish clear responsibilities, and outline procedures for addressing ethical dilemmas. Regular ethical reviews of AI projects throughout their lifecycle are crucial. This allows for the identification and mitigation of potential ethical concerns before they escalate into significant problems.

Transparency in AI systems is also crucial. Explainable AI (XAI) techniques can help make AI decision-making processes more understandable, increasing trust and accountability. Finally, establishing an independent ethics board or committee to oversee AI activities can provide an objective perspective and ensure ethical considerations are prioritized. This board could review proposed AI projects, assess potential risks, and provide guidance on ethical best practices.

This proactive approach can help prevent ethical breaches and maintain public trust.

Monitoring and Evaluation

Successfully integrating AI and big data requires a robust monitoring and evaluation framework. Continuous assessment ensures optimal performance, identifies areas for improvement, and ultimately maximizes the return on investment. This involves tracking key metrics, regularly evaluating the effectiveness of AI-driven solutions, and implementing a proactive plan for continuous system improvement.

Effective monitoring and evaluation go beyond simply checking if a system is running. It’s about understanding how well the AI is performing its intended function, identifying biases, and ensuring the system aligns with evolving business needs. This iterative process allows for course correction, preventing costly mistakes and maximizing the value of your AI and big data investments.

Key Performance Indicators (KPIs) for AI Models and Big Data Infrastructure

Monitoring the performance of AI models and the underlying big data infrastructure is crucial. A range of key performance indicators (KPIs) should be tracked to provide a comprehensive understanding of system health and effectiveness. These metrics should be tailored to the specific AI application and business objectives.

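- Model Accuracy: This measures the proportion of all predictions or classifications that the AI model gets right. For example, in a fraud detection system, accuracy reflects the percentage of all transactions, fraudulent and legitimate, that are classified correctly. Because fraud is rare, a model can achieve high accuracy while still missing many fraudulent transactions, so accuracy should be read alongside precision and recall. Low accuracy may indicate a need for model retraining or refinement.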

- Precision and Recall: These metrics are particularly relevant for classification problems. Precision measures the proportion of correctly identified positive cases among all cases identified as positive. Recall measures the proportion of correctly identified positive cases among all actual positive cases. A high precision and recall indicate a well-performing model. For example, in a spam filter, high precision means fewer legitimate emails are flagged as spam, and high recall means fewer spam emails slip through.

- F1-Score: This is the harmonic mean of precision and recall, providing a single metric that balances both. It’s useful when both precision and recall are important, such as in medical diagnosis where both false positives and false negatives are costly.

- Data Latency: This refers to the time it takes for data to be processed and for the AI model to generate results. High latency can negatively impact user experience and business operations. For example, in a real-time recommendation system, high latency leads to slow loading times and reduced user engagement.

- Infrastructure Uptime: This measures the percentage of time the big data infrastructure is operational. High uptime is essential for ensuring the continuous operation of AI systems. Downtime can result in significant losses in productivity and revenue.

- Resource Utilization: This tracks the consumption of computational resources (CPU, memory, storage) by the AI system. Monitoring resource utilization helps identify bottlenecks and optimize resource allocation. For instance, monitoring CPU usage can help identify if the system needs more powerful hardware or if the model needs optimization for better performance.
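The classification metrics above follow directly from confusion-matrix counts. The sketch below uses a hypothetical fraud-detection sample of 1,000 transactions, 10 of them fraudulent, to show why accuracy alone can mislead on rare events. It also adds a nearest-rank 95th-percentile helper for latency. Reporting latency as a percentile is a common convention, assumed here rather than prescribed by this guide.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive, e.g. fraud)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def p95(samples):
    """Nearest-rank 95th percentile, e.g. of request latencies in milliseconds."""
    ordered = sorted(samples)
    if not ordered:
        raise ValueError("no samples")
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer arithmetic
    return ordered[rank - 1]


y_true = [1] * 10 + [0] * 990
y_pred = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 988  # 6 frauds caught, 4 missed, 2 false alarms
print(classification_metrics(y_true, y_pred))
# accuracy 0.994, precision 0.75, recall 0.6, f1 ~ 0.667
print(p95(range(1, 101)))  # 95
```

Accuracy of 99.4% looks excellent, yet 4 of the 10 fraudulent transactions went undetected. The recall figure of 0.6 is what exposes the gap.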

Evaluating the Effectiveness of AI-Driven Solutions

Evaluating the effectiveness of AI-driven solutions involves a systematic process of comparing predicted outcomes against actual results. This evaluation should be based on predefined metrics and should incorporate both quantitative and qualitative assessments. The process typically involves:

- Defining success metrics: Before deploying an AI solution, clearly define the key metrics that will be used to evaluate its success. These metrics should be aligned with the overall business objectives.

- Collecting and analyzing data: Gather data on the performance of the AI solution, comparing predicted outcomes with actual results. This data should be collected consistently over time to track performance trends.

- Interpreting results: Analyze the collected data to determine whether the AI solution is meeting the predefined success metrics. Identify any areas where the solution is underperforming.

- Making adjustments: Based on the evaluation results, make necessary adjustments to the AI model, the data pipeline, or the business processes. This might involve retraining the model, improving data quality, or changing how the AI system is integrated into workflows.
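The define-collect-interpret-adjust cycle becomes concrete when success metrics are stored as explicit targets and each evaluation run is compared against them. This sketch assumes each target is a threshold plus a direction. "min" marks metrics that should stay above a floor, and "max" marks metrics that should stay below a ceiling. The metric names and numbers are hypothetical.

```python
def evaluate_against_targets(observed: dict, targets: dict) -> list:
    """Return a description of every metric that misses its predefined target."""
    shortfalls = []
    for name, (threshold, direction) in targets.items():
        if direction not in ("min", "max"):
            raise ValueError(f"unknown direction {direction!r} for {name}")
        value = observed.get(name)
        if value is None:
            shortfalls.append(f"{name}: no data collected")
        elif direction == "min" and value < threshold:
            shortfalls.append(f"{name}: {value} below target {threshold}")
        elif direction == "max" and value > threshold:
            shortfalls.append(f"{name}: {value} above limit {threshold}")
    return shortfalls


targets = {"recall": (0.8, "min"), "p95_latency_ms": (200, "max"), "mape_percent": (10.0, "max")}
observed = {"recall": 0.6, "p95_latency_ms": 150}
print(evaluate_against_targets(observed, targets))
# ['recall: 0.6 below target 0.8', 'mape_percent: no data collected']
```

Reporting missing data as a shortfall keeps a silently broken data feed from passing as "no problems found".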

Continuous Monitoring and Improvement Plan

A continuous monitoring and improvement plan is essential for ensuring the long-term success of AI systems. This plan should outline specific monitoring tasks, their frequency, and the responsible parties. The following is an example of such a plan:

- Daily Monitoring:

- Check infrastructure uptime and resource utilization.

- Monitor data ingestion rates and data quality.

- Weekly Monitoring:

- Review model accuracy and other key performance indicators.

- Analyze system logs for errors and anomalies.

- Monthly Monitoring:

- Conduct a comprehensive evaluation of the AI solution’s effectiveness.

- Review and update the monitoring plan as needed.

- Quarterly Monitoring:

- Perform a more in-depth analysis of model performance, exploring potential biases and areas for improvement.

- Assess the overall impact of the AI solution on business outcomes.
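A plan like this can also be kept as configuration so that tooling can list what is due on a given day. The sketch below assumes a particular calendar: weekly tasks on Mondays, monthly tasks on the 1st, and quarterly tasks on the 1st of January, April, July, and October. Those conventions, the dictionary layout, and the function name are all hypothetical. Responsible parties could be attached to each task in the same structure.

```python
from datetime import date

MONITORING_PLAN = {
    "daily": ["Check infrastructure uptime and resource utilization",
              "Monitor data ingestion rates and data quality"],
    "weekly": ["Review model accuracy and other key performance indicators",
               "Analyze system logs for errors and anomalies"],
    "monthly": ["Evaluate the AI solution's effectiveness",
                "Review and update the monitoring plan"],
    "quarterly": ["In-depth model analysis, including potential biases",
                  "Assess impact on business outcomes"],
}


def tasks_due(day: date, plan=MONITORING_PLAN) -> list:
    """Tasks due on `day` under the assumed calendar conventions above."""
    due = list(plan["daily"])
    if day.weekday() == 0:  # Monday
        due += plan["weekly"]
    if day.day == 1:
        due += plan["monthly"]
        if day.month in (1, 4, 7, 10):
            due += plan["quarterly"]
    return due


print(len(tasks_due(date(2024, 1, 3))))  # 2 (a Wednesday: daily tasks only)
print(len(tasks_due(date(2024, 2, 1))))  # 4 (a Thursday and the 1st: daily + monthly)
print(len(tasks_due(date(2024, 1, 1))))  # 8 (a Monday and a quarter start: everything)
```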

Talent and Skills Development

Successfully integrating AI and big data requires a workforce possessing a unique blend of technical and business acumen. Building this capability necessitates a strategic approach to talent acquisition, training, and retention, ensuring the organization has the expertise to leverage these technologies effectively and sustainably. Ignoring this crucial aspect can significantly hinder the success of any AI and big data initiative.

The successful implementation of AI and big data initiatives hinges on a skilled workforce capable of navigating the complexities of these technologies. This requires a multi-faceted strategy encompassing recruitment, training, and retention, focusing on both technical proficiency and business understanding. A lack of skilled personnel can lead to project delays, implementation failures, and ultimately, a poor return on investment.

Key Skills and Expertise for AI and Big Data Implementation

Organizations need professionals with a diverse skill set, spanning data science, engineering, and business domains. This includes data scientists proficient in machine learning algorithms, data engineers adept at building and maintaining data pipelines, and business analysts capable of interpreting data insights and translating them into actionable strategies. Furthermore, strong project management skills are crucial for overseeing the complex and iterative nature of AI and big data projects.

Specific skills include expertise in programming languages like Python and R, cloud computing platforms such as AWS and Azure, database management systems, and data visualization tools. A strong understanding of statistical modeling and machine learning techniques, including deep learning, is also paramount. Finally, experience with big data processing frameworks like Hadoop and Spark is highly valuable.

Strategies for Recruiting, Training, and Retaining Employees

Attracting top talent in the competitive AI and big data field requires a proactive approach. This involves offering competitive salaries and benefits, creating a positive and innovative work environment, and emphasizing opportunities for professional development and growth. Targeted recruitment campaigns focused on universities with strong data science programs and industry events can help identify and attract qualified candidates.

Collaborating with universities on research projects and offering internships provides a pipeline of skilled graduates. Retention strategies should focus on providing ongoing training and development opportunities, fostering a culture of collaboration and learning, and offering challenging and rewarding projects. Mentorship programs can help junior employees develop their skills and advance their careers, enhancing employee satisfaction and retention.

Regular performance reviews and feedback sessions provide opportunities for growth and address any skill gaps promptly.

Developing a Training Program for Upskilling Existing Employees

Upskilling existing employees is a cost-effective way to build the necessary expertise. A comprehensive training program should incorporate both theoretical and practical components, including workshops, online courses, and hands-on projects. The program should be tailored to the specific needs of the organization and the roles of the employees involved. For example, training for business analysts might focus on data interpretation and visualization, while training for data engineers might focus on cloud computing and big data technologies.

Utilizing a blended learning approach—combining online courses with in-person workshops and mentorship—can maximize learning effectiveness and accommodate diverse learning styles. Regular assessments and feedback throughout the training program ensure employees are progressing and acquiring the necessary skills. Access to online resources, industry conferences, and professional certifications can further enhance employee development. Finally, creating a culture of continuous learning and encouraging employees to explore new technologies and techniques is essential for maintaining a competitive edge.

Security and Infrastructure

Integrating AI and big data effectively requires a robust security framework and a powerful infrastructure capable of handling the computational demands of model training and deployment. Failure to address these aspects can lead to significant financial losses, reputational damage, and legal repercussions. Data breaches, cyberattacks, and system failures can compromise sensitive information and disrupt business operations, undermining the very benefits AI and big data are intended to provide.

The importance of a secure and scalable infrastructure cannot be overstated. AI models, particularly deep learning models, require significant computing power, storage capacity, and network bandwidth. The infrastructure must be designed to handle the volume and velocity of data processed, ensuring efficient model training and reliable deployment. Furthermore, the infrastructure needs to be resilient to failures, minimizing downtime and ensuring business continuity.

Data Security Measures

Protecting sensitive data used in AI and big data initiatives is paramount. A multi-layered approach is essential, encompassing technical, administrative, and physical security controls. This involves implementing robust access control mechanisms, data encryption both in transit and at rest, regular security audits, and comprehensive incident response plans. For example, a financial institution using AI for fraud detection must ensure that customer financial data is protected through encryption and access controls, preventing unauthorized access and data breaches.
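Access control in the fraud-detection example can be sketched as a deny-by-default role check. The role names and permissions below (`analyst`, `data_scientist`, `fraud_investigator`, `READ_RAW`, and so on) are hypothetical; a production system would typically delegate this to its platform's identity and access management service rather than hard-code a mapping.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_MASKED = auto()   # records with account numbers masked
    READ_RAW = auto()      # full customer financial records
    TRAIN_MODEL = auto()   # use data to train fraud-detection models
    EXPORT = auto()        # move data outside the governed environment


# Hypothetical role-to-permission mapping for a fraud-detection team.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "analyst": frozenset({Permission.READ_MASKED}),
    "data_scientist": frozenset({Permission.READ_MASKED, Permission.TRAIN_MODEL}),
    "fraud_investigator": frozenset({Permission.READ_MASKED, Permission.READ_RAW}),
}


def is_allowed(roles: set[str], needed: Permission) -> bool:
    """Deny by default: grant only if at least one assigned role carries the permission."""
    return any(needed in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)
```

An analyst requesting raw records is refused while a fraud investigator is allowed, and any role missing from the mapping receives no permissions at all.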

Infrastructure Requirements for AI

Robust infrastructure is crucial for successful AI implementation. This includes high-performance computing (HPC) clusters for model training, scalable storage solutions for managing large datasets, and a reliable network infrastructure to facilitate data transfer and model deployment. For instance, a retail company leveraging AI for personalized recommendations needs a scalable infrastructure to handle the large volume of customer data and efficiently train and deploy recommendation models.

Organizations must also weigh cloud-based against on-premises infrastructure, considering factors such as cost, scalability, and security.

Security Measures Against Data Breaches and Cyberattacks

A comprehensive security strategy is crucial to mitigate the risks of data breaches and cyberattacks. This involves several key measures:

- Data Encryption: Encrypting data at rest with strong algorithms (e.g., AES-256) and in transit with protocols such as TLS keeps stolen or intercepted data unreadable to anyone without the encryption keys.

- Access Control: Implementing robust access control mechanisms, such as role-based access control (RBAC), limits access to sensitive data based on user roles and responsibilities.

- Regular Security Audits: Conducting regular security audits and penetration testing helps identify vulnerabilities and weaknesses in the system before they can be exploited by attackers.

- Intrusion Detection and Prevention Systems (IDPS): Deploying IDPS helps detect and prevent malicious activities, such as unauthorized access attempts and data exfiltration.

- Incident Response Plan: Having a well-defined incident response plan ensures a coordinated and effective response to security incidents, minimizing damage and downtime.

- Employee Training: Educating employees about security best practices, such as phishing awareness and password management, reduces the risk of human error leading to security breaches.

- Data Loss Prevention (DLP): Implementing DLP tools helps prevent sensitive data from leaving the organization’s control, whether intentionally or unintentionally.
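The detection side of an IDPS often starts with simple threshold rules. As a minimal sketch, assuming login-failure events arrive with non-decreasing timestamps per source, the hypothetical `FailedLoginDetector` below flags any source address that exceeds a failure threshold within a sliding time window. Real IDPS products combine many such signatures with anomaly detection; the threshold and window values here are illustrative only.

```python
from collections import defaultdict, deque


class FailedLoginDetector:
    """Flag a source with more than `max_failures` failed logins within `window_seconds`."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # One deque of failure timestamps per source address.
        self._events: dict[str, deque[float]] = defaultdict(deque)

    def record_failure(self, source_ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if the source should be flagged."""
        events = self._events[source_ip]
        events.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_failures
```

Because each source keeps only the timestamps inside its window, memory stays bounded by the threshold rate rather than by total traffic.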
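A DLP rule is, at its core, a content inspection policy. The sketch below assumes the sensitive data of interest is payment card numbers: it finds 13 to 19 digit sequences with a regular expression and keeps only those that pass the Luhn checksum, which filters out ordinary numbers such as invoice IDs. The function names and the blocking policy are hypothetical; commercial DLP tools cover many more data types and channels.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, the check-digit scheme used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:       # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in `text` that look like valid card numbers."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


def should_block(text: str) -> bool:
    """Hypothetical outbound policy: block any message containing a card number."""
    return bool(find_card_numbers(text))
```

For example, a message containing the well-known test number `4111 1111 1111 1111` is blocked, while an invoice reference such as `1234 5678 9012 3456` fails the checksum and passes through.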

Final Wrap-Up: Best Practices for Integrating AI and Big Data in Business

Successfully integrating AI and big data requires a holistic approach that considers strategic planning, data quality, model selection, ethical implications, and ongoing monitoring. By focusing on these best practices, businesses can unlock the transformative potential of these technologies, driving innovation, improving efficiency, and gaining a competitive edge. Remember, this journey is iterative; continuous improvement and adaptation are key to realizing the full benefits of AI and big data integration.