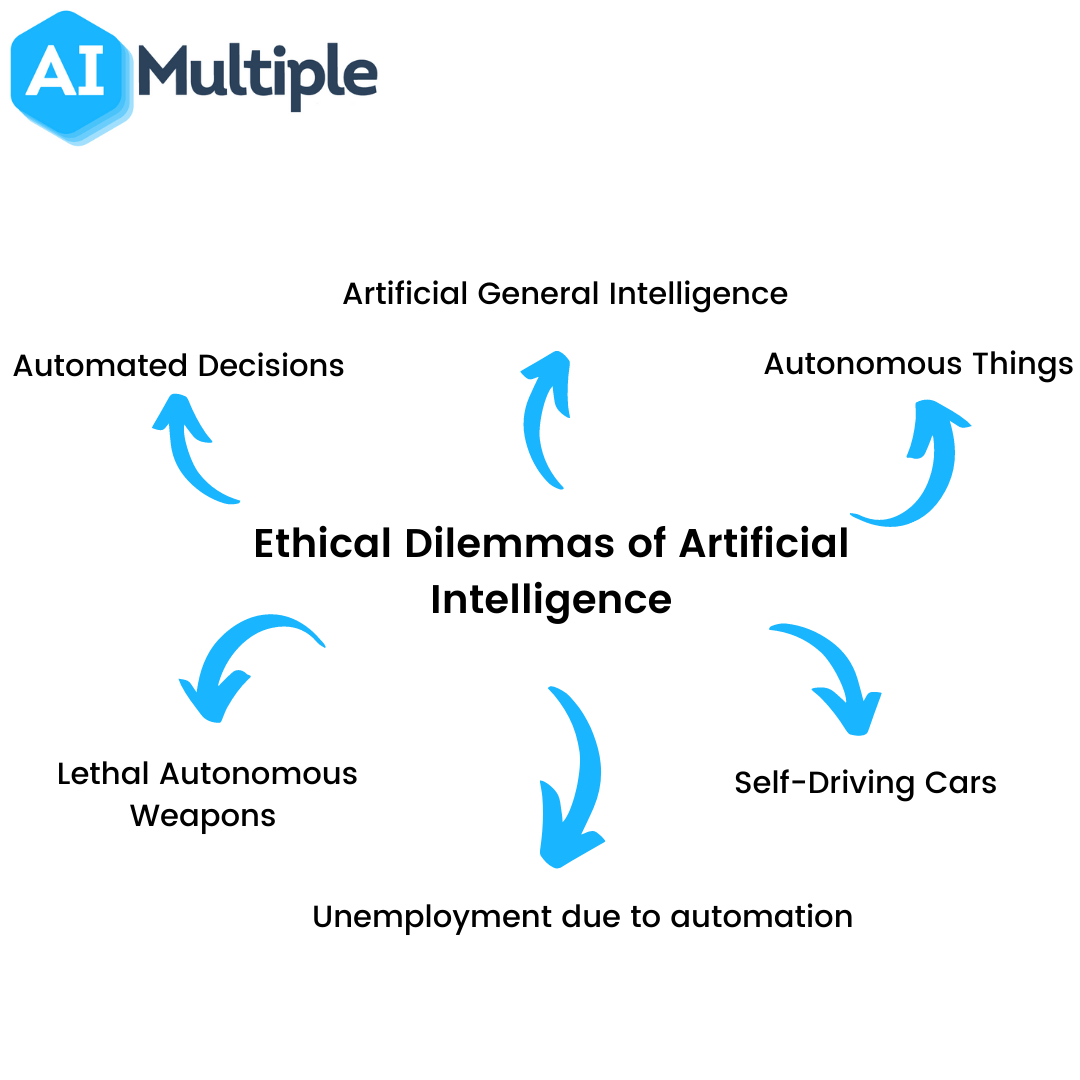

The ethical considerations of AI-generated design content are rapidly becoming a critical area of discussion. As artificial intelligence increasingly permeates the design world, creating stunning visuals and innovative solutions, we must grapple with the complex ethical implications. From copyright infringement and algorithmic bias to environmental impact and the displacement of human designers, the challenges are multifaceted and demand careful consideration.

This article examines the key ethical dilemmas and how to navigate them responsibly. It covers the legal complexities surrounding ownership of AI-generated designs, the potential for bias and discrimination in AI design tools, and the technology’s impact on the design profession itself. It also considers the role of transparency and explainability in AI design processes, the environmental footprint of AI-driven design, and the need for human oversight. The goal is a responsible, ethical approach to integrating AI into design, one that harnesses its benefits while mitigating potential harms.

Copyright and Ownership of AI-Generated Designs

The burgeoning field of AI-generated design presents significant legal challenges, particularly concerning copyright and ownership. The traditional understanding of copyright, rooted in human authorship, struggles to accommodate the output of algorithms trained on vast datasets. This ambiguity creates uncertainty for designers, businesses, and legal systems alike, necessitating a careful examination of the current legal landscape and potential future developments.

Legal Complexities Surrounding Copyright Ownership of AI-Generated Designs

The core issue revolves around the definition of “authorship.” Copyright law typically protects original works of authorship fixed in a tangible medium of expression. When an AI system, which lacks independent thought and intent, generates a design, it is unclear who, if anyone, holds the copyright. Jurisdictions answer differently. The United Kingdom, for example, treats the person who made the arrangements necessary to create a “computer-generated” work as its author, which may point to the user or the owner of the AI system. The United States Copyright Office, by contrast, requires human authorship and declines to register material generated without sufficient human creative contribution.

This lack of clarity creates significant risk for those using AI design tools, because ownership of the generated assets could be contested. The absence of a clear legal framework may also discourage widespread adoption of, and investment in, AI design technologies.

Scenarios of Copyright Infringement by AI-Generated Designs

AI models are trained on massive datasets of existing designs, images, and other creative works. If a model’s output closely resembles a copyrighted work without sufficient transformation, it can lead to copyright infringement. For example, a model trained heavily on one artist’s work might generate designs substantially similar to that artist’s copyrighted pieces, even if it was never explicitly instructed to replicate them.

This scenario highlights the need for robust safeguards that keep AI systems from producing infringing copies or derivative works. The sheer volume of designs AI can generate also multiplies the potential for widespread infringement.

Legal Frameworks Addressing AI-Generated Design Ownership

Currently, no universally accepted legal framework exists to definitively address the copyright ownership of AI-generated designs. However, various legal approaches are being explored. Some jurisdictions are considering amendments to existing copyright laws to explicitly address AI-generated works, perhaps by assigning ownership to the user or the developer of the AI system, based on criteria such as the level of user input or the AI’s level of autonomy.

Other jurisdictions are adopting a wait-and-see approach, allowing case law to develop and shape the legal landscape. The development of a comprehensive international legal framework is crucial to ensure consistency and predictability across borders. Existing intellectual property laws, like those surrounding patents and trade secrets, may also play a role in protecting specific aspects of AI-generated designs, though these may not offer the same scope of protection as copyright.

Hypothetical Legal Case: Ownership Dispute of an AI-Generated Design

| Plaintiff | Defendant | Claim | Evidence |
|---|---|---|---|
| ArtCo, a design firm | AI Designs Inc., developer of the AI design software | Copyright infringement and ownership dispute over a design generated using AI Designs Inc.’s software. ArtCo claims ownership based on its input and creative direction. | ArtCo’s design briefs, communication logs detailing the creative direction given to the AI, expert testimony on the level of human input in the design process, and evidence of the design’s commercial use by ArtCo. |

Bias and Discrimination in AI Design Tools

AI design tools, while offering incredible potential for efficiency and creativity, are not immune to the biases present in the data they are trained on. These biases, often subtle and unintentional, can significantly impact the generated content, perpetuating and even amplifying existing societal inequalities. Understanding these biases and developing strategies for mitigation is crucial for ensuring fairness and inclusivity in the design world.

AI design algorithms learn from vast datasets of existing designs, images, and text. If these datasets overrepresent certain demographics or perspectives while underrepresenting others, the resulting AI will likely reflect these imbalances. For example, an AI trained primarily on images of Western architecture may generate designs that consistently favor European styles, neglecting or misrepresenting architectural traditions from other parts of the world. This isn’t simply a matter of aesthetic preference; it reflects a deeper bias that marginalizes diverse cultural expressions.

Examples of Bias Perpetuating Societal Inequalities

The biases embedded in AI design tools can manifest in various ways, leading to the perpetuation of societal inequalities. For instance, an AI tasked with generating images of professionals might overwhelmingly produce images of men in suits, reflecting and reinforcing gender stereotypes in the workplace. Similarly, an AI designing websites might consistently produce layouts that assume users with full vision and fine motor control, overlooking the needs of individuals with disabilities.

This can lead to exclusionary design practices that hinder accessibility and inclusivity for a significant portion of the population. Consider an AI-generated marketing campaign that predominantly features light-skinned individuals, excluding and potentially alienating a large segment of the target audience. Such outcomes are not isolated incidents but symptoms of a broader issue that requires careful attention.

Strategies for Mitigating Bias in AI Design Tools

Addressing bias in AI design tools requires a multi-faceted approach. Firstly, careful curation of training datasets is paramount. This involves actively seeking out and incorporating diverse data sources to ensure a balanced representation of different demographics, cultures, and perspectives. Secondly, employing techniques like data augmentation can help increase the diversity of the training data. This might involve generating synthetic data to fill in gaps or using existing data to create variations that represent underrepresented groups.

Thirdly, incorporating human oversight in the design process is crucial. Human designers can review and adjust AI-generated outputs, identifying and correcting any instances of bias that might have slipped through. Finally, employing algorithmic fairness techniques, such as adversarial debiasing, can help minimize biases within the algorithms themselves.
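The dataset curation described above can be partly quantified. The following is a minimal sketch, assuming each training example carries a group label (the labels and counts below are hypothetical): it measures each group’s share of the data, then computes per-example weights so that every group contributes equally during training. This is inverse-frequency reweighting, a technique that complements rather than replaces curation and augmentation; it cannot add information the data simply lacks.

```python
from collections import Counter


def group_shares(labels):
    """Fraction of the dataset that each group label accounts for."""
    counts = Counter(labels)
    total = len(labels)
    return {group: n / total for group, n in counts.items()}


def balancing_weights(labels):
    """Per-example weights so that every group carries equal total weight.

    An example in group g gets total / (num_groups * count[g]), so each
    group's weights sum to total / num_groups and all weights sum to total.
    """
    counts = Counter(labels)
    total = len(labels)
    num_groups = len(counts)
    return [total / (num_groups * counts[label]) for label in labels]


# Hypothetical metadata: architectural tradition depicted in each training image.
labels = ["european"] * 8 + ["west_african"] * 1 + ["east_asian"] * 1
print(group_shares(labels))
weights = balancing_weights(labels)
print(weights)
```

Heavily upweighting a group with very few examples can make training overfit those few images, which is one reason collecting more representative data remains the first line of defense.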

Ethical Responsibilities of Developers in Creating Unbiased AI Design Tools

Developers bear a significant ethical responsibility in creating unbiased AI design tools. Their actions directly impact the fairness and inclusivity of the design process and its outcomes. Ignoring these considerations can have serious consequences, leading to the perpetuation of harmful stereotypes and the exclusion of marginalized communities. It is not enough to simply create a functional tool; developers must actively strive to create tools that are ethical and equitable.

- Prioritize diverse and representative training datasets.

- Employ data augmentation techniques to address data imbalances.

- Implement rigorous testing and evaluation procedures to identify and mitigate bias.

- Incorporate human oversight in the design process to review and correct AI outputs.

- Utilize algorithmic fairness techniques to minimize biases in algorithms.

- Promote transparency and accountability in the development and deployment of AI design tools.

- Engage with diverse stakeholders to ensure that the needs and perspectives of all users are considered.
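Rigorous testing can start with a simple audit of generated outputs. The sketch below assumes human reviewers have annotated a batch of generated images with the attribute being audited and that the team has agreed on target shares; the group names, counts, and the 0.8 flagging threshold are hypothetical choices, not an established standard for design tools.

```python
from collections import Counter


def representation_ratios(observed_labels, target_shares):
    """Ratio of each group's observed share to its target share (1.0 = on target)."""
    counts = Counter(observed_labels)
    total = len(observed_labels)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in target_shares.items()
    }


def flag_underrepresented(observed_labels, target_shares, threshold=0.8):
    """Groups whose representation ratio falls below the (assumed) threshold."""
    ratios = representation_ratios(observed_labels, target_shares)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit: reviewers annotated 20 generated images of "a professional".
annotations = ["man"] * 15 + ["woman"] * 5
target = {"man": 0.5, "woman": 0.5}
print(representation_ratios(annotations, target))  # {'man': 1.5, 'woman': 0.5}
print(flag_underrepresented(annotations, target))  # ['woman']
```

A flagged group is a prompt for human investigation, not an automatic verdict; small batches in particular can produce skewed ratios by chance.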

Transparency and Explainability in AI Design Processes

The increasing use of AI in design raises crucial questions about transparency and explainability. Understanding how AI arrives at its design choices is vital for trust, accountability, and responsible innovation. A lack of transparency can hinder user acceptance, limit opportunities for improvement, and potentially lead to unforeseen biases or errors. This section explores the importance of transparency in AI design, the challenges in achieving it, and various methods for enhancing explainability.

The Importance of Transparency in Understanding AI-Generated Design Content

Transparency in AI design processes allows designers and users to understand the rationale behind the AI’s suggestions. This understanding matters for several reasons. First, it fosters trust: users who understand the process are more likely to accept and use the AI’s output. Second, it enables effective collaboration, because designers can see where the AI excels and where human intervention is necessary. Third, it facilitates debugging and improvement: by analyzing the AI’s decision-making process, developers can identify and correct biases or flaws in the algorithm. Finally, transparency is essential for accountability. If the AI makes a mistake, understanding its reasoning helps determine the cause and prevent future errors.

Without transparency, AI design tools become “black boxes,” hindering progress and raising concerns about potential misuse.

Challenges in Achieving Full Transparency in AI Design Tool Decision-Making Processes

Achieving complete transparency in AI design tools presents significant challenges. Many AI models, particularly deep learning models, operate with immense complexity. Their decision-making processes often involve intricate interactions between numerous parameters and layers, making it difficult to pinpoint the exact reasons behind a specific design choice. Furthermore, the sheer volume of data used to train these models can make it computationally expensive and impractical to fully trace the influence of individual data points on the final output.

Data privacy concerns also restrict the extent to which the training data and internal model workings can be openly shared. The inherent “non-linearity” of many AI algorithms further complicates the task of explaining their outputs in a clear and concise manner. Finally, the lack of standardized methods for explaining AI decision-making hinders the development of universally accepted metrics for evaluating transparency.

Methods for Increasing the Explainability of AI-Generated Designs

Several methods can improve the explainability of AI-generated designs. One approach involves developing more interpretable AI models. This involves designing models whose internal workings are easier to understand and whose decisions can be readily traced. Another approach focuses on post-hoc explainability techniques, which analyze the AI’s output to infer the underlying reasoning. These techniques might involve visualizing the AI’s attention patterns, highlighting the features that most influenced its decision, or generating textual explanations that describe the design choices.

Furthermore, providing users with tools to interact with and manipulate the AI’s parameters can enhance transparency, allowing them to understand how different inputs affect the output. Finally, clear and accessible documentation explaining the AI’s capabilities, limitations, and underlying assumptions is crucial for building user trust and promoting responsible use.
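One of the post-hoc techniques mentioned above, highlighting the features that most influenced a decision, can be sketched with occlusion: replace one input feature at a time with a neutral baseline and measure how much the model’s score drops. The scoring function here is a hypothetical stand-in for a real design model; its feature names and weights are invented for illustration.

```python
def occlusion_attribution(score_fn, features, baseline):
    """Post-hoc attribution by occlusion: the score drop when each feature is
    replaced by its baseline value while all other features stay fixed."""
    full_score = score_fn(features)
    attributions = {}
    for name in features:
        occluded = dict(features)
        occluded[name] = baseline[name]
        attributions[name] = full_score - score_fn(occluded)
    return attributions


# Hypothetical black-box scorer standing in for a design model's ranking of a
# layout. Note the interaction term between contrast and symmetry.
def layout_score(f):
    return 2.0 * f["contrast"] + 0.5 * f["whitespace"] + 1.0 * f["contrast"] * f["symmetry"]


design = {"contrast": 0.9, "whitespace": 0.4, "symmetry": 0.5}
baseline = {"contrast": 0.0, "whitespace": 0.0, "symmetry": 0.0}
attr = occlusion_attribution(layout_score, design, baseline)
for name, value in sorted(attr.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:+.2f}")
```

Because the toy scorer includes an interaction between contrast and symmetry, the three attributions (2.25, 0.45, and 0.20) sum to 2.90, while the full design scores only 2.45 above the all-baseline design. This illustrates why post-hoc explanations can be incomplete or misleading when features interact.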

Comparison of Approaches to Ensuring Transparency in AI Design Systems

| Approach | Advantages | Disadvantages |
|---|---|---|
| Interpretable AI Models | Easier to understand the reasoning; simpler debugging and bias detection. | May sacrifice accuracy for interpretability; limited applicability to complex tasks. |
| Post-hoc Explainability Techniques | Applicable to a wide range of models; can provide insights even for complex models. | Explanations may be incomplete or inaccurate; interpretation can be subjective. |
| Interactive Design Tools | Allows users to explore the design space and understand the AI’s behavior; enhances user control and engagement. | Requires careful design to avoid overwhelming users; may not be suitable for all users. |
| Comprehensive Documentation | Provides crucial context for understanding the AI’s capabilities and limitations; promotes responsible use. | Requires significant effort to create and maintain; may not be sufficient for complete transparency. |

The Impact of AI on the Design Profession

The integration of artificial intelligence (AI) into the design process is rapidly transforming the industry, presenting both opportunities and challenges for human designers. While concerns about job displacement are valid, the reality is more nuanced, suggesting a shift in roles and skillsets rather than outright replacement. AI’s impact will redefine the designer’s function, demanding adaptability and a focus on uniquely human skills.

Potential Displacement of Human Designers

The automation potential of AI design tools raises legitimate concerns about job displacement. AI can generate variations of designs quickly and efficiently, potentially reducing the need for human designers in tasks involving repetitive or formulaic design elements. However, complete replacement is unlikely in the near future. The most impactful AI tools currently serve as assistants, augmenting rather than replacing human designers.

For example, AI can rapidly generate multiple logo options based on specified parameters, but the final selection, refinement, and conceptualization still require human judgment and creative vision. The extent of displacement will depend on the specific design field and the rate of AI tool development and adoption. While some entry-level design positions may be affected, roles requiring complex problem-solving, strategic thinking, and nuanced creative direction are less susceptible to automation.

New Roles and Skills for Designers

The evolving landscape requires designers to acquire new skills. Rather than focusing solely on execution, designers will need to master AI tool management, data analysis, and prompt engineering to use AI capabilities effectively. Understanding the limitations and biases of AI algorithms is crucial for ensuring ethical and responsible design outcomes. Furthermore, human-centered design principles, user research, and strategic thinking will become even more vital as designers focus on guiding and refining AI-generated outputs.

New roles like “AI Design Strategist” or “AI Design Integrator” might emerge, emphasizing the oversight and human direction of AI-driven design processes.

AI Augmenting Human Creativity and Improving Design Workflows

AI tools can significantly enhance design workflows and augment human creativity. By automating tedious tasks like image resizing, color palette generation, and initial design iterations, AI frees designers to focus on higher-level creative tasks, such as conceptualization, storytelling, and user experience design. For example, AI-powered tools can quickly generate multiple design options, allowing designers to explore a wider range of possibilities and potentially discover innovative solutions they might not have considered otherwise.

Moreover, AI can provide valuable data-driven insights into user preferences and trends, informing design decisions and enhancing the effectiveness of design solutions.

Collaborative Project: Human Designer and AI Design Tool

Consider a project to redesign a company website. A human designer, Sarah, is tasked with leading the project. She uses an AI design tool, “DesignSpark,” to assist. Sarah defines the project goals, target audience, and brand guidelines. She then uses DesignSpark to generate several initial website layouts based on her input.

DesignSpark also analyzes competitor websites and identifies design trends relevant to the project. Sarah reviews the AI-generated layouts, providing feedback and refining the designs based on her creative vision and strategic understanding of the client’s needs. She uses DesignSpark to test different color palettes and typography options, iterating until she achieves a visually appealing and user-friendly design that aligns with the brand identity.

Finally, Sarah verifies the website’s accessibility and functionality, work that still depends on human judgment even where automated checks can assist. In this scenario, Sarah’s expertise in user experience, strategic design thinking, and brand management directs the AI’s technical capabilities, resulting in a superior design outcome. DesignSpark accelerates the process, explores a wider design space, and provides data-driven insights, while Sarah provides the creative direction, critical evaluation, and human touch.

Environmental Considerations of AI-Generated Design

The burgeoning field of AI-generated design offers exciting possibilities for creativity and efficiency, but its environmental impact is a crucial consideration. The energy-intensive processes involved in training and running AI models, coupled with the manufacturing implications of the designs they produce, raise significant sustainability concerns. Addressing these concerns is vital for responsible innovation in this rapidly evolving sector.

Energy Consumption of AI Design Models

Training large AI design models requires immense computational power and therefore substantial energy. The demand stems from the massive training datasets, the complexity of the models, and the powerful hardware (typically GPUs) needed to process them. By some estimates, training a single large image-generation model can consume as much electricity as several households use in a year, though figures vary widely with model size, hardware, and data center efficiency.

This energy use contributes to greenhouse gas emissions, particularly where the electricity comes from fossil fuels. Generating designs with a trained model (inference) uses far less energy per request than training, but because it is repeated at scale, its cumulative demand adds considerably to the overall footprint. Ongoing research focuses on more energy-efficient algorithms and hardware to mitigate this impact.
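The footprint of a training run can be estimated with simple arithmetic: energy is the number of accelerators times their average power draw times the run’s duration, scaled by the data center’s power usage effectiveness (PUE); emissions are that energy times the grid’s carbon intensity. Every input below is a hypothetical placeholder, not a measurement of any real model, and the sketch assumes each GPU draws constant power for the whole run.

```python
def training_energy_kwh(num_gpus, watts_per_gpu, hours, pue):
    """Facility energy for a run: accelerator power x time x PUE overhead, in kWh."""
    return num_gpus * watts_per_gpu * hours * pue / 1000.0


def emissions_kg(energy_kwh, grid_kg_per_kwh):
    """Operational CO2-equivalent emissions for a given grid carbon intensity."""
    return energy_kwh * grid_kg_per_kwh


# All inputs are hypothetical placeholders, not figures for any real model.
energy = training_energy_kwh(num_gpus=64, watts_per_gpu=400, hours=720, pue=1.2)
print(f"{energy:,.0f} kWh")
print(f"{emissions_kg(energy, grid_kg_per_kwh=0.4):,.0f} kg CO2e")

household_kwh_per_year = 10_000  # placeholder; substitute a figure for your region
print(f"~{energy / household_kwh_per_year:.1f} household-years of electricity")
```

With these placeholders the run uses about 22,118 kWh. The estimate leaves out the embodied emissions of manufacturing the hardware and the cumulative energy of inference, both of which a fuller accounting would include.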

Environmental Impact of AI-Generated Design Manufacturing

The environmental consequences extend beyond the digital realm. AI-generated designs often translate into physical products, and their manufacturing processes contribute significantly to environmental impact. For instance, an AI-generated design for a plastic product will have a different environmental footprint than one designed for sustainable bamboo. The choice of materials, manufacturing techniques, transportation, and end-of-life disposal all influence the overall environmental burden.

Consider the example of AI-designed furniture: using recycled materials and minimizing waste in the manufacturing process can significantly reduce the carbon footprint compared to using virgin materials and generating substantial waste. The lifecycle assessment of AI-generated designs, therefore, becomes crucial for responsible manufacturing.

Strategies for Minimizing the Environmental Footprint of AI Design Tools and Processes

Minimizing the environmental impact of AI design necessitates a multi-pronged approach. This includes developing more energy-efficient algorithms, powering the data centers used for AI training with renewable energy, and optimizing the design process itself to reduce material usage and waste. Alternative hardware, such as neuromorphic computing, which borrows from the brain’s architecture in pursuit of greater energy efficiency, offers promising avenues for future development.

Furthermore, designing AI-generated products for disassembly and recyclability significantly reduces their environmental burden at the end of their lifecycle. Adopting a circular economy approach that prioritizes reuse and recycling is crucial.

Incorporating Sustainable Design Principles into AI-Generated Content

Sustainable design principles can be integrated into AI-generated content by addressing material selection, energy efficiency, and lifecycle impact throughout the design process. Practical measures include:

- Prioritizing the use of recycled and sustainably sourced materials.

- Optimizing designs for minimal material usage and reduced waste generation.

- Designing for durability and longevity to extend product lifespan.

- Incorporating design for disassembly and recyclability for easier end-of-life management.

- Considering the transportation and logistics aspects to minimize environmental impact.

- Utilizing AI to optimize energy efficiency in the final product design.

- Implementing life cycle assessment tools within the AI design workflow to evaluate environmental impacts.
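The last item can be approximated inside an AI design workflow as a screening step that sums estimated impacts across lifecycle phases and ranks candidate designs. This is a minimal sketch: the phase list, the `Candidate` class, the `rank_by_footprint` function, and every number are hypothetical, and a real assessment would rely on proper LCA data and methods, such as those standardized in ISO 14040 and ISO 14044.

```python
from dataclasses import dataclass

# Hypothetical lifecycle phases used for screening.
PHASES = ("materials", "manufacturing", "transport", "use", "end_of_life")


@dataclass
class Candidate:
    name: str
    kg_co2e: dict  # estimated impact per lifecycle phase (hypothetical numbers)

    def total(self) -> float:
        # Phases with no estimate count as zero in the total...
        return sum(self.kg_co2e.get(phase, 0.0) for phase in PHASES)

    def missing_phases(self):
        # ...but are reported separately so reviewers can see the gap.
        return [phase for phase in PHASES if phase not in self.kg_co2e]


def rank_by_footprint(candidates):
    """Lowest estimated lifecycle footprint first."""
    return sorted(candidates, key=lambda c: c.total())


# Two hypothetical AI-generated chair designs differing mainly in material.
chair_virgin = Candidate("chair_virgin_plastic", {
    "materials": 12.0, "manufacturing": 5.0, "transport": 2.0, "use": 0.0, "end_of_life": 3.0})
chair_recycled = Candidate("chair_recycled", {
    "materials": 4.0, "manufacturing": 5.5, "transport": 2.0, "use": 0.0, "end_of_life": 1.0})

for c in rank_by_footprint([chair_virgin, chair_recycled]):
    print(c.name, c.total(), c.missing_phases())
```

Reporting missing phases separately keeps an incomplete estimate from quietly passing as a low-impact design.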

The Role of Human Oversight in AI Design

The ethical and effective deployment of AI in design hinges critically on robust human oversight. While AI tools offer unprecedented speed and potential for innovation, their inherent limitations and potential for bias necessitate careful human guidance and control throughout the design process. Without it, the risks associated with AI-generated designs become amplified, potentially leading to harmful or unethical outcomes.

Importance of Human Oversight in Ensuring Ethical AI Design

Human oversight is paramount in ensuring AI design tools are used ethically. It serves as a crucial safeguard against biases embedded within the algorithms, preventing the perpetuation of harmful stereotypes or discriminatory practices. Furthermore, human judgment is essential in navigating complex ethical dilemmas that AI systems, lacking nuanced understanding of social context and human values, may struggle to address.

For instance, an AI might optimize a product design for maximum efficiency while disregarding negative environmental impacts or overlooking accessibility needs for users with disabilities, considerations a human designer is more likely to prioritize. Ultimately, human oversight makes accountability possible and helps keep AI-driven design aligned with human values and societal well-being.

Potential Risks Associated with a Lack of Human Oversight in AI Design

The absence of human oversight in AI design processes introduces several significant risks. One major concern is the amplification of existing biases present in the training data. AI models learn from the data they are fed, and if this data reflects societal biases (e.g., gender or racial biases in image datasets), the AI will likely perpetuate and even exacerbate these biases in its generated designs.

This could manifest in the creation of products or services that unfairly disadvantage certain groups. Another risk is the lack of accountability. Without human review, it becomes difficult to determine responsibility when AI-generated designs result in unintended negative consequences, such as safety hazards or environmental damage. Furthermore, the “black box” nature of some AI algorithms can make it difficult to understand how a particular design was generated, hindering efforts to identify and correct errors or biases.

A lack of human oversight also increases the risk of producing designs that are aesthetically unappealing, impractical, or simply nonsensical, undermining the overall quality and value of the design process.

Methods for Implementing Effective Human Oversight in AI Design Processes

Implementing effective human oversight requires a multi-faceted approach. This involves carefully selecting and curating training data to minimize bias, employing explainable AI (XAI) techniques to enhance transparency in the decision-making processes of AI design tools, and establishing clear guidelines and protocols for human review and approval. Regular audits of the AI system’s performance and output are also crucial to detect and correct any emerging biases or errors.

Moreover, designers need ongoing training and education to effectively collaborate with and supervise AI design tools. This training should focus on understanding the capabilities and limitations of AI, identifying potential biases, and developing critical evaluation skills for AI-generated designs. Finally, establishing clear lines of responsibility and accountability is essential to ensure that humans remain ultimately responsible for the outcomes of AI-driven design processes.

A System for Human Review and Approval of AI-Generated Design Content

The following steps outline a system for human review and approval of AI-generated design content:

Step 1: AI Design Generation: The AI tool generates design options based on user input and specified parameters.

Step 2: Initial Human Review: A designer reviews the AI-generated options for initial feasibility, adherence to design briefs, and obvious aesthetic or functional flaws. This step aims to quickly eliminate clearly unsuitable designs.

Step 3: Bias and Ethical Assessment: A dedicated team or individual reviews the remaining designs for potential biases, ethical concerns, and compliance with relevant regulations. This step scrutinizes the design for unintended consequences and potential discriminatory outcomes.

Step 4: Refinement and Iteration: Based on the feedback from the previous steps, the designer refines the selected designs using the AI tool or through manual adjustments. This iterative process allows for human creativity and expertise to enhance the AI-generated designs.

Step 5: Final Approval: A senior designer or a designated review board provides final approval of the selected and refined design. This step ensures quality control and alignment with organizational standards.

Step 6: Documentation and Archiving: The entire design process, including AI inputs, human interventions, and approval steps, is meticulously documented and archived for transparency and accountability.
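The six steps above can be sketched as a small state machine in which every human decision is logged, covering the documentation requirement of Step 6. The stage names, the transition rules (for instance, that final reviewers may send a design back for refinement), and the record fields are hypothetical choices for illustration, not a prescribed implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical stage names mirroring Steps 1-6; the transition rules are assumptions.
TRANSITIONS = {
    "generated": {"initial_review"},                  # Step 1 output enters Step 2
    "initial_review": {"ethics_review", "rejected"},  # Step 2
    "ethics_review": {"refinement", "rejected"},      # Step 3
    "refinement": {"final_approval"},                 # Step 4
    "final_approval": {"archived", "refinement"},     # Step 5: approve or send back
}
# "archived" (Step 6) and "rejected" are terminal: no outgoing transitions.


@dataclass
class DesignRecord:
    design_id: str
    prompt: str  # the AI input, kept for the audit trail
    stage: str = "generated"
    log: list = field(default_factory=list)

    def advance(self, to_stage: str, reviewer: str, note: str = "") -> None:
        """Move to the next stage if the rules allow it, logging who decided and why."""
        allowed = TRANSITIONS.get(self.stage, set())
        if to_stage not in allowed:
            raise ValueError(f"{self.stage} -> {to_stage} is not an allowed transition")
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "from": self.stage,
            "to": to_stage,
            "reviewer": reviewer,
            "note": note,
        })
        self.stage = to_stage


# Hypothetical walk-through of one design; IDs and reviewer names are invented.
record = DesignRecord("logo-042", prompt="minimal logo using the brand palette")
record.advance("initial_review", reviewer="designer_a")
record.advance("ethics_review", reviewer="designer_a", note="meets the brief")
record.advance("refinement", reviewer="ethics_panel", note="no bias concerns found")
record.advance("final_approval", reviewer="designer_a", note="typography adjusted")
record.advance("archived", reviewer="senior_designer")
print(record.stage, len(record.log))  # archived 5
```

Encoding the allowed transitions explicitly means a design cannot skip the bias and ethical assessment on its way to approval; an attempted shortcut raises an error instead of passing silently.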

Closing Summary

The ethical considerations surrounding AI-generated design content are not merely academic exercises; they are vital for shaping a future where technology and human creativity coexist harmoniously. Addressing copyright concerns, mitigating algorithmic biases, promoting transparency, and acknowledging the environmental impact are crucial steps towards responsible innovation. By proactively engaging with these ethical challenges, designers, developers, and policymakers can ensure that AI enhances, rather than undermines, the creative process and its positive societal contributions.

The future of design hinges on our ability to navigate these complexities ethically and thoughtfully.