Implementing AI in large-scale database systems is becoming harder as organizations lean on artificial intelligence for data-driven decision-making. The sheer volume and velocity of data in modern enterprises, coupled with the computational demands of sophisticated AI models, present significant hurdles. Navigating these challenges requires a strategic approach encompassing data management, infrastructure optimization, model selection, integration with existing systems, and robust security measures.

This exploration delves into the key obstacles and potential solutions, providing a comprehensive overview for navigating this complex landscape.

From efficient data ingestion and preprocessing techniques to mitigating the risks associated with data security and privacy, the path to successful AI implementation is paved with careful planning and execution. This involves not only technological considerations but also the crucial aspect of talent acquisition and development, ensuring the right expertise is in place to manage and maintain these complex systems.

The following sections will examine these challenges in detail, offering insights and strategies for overcoming them.

Data Volume and Velocity

The integration of AI into large-scale database systems presents unique challenges, particularly concerning the sheer volume and velocity of data. Massive datasets, often exceeding petabytes or even exabytes in size, demand sophisticated strategies for efficient processing to ensure AI model training and inference remain viable. The continuous influx of real-time data streams further complicates this landscape, requiring systems capable of handling high-throughput data ingestion and immediate analysis.

The impact of massive datasets on AI model training and inference is multifaceted.

Larger datasets generally lead to more accurate and robust models, but training on them requires significantly more computational resources and time. Inference, the process of using a trained model to make predictions on new data, also becomes more expensive as the volume of data to be scored grows. Furthermore, the sheer size of the data can lead to storage bottlenecks and increased latency, hindering the overall performance of the AI system.

This necessitates efficient data management strategies to balance accuracy and performance.

Real-time Data Stream Processing for AI

Processing real-time data streams for AI applications presents significant hurdles. High-velocity data streams, such as those generated by sensor networks, financial transactions, or social media platforms, require immediate processing to extract timely insights. Traditional batch processing methods are inadequate for this task. Real-time processing necessitates the use of technologies such as Apache Kafka, Apache Flink, or Spark Streaming, which can handle high-throughput data ingestion and perform low-latency computations.

Furthermore, efficient data filtering and aggregation techniques are crucial to reduce the volume of data processed while preserving relevant information. Consider, for example, a fraud detection system that needs to analyze millions of transactions per second. Real-time processing is essential to identify and prevent fraudulent activities in a timely manner. Failure to process data in real-time could result in significant financial losses.
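The filtering and aggregation step described above can be sketched without any streaming framework. The following is a minimal illustration in plain Python, not a Kafka or Flink job; the event layout `(timestamp_seconds, account_id, amount)`, the function name, and the thresholds are assumptions made for the example.

```python
from collections import defaultdict

def tumbling_window_totals(events, window_seconds=60, min_amount=0.0):
    """Sum amounts per (window start, account) over fixed, non-overlapping windows.

    events: iterable of (timestamp_seconds, account_id, amount) tuples
    (a hypothetical layout chosen for this sketch).
    """
    totals = defaultdict(float)
    for ts, account, amount in events:
        if amount < min_amount:
            continue  # filtering: drop events below the threshold early
        window_start = int(ts // window_seconds) * window_seconds
        totals[(window_start, account)] += amount  # aggregation per window
    return dict(totals)

events = [
    (0, "acct-1", 20.0),
    (30, "acct-1", 35.0),
    (61, "acct-1", 500.0),
    (62, "acct-2", 5.0),   # filtered out by min_amount below
]
totals = tumbling_window_totals(events, window_seconds=60, min_amount=10.0)
```

A real stream processor would additionally handle late or out-of-order events and state that does not fit in memory, which this sketch ignores.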

Data Ingestion and Preprocessing Strategy

An effective data ingestion and preprocessing strategy is paramount for efficient AI model training and inference in large databases. This strategy must address scalability and performance to handle massive datasets and high-velocity data streams. A layered approach, incorporating techniques like data staging, data cleaning, feature engineering, and data transformation, is typically employed. Data staging involves temporarily storing incoming data in a readily accessible format before further processing.

Data cleaning addresses inconsistencies, missing values, and errors. Feature engineering involves creating new features from existing ones to improve model performance. Data transformation involves converting data into a format suitable for the chosen AI model. For instance, a system might use a distributed message queue (like Kafka) for initial data ingestion, followed by a distributed processing framework (like Spark) for data cleaning and feature engineering, before loading the processed data into a database optimized for AI workloads.

Scalability is ensured by distributing the processing across multiple nodes, while performance is optimized by using efficient algorithms and data structures.
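As a rough illustration of the layered approach, the sketch below chains cleaning, feature engineering, and transformation over a small in-memory batch of staged rows. The column names (`id`, `amount`), the median fill, and the `is_large` cutoff are assumptions for the example; in a production pipeline each stage would typically run in a distributed framework rather than on Python lists.

```python
from statistics import median

def clean(rows):
    """Cleaning: drop rows without an id, fill missing amounts with the median."""
    rows = [r for r in rows if r.get("id") is not None]
    known = [r["amount"] for r in rows if r.get("amount") is not None]
    fill = median(known) if known else 0.0
    return [
        {**r, "amount": r["amount"] if r.get("amount") is not None else fill}
        for r in rows
    ]

def add_features(rows):
    """Feature engineering: derive a flag from an existing column."""
    return [{**r, "is_large": r["amount"] >= 100.0} for r in rows]

def scale(rows):
    """Transformation: min-max scale amount into [0, 1]."""
    if not rows:
        return []
    amounts = [r["amount"] for r in rows]
    lo, hi = min(amounts), max(amounts)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, "amount_scaled": (r["amount"] - lo) / span} for r in rows]

# Staged rows, as they might arrive from the ingestion layer.
staged = [
    {"id": 1, "amount": 50.0},
    {"id": 2, "amount": None},
    {"id": None, "amount": 10.0},
    {"id": 3, "amount": 150.0},
]
processed = scale(add_features(clean(staged)))
```

Keeping each stage a pure function of its input makes the stages easy to test in isolation and to move into a distributed framework later.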

Data Partitioning and Sharding Techniques

Optimizing AI model performance often necessitates employing effective data partitioning and sharding techniques. Data partitioning divides a large dataset into smaller, manageable subsets, allowing for parallel processing. Sharding, a specific form of partitioning, distributes data across multiple database servers. Different partitioning strategies exist, including horizontal partitioning (dividing rows among tables or servers), vertical partitioning (dividing columns among tables), and, as ways of assigning rows to horizontal partitions, range partitioning (based on a specific attribute’s value range) and hash partitioning (based on a hash of a key).

The choice of partitioning strategy depends on the specific characteristics of the data and the AI model’s requirements. For example, range partitioning might be suitable for time-series data, while hash partitioning might be appropriate for uniformly distributed data. Sharding distributes the load across multiple servers, improving scalability and reducing latency. A well-designed sharding strategy minimizes data transfer between servers, ensuring efficient model training and inference.

For instance, a recommendation system might use sharding to distribute user data across multiple servers, allowing for faster retrieval of user preferences and recommendations.
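The two routing rules mentioned above, hash and range partitioning, can be sketched in a few lines. The function names, the shard count, and the month-based boundaries are hypothetical; real databases expose partitioning through their own DDL rather than application code like this.

```python
import hashlib
from bisect import bisect_right

def hash_shard(key: str, num_shards: int) -> int:
    """Hash partitioning: the same key always maps to the same shard.

    A cryptographic digest is used instead of Python's built-in hash(),
    which is randomized per process for strings.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def range_shard(value, upper_bounds) -> int:
    """Range partitioning: upper_bounds are sorted, exclusive upper limits."""
    return bisect_right(upper_bounds, value)

# Hypothetical time-series layout: "YYYY-MM" keys split into three ranges.
bounds = ["2024-04", "2024-07"]
user_shard = hash_shard("user-42", num_shards=4)
month_partition = range_shard("2024-05", bounds)
```

Note that plain modulo hashing remaps most keys when the shard count changes; schemes such as consistent hashing are commonly used to limit that data movement.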

Computational Resources

Implementing AI within large-scale database systems demands substantial computational resources, significantly impacting both initial deployment costs and ongoing operational expenses. The scale of data processing required for AI algorithms, particularly deep learning models, necessitates a careful evaluation of hardware and software needs, alongside strategic resource optimization to ensure efficiency and cost-effectiveness.

Hardware and Software Requirements for AI in Large-Scale Databases

Hardware Requirements

The hardware infrastructure supporting AI in large databases must handle the intensive computational demands of training and deploying AI models. This typically involves high-performance computing (HPC) clusters comprising numerous servers equipped with powerful CPUs, GPUs, and ample RAM. The number of servers and their specifications directly correlate with the size and complexity of the database and the AI models being used.

For instance, processing terabytes of data for a complex natural language processing (NLP) model might require a cluster with hundreds of servers, each containing multiple high-end GPUs with substantial VRAM. In contrast, a simpler model operating on a smaller dataset might only need a handful of servers with less powerful hardware. Storage infrastructure also plays a crucial role, requiring high-speed, high-capacity storage solutions like NVMe SSDs and distributed file systems to handle the massive data volumes.

Network infrastructure must also be robust and high-bandwidth to facilitate efficient data transfer between servers within the cluster.

Software Requirements

Beyond hardware, robust software is essential. This includes a distributed database management system (DBMS) capable of handling the scale and complexity of the data, AI frameworks like TensorFlow or PyTorch for model development and training, and specialized libraries for data preprocessing, feature engineering, and model deployment. Furthermore, robust monitoring and management tools are necessary to track resource utilization, identify bottlenecks, and ensure system stability.

The choice of software significantly influences the overall performance and cost-effectiveness of the AI implementation. Open-source options can reduce licensing costs, but may require more specialized expertise for maintenance and optimization.

Cost Implications of AI Infrastructure at Scale

Deploying and maintaining AI infrastructure for large-scale database systems represents a substantial investment. Costs include the procurement and maintenance of hardware, software licenses, cloud services (if applicable), and the salaries of specialized personnel to manage and operate the system. The initial capital expenditure for hardware can be significant, particularly for HPC clusters with numerous high-end servers. Ongoing operational expenses include power consumption, cooling, maintenance contracts, and personnel costs.

For example, a large enterprise deploying a sophisticated AI system for fraud detection might invest millions of dollars in initial infrastructure and incur hundreds of thousands of dollars annually in operational expenses. Careful planning and cost optimization are critical to managing these expenses effectively.

Optimizing Resource Utilization

Strategies for optimizing resource utilization are essential to mitigate costs and improve performance. Parallelization techniques, which involve distributing the computational workload across multiple processors or servers, are crucial for handling large datasets and complex models efficiently. Distributed computing frameworks like Apache Spark and Hadoop provide robust tools for parallelizing data processing tasks. Furthermore, techniques like model compression, quantization, and pruning can reduce the computational resources required for model inference without significantly impacting accuracy.

Efficient data management practices, including data compression and optimized query processing, also contribute to improved resource utilization. For instance, using techniques like data sharding and efficient indexing can significantly reduce the time required to access and process data, thereby optimizing resource usage.
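The parallelization pattern described above (split the data, compute partial results per partition, then merge) can be illustrated with the standard library. This is only a sketch of the pattern: it uses a thread pool so that it runs anywhere, and in CPython threads do not speed up CPU-bound work, so a real deployment would rely on processes or a framework such as Spark. The function names are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_stats(chunk):
    """Per-partition work: return (count, sum) so partial results can be merged."""
    return len(chunk), sum(chunk)

def parallel_mean(values, num_partitions=4, max_workers=4):
    """Compute a mean by merging per-partition (count, sum) pairs."""
    size = max(1, -(-len(values) // num_partitions))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    count = sum(c for c, _ in partials)
    total = sum(s for _, s in partials)
    return total / count if count else 0.0

mean = parallel_mean(list(range(1, 101)))
```

Returning mergeable partial aggregates, rather than raw rows, is what keeps the data transferred between workers small.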

Cloud-Based vs. On-Premise Solutions: A Cost-Benefit Analysis

The decision between cloud-based and on-premise solutions for AI deployment in large databases involves a trade-off between cost, scalability, security, and control.

| Factor | Cloud-Based | On-Premise |
|---|---|---|
| Cost | Pay-as-you-go model; potentially lower upfront costs but higher long-term expenses depending on usage. | High upfront investment in hardware and software; potentially lower long-term costs with efficient resource management. |
| Scalability | Highly scalable; easily adjust resources based on demand. | Scalability requires significant upfront planning and investment; expansion can be complex and time-consuming. |
| Security | Relies on cloud provider’s security measures; potential concerns regarding data privacy and compliance. | Greater control over security measures; potential for enhanced data protection but requires significant investment in security infrastructure. |
| Control | Limited control over infrastructure and software; dependence on cloud provider. | Complete control over infrastructure and software; greater flexibility and customization options. |

Model Complexity and Accuracy

Integrating sophisticated AI models into large-scale database systems presents significant challenges. The inherent complexity of these models, coupled with the demands of processing massive datasets, necessitates careful consideration of trade-offs between accuracy and performance. This section delves into the practical hurdles and best practices for successfully deploying AI within such environments.

The primary challenge lies in balancing the desire for highly accurate models with the limitations imposed by the database system’s resources.

More complex models, while potentially offering superior predictive power, often require substantially more computational power, memory, and storage. This can lead to unacceptable inference latency, hindering real-time applications and increasing operational costs. Furthermore, the training process itself can be computationally intensive and time-consuming, demanding significant infrastructure investment.

Model Selection and Optimization for Accuracy and Performance

Effective model selection is crucial. Choosing the right model architecture (e.g., linear regression, support vector machines, deep neural networks) depends heavily on the specific task, data characteristics, and available resources. For instance, a simple linear model might suffice for tasks with linear relationships in the data, whereas a deep learning model might be necessary for complex, non-linear patterns.

Optimization techniques, such as hyperparameter tuning using methods like grid search or Bayesian optimization, are essential to fine-tune model parameters and achieve optimal performance within resource constraints. Techniques like early stopping during training can also prevent overfitting and improve generalization to unseen data. For example, a retail company might initially opt for a simpler model for product recommendation, gradually increasing complexity as data volume and computational resources allow for more sophisticated personalization.
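To make grid search and early stopping concrete, the sketch below tunes a one-variable linear model trained by gradient descent. Everything here is a toy assumption chosen so the example runs with the standard library alone: the data comes from y = 2x + 1, the grid values are arbitrary, and a real project would normally use a library implementation instead.

```python
from itertools import product

def mse(w, b, data):
    """Mean squared error of y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(train_data, val_data, lr, max_epochs, patience=5):
    """Gradient descent with early stopping on validation loss.

    Returns (best_val_loss, w, b) for the best epoch seen.
    """
    w = b = 0.0
    best = (float("inf"), w, b)
    stale = 0
    n = len(train_data)
    for _ in range(max_epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train_data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
        val = mse(w, b, val_data)
        if val < best[0] - 1e-12:
            best, stale = (val, w, b), 0
        else:
            stale += 1
            if stale >= patience:
                break  # early stopping: no recent improvement on validation data
    return best

# Toy data generated from y = 2x + 1 (an assumption for this sketch).
train_data = [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0, 3.0)]
val_data = [(x, 2 * x + 1) for x in (0.5, 1.5, 2.5)]

# Grid search: try every combination and keep the lowest validation loss.
grid = {"lr": [0.001, 0.01, 0.1], "max_epochs": [50, 500]}
results = []
for lr, max_epochs in product(grid["lr"], grid["max_epochs"]):
    val_loss, w, b = train(train_data, val_data, lr, max_epochs)
    results.append((val_loss, {"lr": lr, "max_epochs": max_epochs}))
best_loss, best_params = min(results, key=lambda r: r[0])
```

Grid search cost grows multiplicatively with each added hyperparameter, which is one reason Bayesian optimization is often preferred when individual training runs are expensive.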

Impact of Model Size and Complexity on Inference Latency and Resource Consumption

Larger and more complex models directly impact inference latency and resource consumption. The number of parameters in a model, its depth (in the case of neural networks), and the complexity of its architecture all contribute to increased computational demands during inference. For example, a large language model with billions of parameters will inevitably require more processing power and memory than a smaller, simpler model.

This can lead to longer response times for queries and increased strain on the database system’s infrastructure. Real-world examples include large-scale fraud detection systems where delays in processing transactions can have significant financial implications. Careful consideration of model size and complexity is crucial for maintaining acceptable performance levels.

Model Compression Techniques for Improved Inference Speed and Reduced Memory Footprint

Several techniques exist to mitigate the resource consumption issues associated with large models. Model compression aims to reduce the size of a model without significantly compromising its accuracy. These techniques include pruning, quantization, and knowledge distillation. Pruning removes less important connections or neurons from a neural network, reducing its size. Quantization reduces the precision of the model’s weights and activations, resulting in a smaller memory footprint.

Knowledge distillation trains a smaller “student” model to mimic the behavior of a larger, more accurate “teacher” model. For example, a mobile application using an AI-powered image recognition system might employ quantization to reduce the model’s size and improve inference speed on resource-constrained mobile devices. The selection of appropriate compression techniques depends on the specific model and the desired trade-off between accuracy and resource efficiency.
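Two of the compression techniques above, quantization and pruning, can be shown on a plain list of weights. This is a simplified sketch (symmetric per-tensor quantization to the int8 range and global magnitude pruning); the function names and the example weights are assumptions, and deep learning frameworks provide their own, more complete tooling.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in q_weights]

def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

weights = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)          # close to, but not equal to, weights
pruned = magnitude_prune(weights, fraction=0.5)
```

Each integer needs one byte instead of four for a 32-bit float, and the rounding error per weight is bounded by half the scale, which is the accuracy cost being traded for the smaller footprint.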

Integration with Existing Systems

Integrating AI models into large-scale database systems presents significant challenges, particularly when dealing with legacy systems and established workflows. The complexity arises from the need to seamlessly blend new AI functionalities with existing infrastructure while maintaining data integrity and operational efficiency. Successful integration requires careful planning, a phased approach, and a robust API architecture.

The primary hurdle is often the incompatibility between the AI model’s requirements and the capabilities of legacy systems.

Older systems may lack the necessary data structures, processing power, or APIs to support the demands of modern AI algorithms. Furthermore, integrating AI often necessitates changes to existing applications, potentially requiring significant development effort and testing to ensure compatibility and stability.

Data Consistency and Integrity Management

Maintaining data consistency and integrity is paramount during AI integration. Inconsistencies can lead to inaccurate AI model predictions and unreliable results, undermining the entire purpose of implementation. Strategies to mitigate this risk include implementing robust data validation checks at various stages of the integration process, utilizing data versioning techniques to track changes and revert to previous states if necessary, and employing data quality monitoring tools to identify and address inconsistencies proactively.

For example, a financial institution integrating AI for fraud detection needs to ensure absolute accuracy in transaction data to avoid false positives or negatives. A discrepancy in a single data field could lead to significant financial losses or reputational damage. Therefore, a comprehensive data governance framework, including clear data quality standards and validation rules, is crucial.
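Validation rules of the kind described above can be expressed as a small function that returns every problem found in a record. The field names, the currency whitelist, and the specific rules are hypothetical and stand in for whatever a real data governance framework would define.

```python
from datetime import datetime

REQUIRED_FIELDS = ("transaction_id", "account_id", "amount", "currency", "timestamp")
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # hypothetical whitelist

def validate_transaction(record):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = [
        f"missing field: {field}"
        for field in REQUIRED_FIELDS
        if record.get(field) in (None, "")
    ]
    if errors:
        return errors  # later checks assume all fields are present
    amount = record["amount"]
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
        errors.append("amount must be a positive number")
    if record["currency"] not in ALLOWED_CURRENCIES:
        errors.append(f"unsupported currency: {record['currency']}")
    try:
        datetime.fromisoformat(record["timestamp"])
    except (TypeError, ValueError):
        errors.append("timestamp is not a valid ISO 8601 value")
    return errors

good = {"transaction_id": "t-1", "account_id": "a-9", "amount": 42.5,
        "currency": "EUR", "timestamp": "2024-05-01T12:00:00"}
bad = {"transaction_id": "t-2", "account_id": "a-9", "amount": -5,
       "currency": "XYZ", "timestamp": "yesterday"}
problems = validate_transaction(bad)
```

Collecting all errors rather than stopping at the first makes the output more useful for data quality monitoring, since a single report shows everything wrong with a record.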

Data Migration to an AI-Ready Environment

Migrating data from existing systems to an AI-ready environment is a critical step. This involves not only transferring the data but also transforming it into a format suitable for AI processing. This might involve data cleaning, transformation, and enrichment. A phased approach is recommended, starting with a pilot migration of a subset of the data to test the process and identify potential issues before a full-scale migration.

Consider the example of a retail company migrating its customer data to a cloud-based AI platform for personalized recommendations. The migration would involve cleaning up inconsistencies in customer addresses, standardizing data formats, and potentially enriching the data with external sources like demographic information. Careful planning and execution, including robust data validation and error handling mechanisms, are essential to ensure data integrity throughout the migration process.

API Architecture for Seamless Interaction

A well-designed API architecture is crucial for enabling seamless interaction between AI models and the database system. The API should provide a clear and consistent interface for accessing AI model functionalities, managing data exchange, and handling various requests. A RESTful API is a common choice, offering a standardized approach to building web services. The API should also incorporate features like authentication and authorization to secure access to sensitive data and AI model functionalities.

For instance, an API might expose endpoints for submitting data to the AI model for prediction, retrieving model results, and managing model configurations. Robust error handling and logging mechanisms are essential to ensure the API’s reliability and maintainability. Regular monitoring and performance testing are vital to identify and address potential bottlenecks or issues.
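The shape of such an API can be sketched as a framework-free request handler. The paths `/v1/predictions` and `/v1/health`, the bearer-token scheme, and the `predict` stand-in are all assumptions for illustration; a real service would sit behind a web framework and load an actual model.

```python
import hmac
import json

API_TOKEN = "change-me"  # placeholder; a real service would load a secret

def predict(features):
    """Stand-in for a real model call (hypothetical scoring rule)."""
    return {"score": round(min(1.0, sum(features) / 100.0), 3)}

def handle_request(method, path, headers, body):
    """Return (status_code, response_dict) for a single request."""
    supplied = headers.get("Authorization", "").encode("utf-8")
    expected = f"Bearer {API_TOKEN}".encode("utf-8")
    if not hmac.compare_digest(supplied, expected):  # constant-time comparison
        return 401, {"error": "unauthorized"}
    if (method, path) == ("GET", "/v1/health"):
        return 200, {"status": "ok"}
    if (method, path) == ("POST", "/v1/predictions"):
        try:
            features = json.loads(body)["features"]
            if not isinstance(features, list) or not all(
                isinstance(x, (int, float)) for x in features
            ):
                raise ValueError("features must be a list of numbers")
        except (ValueError, KeyError, TypeError):
            return 400, {"error": "body must be JSON with a numeric 'features' list"}
        return 200, predict(features)
    return 404, {"error": "not found"}

auth = {"Authorization": f"Bearer {API_TOKEN}"}
status, payload = handle_request("POST", "/v1/predictions", auth, '{"features": [10, 20, 30]}')
```

Separating authentication, input validation, and the model call keeps each failure mode mapped to a distinct status code, which simplifies logging and monitoring.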

Data Security and Privacy

The integration of AI into large-scale database systems presents significant challenges regarding data security and privacy. The sheer volume of sensitive data processed, combined with the complex algorithms used in AI models, creates a landscape ripe for exploitation if not properly managed. Robust security measures are paramount to maintaining data integrity, confidentiality, and user trust.

Data security risks associated with AI in large databases are multifaceted.

Unauthorized access, data breaches, and inference attacks are all potential threats. Malicious actors could exploit vulnerabilities in the system to steal sensitive information, manipulate AI models for malicious purposes, or even launch denial-of-service attacks. Furthermore, the very nature of AI, which often relies on analyzing vast datasets to identify patterns, can inadvertently reveal sensitive information if not carefully managed.

For example, an AI model trained on anonymized medical data might still be able to identify individual patients based on unique combinations of seemingly innocuous attributes.

Data Encryption, Access Control, and Auditing

Implementing robust data encryption is fundamental to protecting sensitive data at rest and in transit. This involves encrypting data using strong encryption algorithms, such as AES-256, and managing encryption keys securely using techniques like key rotation and hardware security modules (HSMs). Access control mechanisms, such as role-based access control (RBAC) and attribute-based access control (ABAC), should be implemented to restrict access to sensitive data based on user roles and attributes.

Regular security audits and penetration testing are crucial to identify and address vulnerabilities in the system. These audits should encompass not only the database infrastructure but also the AI models themselves, ensuring they are not susceptible to adversarial attacks or data poisoning.
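Role-based access control combined with an audit trail can be sketched as a permission lookup that records every decision. The roles, resources, and permission pairs below are invented for the example; production systems would normally rely on the database's or platform's own access control features.

```python
# Hypothetical role-to-permission mapping: each permission is (action, resource).
ROLE_PERMISSIONS = {
    "analyst": {("read", "aggregates")},
    "ml_engineer": {("read", "aggregates"), ("read", "features"), ("write", "models")},
    "dba": {("read", "aggregates"), ("read", "features"), ("read", "raw_pii")},
}

audit_log = []  # in practice this would be an append-only, tamper-evident store

def is_allowed(user, roles, action, resource):
    """RBAC check: allow if any of the user's roles grants the permission."""
    allowed = any(
        (action, resource) in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )
    # Auditing: record every decision, including denials.
    audit_log.append(
        {"user": user, "action": action, "resource": resource, "allowed": allowed}
    )
    return allowed

denied = is_allowed("ana", ["analyst"], "read", "raw_pii")
granted = is_allowed("dan", ["dba"], "read", "raw_pii")
```

Unknown roles grant nothing here, so the check fails closed; logging denials as well as grants is what makes the trail useful during a security audit.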

Implementing Differential Privacy

Differential privacy is a technique for protecting sensitive information during AI model training and querying. It adds carefully calibrated noise to a computation over the data (for example to query results, to gradients during training, or in some schemes to the data itself), making it difficult to infer any individual's record while still allowing the model to learn meaningful patterns from the overall dataset. The amount of noise is controlled by a privacy parameter (ε), which determines the trade-off between privacy and accuracy.

The smaller the ε, the stronger the privacy guarantee but the lower the accuracy of the model. Implementing differential privacy requires careful consideration of the specific AI algorithm and the sensitivity of the data being used. For example, the Laplace mechanism or the Gaussian mechanism can be employed to add noise to the query results, preserving the privacy of individual records while still providing useful aggregate statistics.
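For a counting query, the Laplace mechanism mentioned above adds noise with scale equal to the query's sensitivity divided by ε; adding or removing one record changes a count by at most 1, so the scale is 1/ε. The sketch below illustrates this with the standard library. It is for illustration only: the `random` module is not a cryptographically secure source, and naive floating-point noise has known weaknesses, so a vetted differential privacy library should be used in practice. The function names and records are assumptions.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Counting query released with Laplace noise of scale 1/epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical records: ages of 1,000 patients.
records = [{"age": 20 + (i % 60)} for i in range(1000)]
noisy = private_count(records, lambda r: r["age"] >= 65, epsilon=0.5,
                      rng=random.Random(7))
```

Halving ε doubles the noise scale, which is the privacy and accuracy trade-off described above expressed in one line of arithmetic.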

Ethical Considerations and Compliance Requirements

The ethical implications of using AI with large-scale databases are profound. Bias in training data can lead to discriminatory outcomes, while the lack of transparency in AI models can make it difficult to understand and address potential harms. Compliance with data privacy regulations, such as GDPR and CCPA, is essential. This involves implementing mechanisms for data subject access requests, data portability, and the right to be forgotten.

Organizations must also establish clear data governance policies and procedures to ensure responsible use of AI and compliance with all applicable regulations. Transparency and explainability are key ethical considerations. Organizations should strive to make their AI models as transparent and explainable as possible, allowing users to understand how decisions are made and to identify and address potential biases.

This might involve techniques like LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations) to interpret model predictions.

Maintaining and Monitoring AI Systems

Maintaining the performance and accuracy of AI models deployed within large-scale database systems is crucial for ensuring the continued reliability and effectiveness of the entire system. Continuous monitoring and proactive management are essential to mitigate risks associated with model degradation, bias, and security vulnerabilities. This requires a robust framework encompassing performance tracking, drift detection, bias mitigation, and proactive model updates.

Effective monitoring necessitates a multi-faceted approach, integrating automated alerts with manual reviews to identify and address potential issues promptly. This includes establishing clear performance benchmarks, implementing automated anomaly detection systems, and establishing protocols for human-in-the-loop validation of model outputs. The following sections detail key aspects of this comprehensive monitoring strategy.

Performance Monitoring and Accuracy Measurement

A robust performance monitoring system is critical for maintaining the accuracy and reliability of AI models in large-scale database systems. This involves tracking a variety of key metrics to ensure the models are functioning as expected and to identify potential issues before they impact the system’s overall performance. Regular monitoring allows for timely intervention, preventing significant disruptions and maintaining data integrity.

Key monitoring metrics should include:

- Model Accuracy: Regular evaluation of the model’s prediction accuracy using appropriate metrics (e.g., precision, recall, F1-score, AUC) against a held-out test dataset. Significant drops in accuracy should trigger alerts.

- Latency: Monitoring the time taken for the model to generate predictions. High latency can indicate performance bottlenecks and impact the responsiveness of the database system.

- Throughput: Measuring the number of predictions generated per unit of time. Low throughput might suggest scalability issues or resource constraints.

- Resource Utilization: Tracking CPU usage, memory consumption, and disk I/O to identify potential resource bottlenecks and optimize system performance. This can be visualized using dashboards that display real-time usage.

- Error Rates: Monitoring the frequency and types of errors generated by the model. Unusual increases in specific error types can signal problems that require attention.
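Two of the metrics listed above, classification accuracy measures and latency, can be computed with a few lines of plain Python. This is a minimal sketch for binary labels (1 = positive) using a nearest-rank percentile; the sample labels and latencies are made up for the example.

```python
import math

def classification_metrics(y_true, y_pred):
    """Precision, recall, and F1 for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, for pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)

latencies_ms = [12, 15, 11, 250, 14, 13, 16, 12, 18, 15]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
```

Tracking a high percentile alongside the median matters because a single slow outlier, like the 250 ms sample here, is invisible in the median but dominates the tail.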

Model Drift and Bias Detection

Model drift refers to the degradation of a model’s performance over time due to changes in the underlying data distribution. Bias, on the other hand, represents systematic errors in the model’s predictions caused by skewed or incomplete training data. Detecting and addressing these issues is vital for maintaining model fairness and accuracy.

Strategies for detecting model drift and bias include:

- Regular Data Monitoring: Continuously monitor the input data for changes in distribution using statistical methods such as Kullback-Leibler divergence. Significant shifts warrant further investigation.

- Performance Monitoring on Subgroups: Assess model performance on different subgroups within the data to identify potential bias. For example, if a loan application model performs significantly worse for a specific demographic, it may indicate bias.

- Concept Drift Detection: Employ techniques like change point detection algorithms to identify when the relationship between input features and target variables changes over time.

- Bias Auditing: Regularly audit the model for bias using fairness metrics and explainability techniques to understand the model’s decision-making process and identify potential sources of bias.
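The data monitoring step above can be sketched by comparing the distribution of a categorical feature in recent data against a training-time baseline using Kullback-Leibler divergence. The category names, the sample counts, the additive smoothing, and the 0.1 threshold are assumptions for the example; suitable thresholds depend on the feature and have to be calibrated empirically.

```python
import math
from collections import Counter

def category_distribution(values, categories, smoothing=1.0):
    """Relative frequencies with additive smoothing so no probability is zero."""
    counts = Counter(values)
    total = len(values) + smoothing * len(categories)
    return [(counts[c] + smoothing) / total for c in categories]

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same categories."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

categories = ["card", "transfer", "cash"]
baseline = ["card"] * 70 + ["transfer"] * 25 + ["cash"] * 5    # training-time mix
current = ["card"] * 40 + ["transfer"] * 50 + ["cash"] * 10    # recent traffic

p = category_distribution(current, categories)
q = category_distribution(baseline, categories)
drift_score = kl_divergence(p, q)

DRIFT_THRESHOLD = 0.1  # hypothetical; calibrate per feature
drift_detected = drift_score > DRIFT_THRESHOLD
```

KL divergence is asymmetric, so the direction of comparison (current against baseline here) is a design choice that should be kept consistent across monitoring runs.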

Model Updates and Retraining

Maintaining the accuracy and relevance of AI models requires a proactive approach to updates and retraining. This involves establishing a clear process for identifying when retraining is necessary and executing it efficiently without disrupting the system’s operation.

A well-defined model update and retraining strategy includes:

- Scheduled Retraining: Regularly retrain models based on a predetermined schedule, even if performance hasn’t degraded significantly. This helps to maintain model relevance in the face of slow changes in data distribution.

- Triggered Retraining: Retrain models automatically when performance metrics fall below predefined thresholds or when significant concept drift is detected.

- Incremental Retraining: Utilize techniques like online learning to update the model incrementally with new data without requiring a complete retraining, reducing downtime and resource consumption.

- Version Control: Maintain version control of all models to facilitate rollback to previous versions if necessary and to track model evolution over time.
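The scheduled and triggered retraining rules, together with version control and rollback, can be sketched as follows. The thresholds, the 30-day schedule, and the `ModelRegistry` class are hypothetical and only illustrate the decision logic; they are not a substitute for a real model registry.

```python
from datetime import date, timedelta

def retraining_reason(today, last_trained, accuracy, drift_score,
                      max_age_days=30, min_accuracy=0.90, max_drift=0.1):
    """Return why retraining should start, or None if it is not needed."""
    if accuracy < min_accuracy:
        return "accuracy_below_threshold"   # triggered retraining
    if drift_score > max_drift:
        return "drift_detected"             # triggered retraining
    if today - last_trained >= timedelta(days=max_age_days):
        return "scheduled"                  # scheduled retraining
    return None

class ModelRegistry:
    """Keeps every registered version and tracks which one is active."""

    def __init__(self):
        self._versions = []
        self._active = -1

    def register(self, artifact, metrics):
        self._versions.append({"artifact": artifact, "metrics": metrics})
        self._active = len(self._versions) - 1
        return self._active + 1  # 1-based version number

    def current(self):
        return self._versions[self._active] if self._active >= 0 else None

    def rollback(self):
        if self._active < 1:
            raise RuntimeError("no earlier version to roll back to")
        self._active -= 1
        return self.current()

registry = ModelRegistry()
registry.register("model-v1.bin", {"accuracy": 0.93})
reason = retraining_reason(date(2024, 6, 1), date(2024, 5, 20),
                           accuracy=0.87, drift_score=0.02)
```

Rollback only moves the active pointer and never deletes a version, so the full history stays available for tracking how the model evolved.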

Alerting and Response to Performance Degradation or Security Breaches

A comprehensive alerting system is essential for promptly addressing performance degradation or security breaches within the AI infrastructure. This system should provide timely notifications to relevant personnel, enabling swift intervention to minimize disruption and mitigate potential risks.

Key components of an effective alerting system include:

- Threshold-based alerts: Automatically trigger alerts when key performance indicators (KPIs) cross predefined thresholds. For instance, an alert might be generated if model accuracy drops below a certain level.

- Anomaly detection alerts: Utilize anomaly detection algorithms to identify unusual patterns in model performance or system behavior that may indicate a problem.

- Security monitoring alerts: Implement robust security monitoring to detect unauthorized access attempts, data breaches, or other security threats to the AI infrastructure.

- Escalation procedures: Establish clear escalation procedures to ensure that alerts are promptly addressed by the appropriate personnel, depending on the severity of the issue.
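Threshold-based alerts with a simple escalation order can be sketched as a rule table evaluated against the latest metrics. The metric names, thresholds, and escalation actions are invented for the example and would be replaced by whatever the monitoring stack and on-call process actually define.

```python
# Hypothetical rules: (metric, direction, threshold, escalation action).
RULES = [
    ("accuracy", "below", 0.90, "page_on_call"),
    ("p95_latency_ms", "above", 200.0, "notify_team"),
    ("failed_logins_per_min", "above", 50.0, "page_security"),
]

# Higher number means more urgent; used to order the resulting alerts.
ESCALATION = {"notify_team": 1, "page_on_call": 2, "page_security": 3}

def evaluate_alerts(metrics, rules=RULES):
    """Return breached rules, most urgent first. Missing metrics are skipped."""
    alerts = []
    for metric, direction, threshold, action in rules:
        value = metrics.get(metric)
        if value is None:
            continue
        breached = value < threshold if direction == "below" else value > threshold
        if breached:
            alerts.append({"metric": metric, "value": value,
                           "threshold": threshold, "action": action})
    return sorted(alerts, key=lambda a: ESCALATION[a["action"]], reverse=True)

alerts = evaluate_alerts(
    {"accuracy": 0.86, "p95_latency_ms": 250.0, "failed_logins_per_min": 3.0}
)
```

Keeping the rules as data rather than code makes thresholds easy to review and adjust without redeploying the alerting logic.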

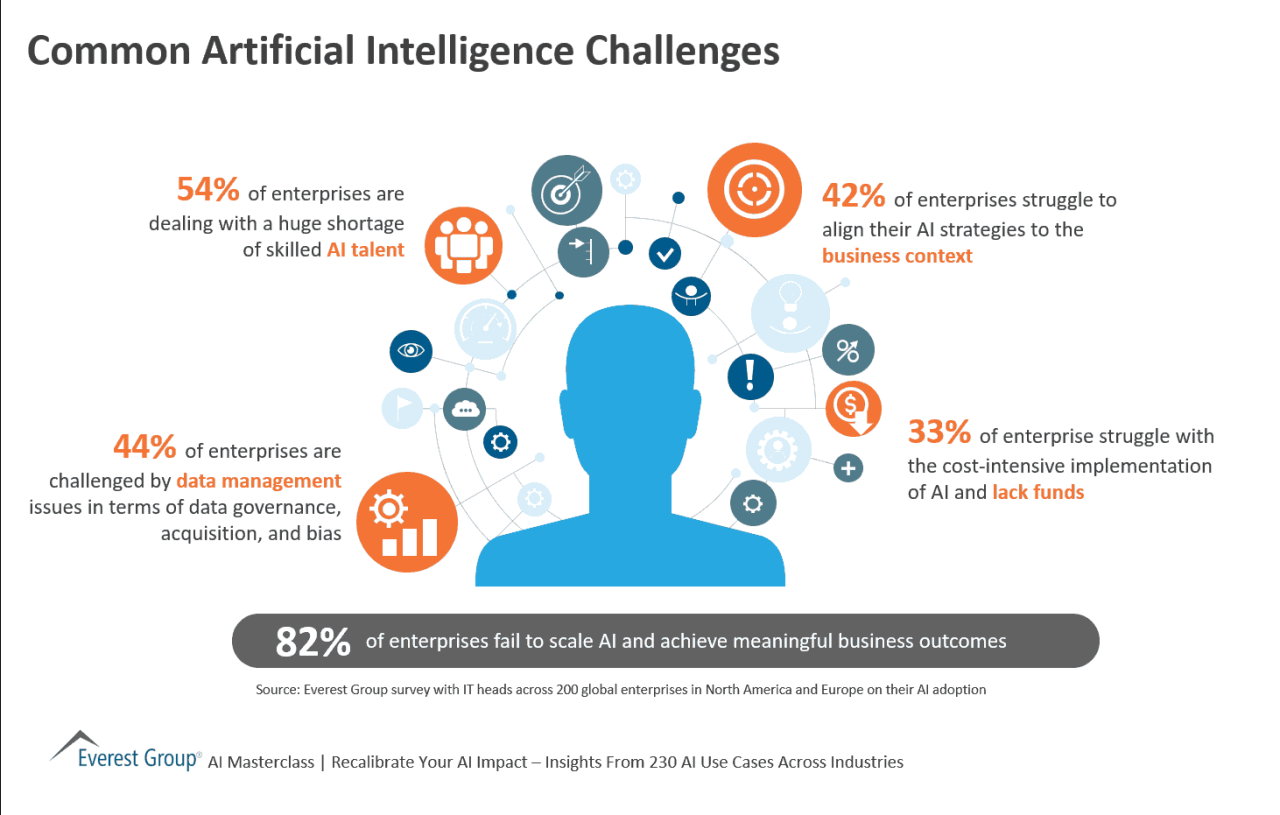

Talent and Expertise

Successfully implementing and maintaining AI systems within large-scale database environments demands a unique blend of skills and expertise. This goes beyond traditional database administration and software development, requiring a deep understanding of machine learning algorithms, data science principles, and the specific challenges posed by integrating AI into existing infrastructure. The scarcity of professionals possessing this multifaceted skillset presents a significant hurdle to widespread AI adoption in this critical area.

The successful integration of AI into large-scale databases requires a multidisciplinary team.

Data scientists are needed to design, train, and evaluate machine learning models, while database administrators (DBAs) ensure the efficient operation and scalability of the underlying database infrastructure. Software engineers are crucial for integrating AI models into existing systems and developing new applications. Furthermore, domain experts with a deep understanding of the specific business context are necessary to ensure the AI system addresses the relevant problems and provides valuable insights.

The lack of individuals possessing this comprehensive skillset represents a major obstacle to overcome.

Challenges in Recruiting and Retaining AI Professionals

The competitive landscape for skilled AI professionals is incredibly intense. Companies across various sectors are vying for the same limited pool of talent, driving up salaries and benefits packages. Furthermore, the rapid pace of technological advancements in AI requires continuous learning and upskilling, demanding significant investment from both employers and employees. Attracting and retaining top AI talent necessitates offering competitive compensation, opportunities for professional development, and a stimulating work environment.

For instance, data scientists who leave their positions early often cite a lack of challenging projects or limited career growth opportunities as primary reasons.

Upskilling Existing Database Professionals

Upskilling existing database administrators and developers is a crucial strategy for bridging the talent gap. Targeted training programs focused on AI-related technologies can empower these professionals to contribute effectively to AI initiatives. This approach leverages existing expertise in database management and software development, building upon a solid foundation to introduce AI concepts and techniques. For example, training programs could focus on practical applications of machine learning in database optimization, anomaly detection, and predictive maintenance.

Such programs should include hands-on experience with relevant tools and technologies, allowing participants to apply newly acquired knowledge in real-world scenarios.

Essential Training Programs and Resources

Several resources are available to support the development of AI expertise. Formal education, including master’s and doctoral programs in data science and artificial intelligence, provides a strong theoretical foundation. Online courses offered by platforms like Coursera, edX, and Udacity offer flexible and accessible learning opportunities. Industry certifications, such as those offered by AWS, Google Cloud, and Microsoft Azure, demonstrate proficiency in specific AI technologies.

Furthermore, participation in workshops, conferences, and hackathons provides valuable networking opportunities and practical experience. A structured approach, combining formal education with practical training and certifications, provides a comprehensive pathway to developing the necessary AI skills. For instance, a combination of a Coursera course on deep learning and an AWS certification in machine learning would equip a DBA with the skills needed to optimize database performance using AI techniques.

Conclusion

Integrating AI into large-scale database systems presents a formidable but ultimately rewarding challenge. While the hurdles are substantial (from managing massive datasets and optimizing computational resources to ensuring data security and cultivating the necessary expertise), the potential benefits are transformative. By carefully addressing the issues outlined above, organizations can unlock the power of AI to gain unprecedented insights from their data, driving innovation and achieving a significant competitive advantage.

The journey demands a proactive, strategic approach, blending technological innovation with a commitment to data governance and talent development. The rewards, however, are well worth the effort.