Ethical considerations of using AI for online money-making are paramount. The rapid integration of artificial intelligence into online financial systems presents unprecedented opportunities for income generation, but also raises significant ethical concerns. From data privacy violations and algorithmic bias to the potential for job displacement and economic inequality, the responsible development and deployment of AI in this context require careful consideration and robust ethical frameworks.

This exploration delves into the complexities of these issues, examining potential solutions and highlighting the crucial need for a balanced approach that maximizes benefits while mitigating risks.

This article examines key ethical challenges surrounding AI-powered online money-making, including data privacy and security vulnerabilities, algorithmic bias and fairness concerns, transparency and explainability issues within AI algorithms, and the broader societal impact on employment and economic equality. We will analyze the legal and ethical implications of data collection and usage, explore methods for mitigating bias, and discuss the responsibilities of developers and regulatory bodies in fostering responsible AI development.

Ultimately, the goal is to navigate the ethical minefield of AI-driven online finance, fostering innovation while prioritizing fairness and responsible practices.

Data Privacy and Security in AI-driven Money-Making Platforms

The proliferation of AI-powered platforms for online income generation presents significant opportunities but also raises crucial concerns regarding data privacy and security. These platforms often require extensive user data to personalize recommendations, optimize earnings strategies, and improve their algorithms. This reliance on user data creates vulnerabilities that demand careful attention to both legal and ethical implications.

The potential for data breaches and misuse is substantial. AI systems, however sophisticated, are not immune to hacking attempts or malicious actors, and the sheer volume of sensitive personal and financial information these platforms process magnifies the impact of any security compromise. At risk is not only financial data, such as bank account details and transaction history, but also personal information such as location data, spending habits, and even social media activity, all of which can be exploited for identity theft or intrusive targeted advertising.

Legal and Ethical Implications of Data Collection and Use

The collection and use of user data in AI-powered money-making systems are subject to a complex web of legal and ethical considerations. Regulations such as the GDPR (General Data Protection Regulation) in the European Union and the CCPA (California Consumer Privacy Act) in California impose strict requirements on data collection, storage, and processing. These regulations emphasize transparency about data practices, limits on what is collected and how it is used, and users' rights to access and delete their data. The GDPR also requires a lawful basis, such as consent, for processing personal data.

Ethically, the use of user data should be transparent, justifiable, and proportionate to the platform’s purpose. Any use of data beyond the explicitly stated purpose should require fresh, explicit user consent. Furthermore, algorithms should be designed and implemented to minimize bias and ensure fair treatment of all users, regardless of their demographic characteristics. Failure to comply with these legal and ethical standards can lead to significant fines, reputational damage, and erosion of user trust.
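
One way to make purpose limitation and data minimization concrete is to attach an allow-list of fields to each declared processing purpose and refuse any request outside it unless the user has consented. The sketch below is a minimal illustration only. The purpose names, field names and the `PURPOSE_FIELDS` mapping are hypothetical, and it treats consent as the only basis for processing. That is a simplification, not a substitute for a legal review of GDPR or CCPA obligations.

```python
from dataclasses import dataclass, field

# Hypothetical mapping: each declared purpose lists the only fields it may use.
PURPOSE_FIELDS = {
    "payout_processing": {"user_id", "bank_account"},
    "earnings_recommendations": {"user_id", "task_history"},
}


@dataclass
class User:
    user_id: str
    data: dict
    consented_purposes: set = field(default_factory=set)


def fields_for_purpose(user: User, purpose: str) -> dict:
    """Return only the fields a declared, consented-to purpose may use."""
    if purpose not in PURPOSE_FIELDS:
        raise PermissionError(f"undeclared purpose: {purpose}")
    if purpose not in user.consented_purposes:
        raise PermissionError(f"no consent recorded for: {purpose}")
    allowed = PURPOSE_FIELDS[purpose]
    # Data minimization: drop every field this purpose does not need.
    return {k: v for k, v in user.data.items() if k in allowed}


alice = User(
    user_id="u1",
    data={"user_id": "u1", "bank_account": "XX00", "location": "Paris", "task_history": [3, 5]},
    consented_purposes={"earnings_recommendations"},
)
print(fields_for_purpose(alice, "earnings_recommendations"))
# {'user_id': 'u1', 'task_history': [3, 5]}
# fields_for_purpose(alice, "payout_processing") raises PermissionError (no consent).
```

Refusing by default means a new use of data fails loudly until someone declares the purpose and collects consent. Silently reusing data that was already collected is exactly what the purpose-limitation principle is meant to prevent.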

Designing a System for Anonymizing User Data

Maintaining the functionality of AI-driven online money-making tools while protecting user privacy requires sophisticated anonymization techniques. A robust system could employ differential privacy. This adds carefully calibrated random noise to data or to query results so that no individual's information can be reliably inferred, while still preserving the aggregate trends that AI algorithms rely on. Data minimization should also be central to the design: only the data strictly necessary for the platform’s operation should be collected and processed.
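
To make the differential-privacy idea concrete, the sketch below applies the Laplace mechanism to a counting query, such as "how many users earned more than 500 this month". A count has sensitivity 1, because adding or removing one user changes it by at most 1. Adding noise drawn from Laplace(0, 1/ε) therefore gives ε-differential privacy for that single query. The `epsilon` value and the earnings data are illustrative assumptions. A production system would also need to track the cumulative privacy budget across queries, and it should use a vetted library rather than this hand-rolled sampler, which ignores known floating-point pitfalls.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Illustrative data: monthly earnings of platform users.
earnings = [120, 340, 90, 1500, 60, 800, 45, 2200]
rng = random.Random(42)  # fixed seed only so the example is reproducible
noisy = private_count(earnings, lambda e: e > 500, epsilon=0.5, rng=rng)
print(f"noisy count of users earning > 500: {noisy:.2f}")  # true count is 3
```

Any single released value may be off by several units. Averaged over many independent releases it centres on the true count. This is why repeated queries must be charged against a privacy budget.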

Federated learning is another promising approach. Models are trained where the data resides (for example, on users’ devices), and only model updates are shared with a central server. This allows collaborative model improvement while keeping raw data decentralized. However, model updates can still leak some information, so federated learning is often combined with secure aggregation or differential privacy. Finally, robust access control mechanisms and encryption protocols are crucial to prevent unauthorized access to sensitive data, even within an anonymized dataset.
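
Federated averaging (FedAvg) is one common way to implement federated learning. Each client trains on its own data, and the server averages only the resulting model parameters, weighting each client by its number of examples. The sketch below fits a one-parameter linear model y ≈ w·x using local gradient descent. The model, learning rate, epoch counts and client data are all illustrative assumptions. Real deployments train far larger models and typically add secure aggregation.

```python
def local_update(w: float, data, lr: float = 0.01, epochs: int = 20) -> float:
    """Run gradient descent on one client's data for the model y ≈ w * x.

    Only the updated weight leaves the client; the (x, y) pairs never do.
    """
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(global_w: float, clients, rounds: int = 10) -> float:
    """FedAvg: average client weights, weighted by each client's sample count."""
    total = sum(len(c) for c in clients)
    for _ in range(rounds):
        local_ws = [local_update(global_w, c) for c in clients]
        global_w = sum(w * len(c) for w, c in zip(local_ws, clients)) / total
    return global_w


# Three clients whose private data all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
print(round(federated_average(0.0, clients), 3))  # → 3.0
```

The server only ever sees the per-client weights. It still recovers the shared relationship y = 3x that no single client's data could fully establish alone.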

Comparison of Data Encryption Methods

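Several encryption methods can be used to secure user information in AI-based financial applications. The choice depends on factors such as security requirements, implementation complexity, and performance overhead. In practice, these methods are often combined. An asymmetric algorithm such as RSA or ECC protects a symmetric AES key, which in turn encrypts the bulk data. PGP packages this same hybrid pattern.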

| Method | Type | Encryption Strength | Implementation Complexity | Data Size Impact |
|---|---|---|---|---|
| AES (Advanced Encryption Standard) | Symmetric | Very High (with appropriate key length) | Moderate | Small |
| RSA (Rivest-Shamir-Adleman) | Asymmetric | High (depends on key length) | High | Moderate |
| ECC (Elliptic Curve Cryptography) | Asymmetric | High (with appropriate key size) | Moderate | Small |
| PGP (Pretty Good Privacy) | Hybrid (symmetric and asymmetric) | High (depends on key length and implementation) | Moderate to High | Moderate |

Algorithmic Bias and Fairness in AI-powered Financial Systems

AI-powered systems are increasingly prevalent in online money-making platforms, offering opportunities for automation, efficiency, and personalized services. However, the use of algorithms in financial decision-making raises significant ethical concerns regarding bias and fairness. The potential for these systems to perpetuate or amplify existing societal inequalities necessitates a thorough examination of their design, implementation, and impact. Understanding the sources of bias and developing mitigation strategies are crucial to ensuring equitable access to financial opportunities for all users.

Sources of Algorithmic Bias in AI-driven Financial Systems

Algorithmic bias in AI-powered financial systems stems from various sources, often intertwined and difficult to isolate. These sources can be broadly categorized into biases present in the data used to train the algorithms, biases embedded in the algorithm’s design, and biases arising from the interaction between the algorithm and the environment in which it operates. Failing to address these sources can lead to discriminatory outcomes, hindering financial inclusion and exacerbating existing inequalities.

Ethical Implications of Biased Algorithms Impacting User Access to Financial Opportunities

Biased algorithms can significantly limit access to financial opportunities for certain groups. For example, an algorithm trained on data reflecting historical lending practices might unfairly deny loans to individuals from underrepresented communities, perpetuating a cycle of financial exclusion. This not only impacts individuals’ economic well-being but also undermines broader societal goals of economic equity and social mobility. The ethical implications extend beyond individual hardship to encompass systemic injustices and the erosion of trust in financial institutions.

The lack of transparency in algorithmic decision-making further complicates the issue, making it difficult for affected individuals to understand and challenge unfair outcomes.

Methods for Mitigating Algorithmic Bias in AI Models Related to Online Finance

Mitigating algorithmic bias requires a multi-faceted approach that addresses the sources of bias at various stages of the AI lifecycle. This includes careful data curation to ensure representative datasets, employing algorithmic fairness techniques during model development, and implementing robust monitoring and auditing mechanisms to detect and correct bias in deployed systems. Techniques such as fairness-aware machine learning, adversarial debiasing, and explainable AI can help to identify and mitigate bias.

Furthermore, promoting diversity and inclusion within the teams developing and deploying these systems is crucial to fostering a culture of ethical AI development.
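
As one concrete example of the fairness-aware techniques mentioned above, the reweighing method of Kamiran and Calders is a data-preprocessing step. It assigns each training example the weight w(g, y) = P(g)·P(y) / P(g, y), so that group membership and outcome label become statistically independent in the weighted data. The sketch below computes these weights for a toy loan dataset. The groups and labels are hypothetical. In practice, the weights would be passed to any learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Under these weights, group and label are independent, so a model trained
    on the weighted data is not rewarded for reproducing the historical
    correlation between group membership and outcome.
    """
    n = len(labels)
    p_group = Counter(groups)
    p_label = Counter(labels)
    p_joint = Counter(zip(groups, labels))
    return [
        (p_group[g] / n) * (p_label[y] / n) / (p_joint[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical loan data: group "B" was approved less often than "A".
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]  # 1 = approved
weights = reweighing_weights(groups, labels)

for g in ("A", "B"):
    approved = sum(w for gg, y, w in zip(groups, labels, weights) if gg == g and y == 1)
    total = sum(w for gg, w in zip(groups, weights) if gg == g)
    print(g, "weighted approval rate:", round(approved / total, 3))  # 0.5 for both
```

The raw approval rates are 0.75 for group A and 0.25 for group B. After reweighing, both groups contribute equally weighted positive and negative examples. Reweighing addresses only label imbalance in the training data. It does not remove bias carried by proxy features, so post-deployment monitoring is still needed.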

Real-World Examples of Algorithmic Bias Negatively Impacting Individuals in Online Money-Making Contexts

The impact of algorithmic bias is evident in several real-world scenarios, each of which underscores the urgency of addressing these issues.

- Credit Scoring Algorithms: Studies have shown that AI-powered credit scoring systems can disproportionately deny credit to individuals from minority groups, even when controlling for factors like credit history and income. This is often due to biases embedded in the training data reflecting historical lending practices that discriminated against these groups.

- Fraud Detection Systems: AI-driven fraud detection systems have been shown to flag transactions from certain demographics more frequently than others, leading to the unjust blocking of legitimate accounts and hindering access to financial services.

- Algorithmic Trading: Algorithmic trading strategies, while efficient, can perpetuate market inequalities if not carefully designed and monitored. For instance, algorithms might exploit information asymmetries or react disproportionately to news affecting specific sectors, leading to unfair outcomes for certain investors.
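
Disparities like those in the credit-scoring and fraud-detection examples above are usually detected by comparing outcome rates across groups. The sketch below computes two rates for each group. The first is the selection rate (how often the model flags or approves). The second is the false-positive rate (how often legitimate cases are wrongly flagged). It also computes the ratio of the lowest to the highest selection rate. The 0.8 cut-off borrows the "four-fifths rule" from US employment-selection guidance and is used here only as an illustrative screening heuristic, not as a legal test for lending or fraud systems. The records are hypothetical.

```python
from collections import defaultdict


def group_rates(records):
    """Per-group selection rate and false-positive rate.

    Each record is (group, predicted_positive, actually_positive) with 0/1 values.
    For a fraud model, "positive" means "flagged as fraud".
    """
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "negatives": 0, "false_pos": 0})
    for group, pred, actual in records:
        s = stats[group]
        s["n"] += 1
        s["pred_pos"] += pred
        if not actual:  # legitimate case
            s["negatives"] += 1
            s["false_pos"] += pred
    return {
        g: {
            "selection_rate": s["pred_pos"] / s["n"],
            "false_positive_rate": s["false_pos"] / s["negatives"] if s["negatives"] else 0.0,
        }
        for g, s in stats.items()
    }


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    sel = [r["selection_rate"] for r in rates.values()]
    return min(sel) / max(sel) if max(sel) else 1.0


# Hypothetical fraud-flag records: (group, flagged, actually_fraudulent).
records = [
    ("X", 1, 1), ("X", 0, 0), ("X", 0, 0), ("X", 0, 0), ("X", 1, 0),
    ("Y", 1, 1), ("Y", 1, 0), ("Y", 1, 0), ("Y", 0, 0), ("Y", 1, 0),
]
rates = group_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"ratio = {ratio:.2f}", "-> review" if ratio < 0.8 else "-> ok")
```

In this toy data, legitimate customers in group Y are wrongly flagged three times as often as those in group X (0.75 versus 0.25). This is exactly the pattern described in the fraud-detection example.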

Transparency and Explainability in AI Financial Algorithms

The increasing reliance on AI in online financial applications necessitates a robust framework for transparency and explainability. Users need to understand how AI algorithms make decisions impacting their finances, fostering trust and accountability within these systems. Lack of transparency can lead to distrust, regulatory issues, and ultimately, the erosion of user confidence in AI-driven financial services. This section will explore best practices for achieving transparency and explainability in AI-powered financial algorithms.

Users should be able to understand the factors influencing algorithmic decisions that affect their financial well-being. This understanding supports informed decision-making and empowers users to identify and challenge potentially unfair or biased outcomes. Regulators also often require transparency to verify compliance and prevent the misuse of AI in finance.

Best Practices for Ensuring Transparency in AI Algorithm Decision-Making

Ensuring transparency in AI algorithm decision-making requires a multi-faceted approach. This involves not only documenting the algorithm’s functionality but also providing users with accessible and understandable explanations of its output. This goes beyond simply providing access to code; it requires clear communication tailored to the user’s level of technical understanding.

A crucial aspect of achieving transparency is thorough documentation of the AI model’s development and deployment. This includes detailing the data used for training, the model’s architecture, and the specific features it utilizes for decision-making. This documentation should be readily available to both users and regulatory bodies upon request.
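
A lightweight way to keep this documentation consistent is a machine-readable "model card" stored alongside the model. The fields below mirror the items listed above (training data, architecture, decision features) plus a few common additions. The schema and every value are hypothetical and loosely inspired by model-card practice. They are not a standard format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical documentation record for a deployed model."""
    name: str
    version: str
    architecture: str
    training_data: str
    decision_features: list
    intended_use: str
    known_limitations: list = field(default_factory=list)
    fairness_evaluations: dict = field(default_factory=dict)

    def to_json(self) -> str:
        """Serialize for publication to users or regulators on request."""
        return json.dumps(asdict(self), indent=2)


# All values below are illustrative placeholders, not real results.
card = ModelCard(
    name="loan-approval-scorer",
    version="1.3.0",
    architecture="logistic regression",
    training_data="internal loan application records, 2019-2023 (hypothetical)",
    decision_features=["income", "debt_to_income", "credit_history_months"],
    intended_use="pre-screening of personal loan applications with human review",
    known_limitations=["sparse data for applicants under 21"],
    fairness_evaluations={"disparate_impact_ratio": 0.91},
)
print(card.to_json())
```

Keeping the card in version control next to the model makes it harder for the documentation to drift from what is actually deployed.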

The Importance of Algorithm Explainability for Building Trust and Accountability

Explainable AI (XAI) techniques are essential for building trust and accountability. These techniques aim to give users insight into the reasoning behind an algorithm’s decisions, allowing them to understand why a particular outcome was reached. For example, if an AI system denies a loan application, explainability lets the applicant see which factors contributed to the denial and address them in future applications. This fosters fairness and helps guard against arbitrary or discriminatory outcomes.
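
For simple, interpretable models, the explanation for a loan denial can be computed directly. The sketch below assumes a hypothetical logistic-regression loan model. Each feature's contribution to the log-odds is its weight multiplied by how far the applicant is from a reference applicant. The most negative contributions become the stated reasons for a denial. The weights, baseline, bias and threshold are all invented for illustration. Complex models usually need model-agnostic attribution methods such as SHAP or LIME instead.

```python
import math

# Hypothetical model: log-odds = BIAS + sum(weight * (value - baseline)).
WEIGHTS = {"income_k": 0.04, "debt_to_income": -6.0, "late_payments": -0.8}
BASELINE = {"income_k": 50.0, "debt_to_income": 0.30, "late_payments": 0.0}  # income in thousands
BIAS = 0.5
THRESHOLD = 0.5  # approve if the predicted probability is at least this


def explain(applicant: dict, top_k: int = 2):
    """Return the decision, its probability, per-feature contributions and denial reasons."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    log_odds = BIAS + sum(contributions.values())
    prob = 1 / (1 + math.exp(-log_odds))
    approved = prob >= THRESHOLD
    # Denial reasons: the features that pushed the score down the most.
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    reasons = [f for _, f in negatives[:top_k]] if not approved else []
    return approved, round(prob, 3), contributions, reasons


approved, prob, contribs, reasons = explain(
    {"income_k": 40.0, "debt_to_income": 0.45, "late_payments": 2}
)
print(approved, prob, reasons)  # False 0.083 ['late_payments', 'debt_to_income']
```

Because each reason maps to a specific, changeable input, the applicant learns what to address rather than receiving an unexplained rejection.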

Accountability is intrinsically linked to explainability. When algorithms are opaque, it becomes difficult to assign responsibility for errors or unfair outcomes. Explainable AI enables the identification of biases or flaws in the algorithm, allowing for corrective actions and the prevention of future issues. This promotes a more responsible and ethical use of AI in finance.

Designing User Interfaces that Communicate AI Algorithm Workings

User interfaces (UIs) play a critical role in communicating the workings of AI algorithms to users. The design of these interfaces should prioritize clarity and simplicity, avoiding technical jargon and presenting information in a visually appealing and easily digestible format. For instance, a loan application system could provide a breakdown of the factors influencing the decision, using clear visualizations like bar charts to show the relative importance of each factor.

Effective UI design involves using clear and concise language, employing visual aids like charts and graphs, and providing interactive elements that allow users to explore the AI’s reasoning. The level of detail provided should be tailored to the user’s technical expertise, offering more detailed explanations for users who seek them while providing simpler summaries for those who prefer a less technical overview. Providing multiple levels of explanation caters to a broader range of users and fosters greater transparency.
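
The idea of offering several levels of explanation can be sketched as one function. It renders the same factor contributions either as a one-line summary or as a detailed text bar chart, a plain-text stand-in for the graphical bar charts described above. The factor names and contribution values are hypothetical. A real UI would draw these with a charting component.

```python
def render_explanation(contributions: dict, level: str = "summary", width: int = 20) -> str:
    """Render factor contributions at the level of detail the user asks for.

    Positive values helped the application; negative values hurt it.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if level == "summary":
        factor, value = ranked[0]
        direction = "helped" if value >= 0 else "hurt"
        return f"The biggest factor was {factor}, which {direction} your application."
    if level == "detailed":
        biggest = abs(ranked[0][1]) or 1.0
        lines = []
        for factor, value in ranked:
            bar = "#" * round(width * abs(value) / biggest)  # bar length ~ influence
            sign = "+" if value >= 0 else "-"
            lines.append(f"{factor:<16} {sign} {bar}")
        return "\n".join(lines)
    raise ValueError("level must be 'summary' or 'detailed'")


# Hypothetical contributions from a loan model.
contribs = {"income": -0.4, "debt_to_income": -0.9, "late_payments": -1.6, "account_age": 0.3}
print(render_explanation(contribs))
print(render_explanation(contribs, level="detailed"))
```

Both views come from the same numbers, so the summary can never contradict the detailed breakdown a more technical user opens.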

Visual Representation of a Transparent and Explainable AI Algorithm

Imagine a visual representation showing a simplified flowchart of an AI algorithm for loan applications. The flowchart begins with the user inputting their data (income, credit score, etc.). Arrows then lead to different processing blocks representing the algorithm’s steps, each clearly labeled (e.g., “Credit Score Assessment,” “Income Verification,” “Risk Assessment”). Each block displays a brief explanation of its function.

Finally, the flowchart culminates in a decision node, showing the loan approval or denial, with a clear explanation of the contributing factors based on the weighted scores from each processing block, presented visually as a bar chart showing the relative influence of each factor on the final decision. This visual representation clearly illustrates the data flow, processing steps, and the reasoning behind the algorithm’s decision, making the process transparent and easily understandable.
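
The flowchart can also be expressed in code, which makes each block's contribution auditable. In the sketch below, each processing block ("Credit Score Assessment", "Income Verification", "Risk Assessment") returns a score between 0 and 1. The decision node combines these scores using fixed weights. The output keeps every block's weighted score so it can be shown as the bar chart described above. The scoring rules, weights and threshold are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Block = Tuple[str, float, Callable[[dict], float]]  # (label, weight, scorer)


def credit_score_assessment(a: dict) -> float:
    # Map a 300-850 credit score onto 0..1.
    return min(max((a["credit_score"] - 300) / 550, 0.0), 1.0)


def income_verification(a: dict) -> float:
    # Full marks once verified income covers the loan amount three times over.
    return min(a["verified_income"] / (3 * a["loan_amount"]), 1.0)


def risk_assessment(a: dict) -> float:
    # Deduct a quarter point for each recent missed payment.
    return max(1.0 - 0.25 * a["missed_payments"], 0.0)


PIPELINE: List[Block] = [
    ("Credit Score Assessment", 0.5, credit_score_assessment),
    ("Income Verification", 0.3, income_verification),
    ("Risk Assessment", 0.2, risk_assessment),
]


def decide(applicant: dict, threshold: float = 0.6) -> Tuple[bool, Dict[str, float]]:
    """Run every block, keep its weighted score, and compare the total to the threshold."""
    weighted = {label: weight * scorer(applicant) for label, weight, scorer in PIPELINE}
    return sum(weighted.values()) >= threshold, weighted


approved, breakdown = decide(
    {"credit_score": 690, "verified_income": 45000, "loan_amount": 20000, "missed_payments": 1}
)
print("Approved" if approved else "Denied")
for label, score in breakdown.items():
    print(f"{label:<24} {'#' * round(score * 40)} {score:.2f}")
```

Since the final decision is just the sum of the displayed bars, the explanation shown to the user is guaranteed to match the logic that produced the decision.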

Responsible AI Development and Deployment in the Context of Online Income

The ethical development and deployment of AI in online money-making ventures are paramount. The potential for misuse, bias, and harm necessitates a proactive approach, encompassing both technical safeguards and robust regulatory oversight. This section explores the responsibilities of developers and companies, the role of regulatory frameworks, and contrasting approaches to responsible AI development, and outlines key ethical guidelines.

Ethical Responsibilities of Developers and Companies

Developers and companies deploying AI for online income generation bear significant ethical responsibilities. These extend beyond simply creating functional systems; they encompass ensuring fairness, transparency, accountability, and user protection. This includes proactively identifying and mitigating potential biases in algorithms, designing systems that are easily understood and auditable, and establishing mechanisms for redress in case of errors or unfair outcomes.

For example, a company developing an AI-powered loan application system must ensure that the algorithm does not discriminate against specific demographic groups based on factors unrelated to creditworthiness. Furthermore, they should provide clear explanations of how the system makes its decisions and offer avenues for users to appeal decisions they believe to be unfair.

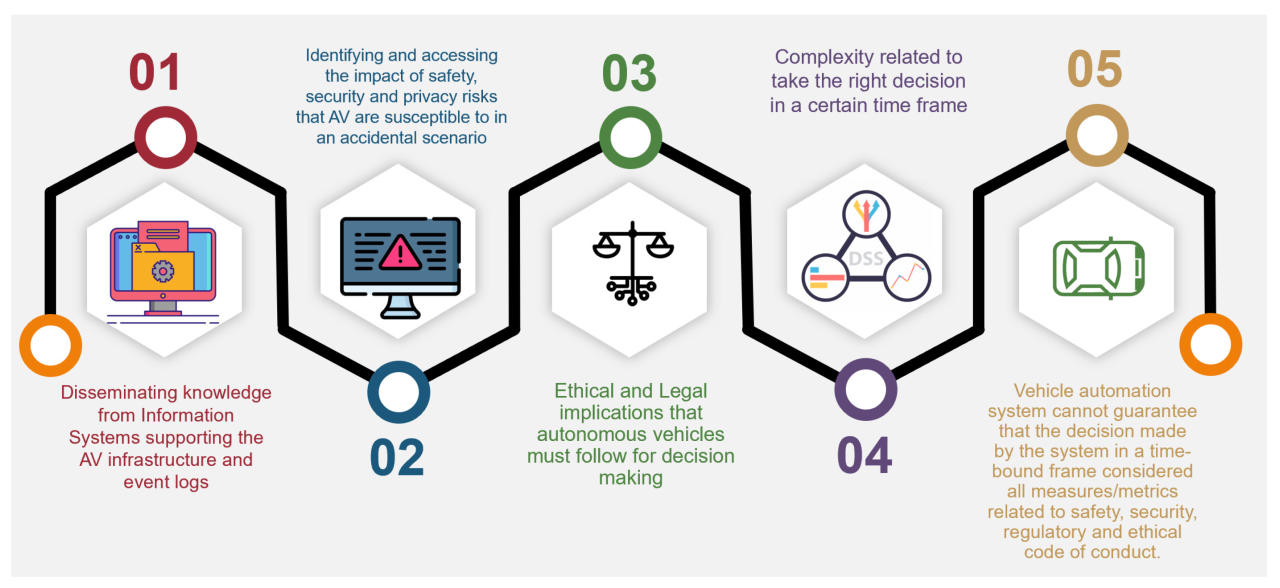

The Role of Regulatory Frameworks in Ensuring Ethical AI Use in Online Finance

Regulatory frameworks play a crucial role in shaping the ethical landscape of AI in online finance. Clear guidelines and regulations are needed to address issues such as data privacy, algorithmic bias, and transparency. These frameworks should promote responsible innovation while preventing the exploitation of users. Examples include regulations mandating data security protocols, requiring impact assessments of AI systems before deployment, and establishing mechanisms for oversight and enforcement.

The absence of robust regulatory frameworks can lead to a “Wild West” scenario where unethical practices proliferate, undermining trust and potentially causing significant harm to individuals and the financial system as a whole. Existing regulations, such as GDPR in Europe, offer a starting point but need to be adapted and expanded to specifically address the unique challenges posed by AI in online finance.

Comparison of Approaches to Responsible AI Development

Different approaches to responsible AI development exist, each with its strengths and weaknesses. A “principle-based” approach focuses on establishing high-level ethical principles, such as fairness, transparency, and accountability, leaving specific implementation details to developers. This approach offers flexibility but may lack the precision needed to address specific ethical challenges.

A “rule-based” approach, conversely, defines specific rules and guidelines that developers must adhere to. This approach provides greater clarity but can be less adaptable to technological change and new contexts. A hybrid approach, combining principle-based and rule-based elements, offers a more balanced solution, leveraging the strengths of both while mitigating their weaknesses. For instance, a company might adopt overarching principles of fairness and transparency while also implementing specific rules regarding data handling and algorithm testing.
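
In a hybrid approach, the specific rules regarding data handling and algorithm testing can be encoded as automated pre-deployment checks, while the broader principles remain in policy documents. The sketch below evaluates a hypothetical deployment manifest against a few such rules. The manifest keys, the rules and their thresholds are all assumptions for illustration. They are not requirements drawn from any regulation.

```python
from typing import Callable, List, Optional

# Each hypothetical rule returns an error message, or None if the manifest passes.
Rule = Callable[[dict], Optional[str]]


def requires_encryption_at_rest(m: dict) -> Optional[str]:
    return None if m.get("encryption_at_rest") else "user data must be encrypted at rest"


def requires_retention_limit(m: dict) -> Optional[str]:
    days = m.get("retention_days")
    ok = days is not None and days <= 365
    return None if ok else "retention must be set and at most 365 days"


def requires_fairness_test(m: dict) -> Optional[str]:
    ratio = m.get("disparate_impact_ratio")
    ok = ratio is not None and ratio >= 0.8
    return None if ok else "fairness test missing or below 0.8"


RULES: List[Rule] = [requires_encryption_at_rest, requires_retention_limit, requires_fairness_test]


def check_manifest(manifest: dict) -> List[str]:
    """Return all rule violations; an empty list means deployment may proceed."""
    results = (rule(manifest) for rule in RULES)
    return [msg for msg in results if msg is not None]


manifest = {"encryption_at_rest": True, "retention_days": 730, "disparate_impact_ratio": 0.85}
for problem in check_manifest(manifest):
    print("BLOCKED:", problem)  # BLOCKED: retention must be set and at most 365 days
```

The rules catch concrete, checkable failures. Questions they cannot express, such as whether a use case is appropriate at all, remain with the principle-based review.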

Ethical Guidelines for AI Systems in Online Money-Making

The development and deployment of AI systems in the online money-making space should adhere to a set of clear ethical guidelines. These guidelines should be integrated throughout the entire AI lifecycle, from design and development to deployment and monitoring.

- Prioritize fairness and avoid discrimination: AI systems should be designed and trained to avoid perpetuating existing societal biases and should treat all users fairly, regardless of their background or characteristics.

- Ensure transparency and explainability: The decision-making processes of AI systems should be transparent and understandable to users and stakeholders. This allows for scrutiny and accountability.

- Protect user privacy and data security: AI systems should be designed and implemented with robust data protection measures to safeguard user privacy and prevent data breaches.

- Maintain accountability and responsibility: Clear lines of responsibility should be established for the actions and outcomes of AI systems. Mechanisms for redress should be in place in case of errors or harm.

- Promote human oversight and control: AI systems should be subject to appropriate levels of human oversight to ensure ethical and responsible use.

- Conduct thorough testing and validation: AI systems should be rigorously tested and validated to identify and mitigate potential biases and risks before deployment.

- Continuously monitor and improve: AI systems should be continuously monitored and improved to ensure their ongoing ethical and responsible operation.
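
Several of these guidelines (human oversight, accountability and redress) can be supported through the way decisions are routed and recorded. In the sketch below, only confident approvals are automated, and every adverse or uncertain case is sent to a human reviewer. Each decision is written to a log that a later appeal can reference. The confidence threshold, the field names and the in-memory `AUDIT_LOG` are hypothetical simplifications. A real system would persist the log and protect it from tampering.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    user_id: str
    model_version: str
    score: float
    outcome: str  # "approved" or "needs_human_review"
    timestamp: str


AUDIT_LOG: List[DecisionRecord] = []  # stand-in for durable, append-only storage


def route_decision(user_id: str, score: float, model_version: str,
                   approve_at: float = 0.7) -> DecisionRecord:
    """Automate only confident approvals; send everything else to a person."""
    # Hypothetical policy: the model may approve on its own, but any adverse or
    # uncertain outcome is reviewed by a human before it becomes final.
    outcome = "approved" if score >= approve_at else "needs_human_review"
    record = DecisionRecord(
        decision_id=uuid.uuid4().hex,
        user_id=user_id,
        model_version=model_version,
        score=score,
        outcome=outcome,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    AUDIT_LOG.append(record)
    return record


def find_decision(decision_id: str) -> DecisionRecord:
    """Look up the exact record an appeal refers to."""
    for record in AUDIT_LOG:
        if record.decision_id == decision_id:
            return record
    raise KeyError(decision_id)


a = route_decision("u1", 0.82, "v1.3.0")
b = route_decision("u2", 0.41, "v1.3.0")
print(a.outcome, b.outcome)  # approved needs_human_review
print(find_decision(b.decision_id).score)  # 0.41
```

Recording the model version alongside each score lets a reviewer handling an appeal see exactly which model produced the decision, even after the model has been updated.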

The Impact of AI on Job Displacement and Economic Inequality in Online Work

The rise of artificial intelligence (AI) in online platforms presents both opportunities and challenges for workers. While AI can automate tasks and increase efficiency, it also poses a significant threat to employment in certain online sectors, potentially exacerbating existing economic inequalities. This section explores the potential negative impacts of AI-driven automation on online work, strategies for mitigating job displacement, and the potential for AI both to worsen and to reduce economic disparities in online income generation.

AI-driven automation is rapidly transforming online work, affecting sectors such as content creation, customer service, and data entry. The potential for AI to replace human workers in these areas is substantial, leading to job losses and increased competition for the remaining positions. This displacement is likely to hit low-skilled workers hardest, as they may lack the training and resources to adapt to a changing job market.

Negative Impacts of AI on Online Employment

AI-powered tools, such as automated writing software and chatbots, can perform tasks previously done by human workers. This automation can lead to job losses in areas such as content writing, customer support, and data entry. The speed and efficiency of AI systems mean that they can often outperform humans in these repetitive tasks, resulting in reduced demand for human labor.

Furthermore, the increasing sophistication of AI algorithms allows for the automation of more complex tasks, expanding the potential for job displacement. This effect is particularly pronounced in online freelance marketplaces, where competition is already fierce. The resulting downward pressure on wages can further disadvantage workers, especially those in developing countries who rely on online work for income.

Mitigating Job Displacement Caused by AI in Online Work

Addressing the challenge of AI-driven job displacement requires a multi-pronged approach. Retraining and upskilling initiatives are crucial to equip workers with the skills needed for jobs that are less susceptible to automation. Focusing on skills like critical thinking, creativity, and complex problem-solving, which are difficult for AI to replicate, can help workers remain competitive. Government policies can play a vital role in supporting these initiatives, providing funding for training programs and offering incentives for businesses to invest in employee development.

Additionally, exploring alternative economic models, such as universal basic income, could provide a safety net for those displaced by automation. Furthermore, fostering collaboration between businesses, educational institutions, and government agencies is vital to develop effective strategies for workforce adaptation.

The Exacerbation of Economic Inequality by AI in Online Income Generation

AI has the potential to exacerbate existing economic inequalities in online work. The benefits of AI-driven automation are often concentrated among those who own or control the technology, leading to increased wealth concentration. Meanwhile, workers who are displaced by AI may struggle to find new employment, leading to increased income inequality. This disparity is particularly concerning in the gig economy, where workers often lack the benefits and protections afforded to traditional employees.

The lack of regulation and transparency in some online platforms can further disadvantage workers, making them vulnerable to exploitation and unfair practices. This unequal distribution of the benefits of AI highlights the need for policies that ensure a more equitable distribution of wealth and opportunity.

AI-Driven Creation of New Job Opportunities and Promotion of Economic Inclusion

While AI poses challenges, it also presents opportunities for creating new jobs and promoting economic inclusion in online work. AI can automate tedious tasks, freeing human workers to focus on more creative and strategic endeavors, which can lead to new roles requiring higher-level skills and expertise. AI can also widen access to online work for individuals in underserved communities. For example, AI-powered translation tools can help workers take on jobs from clients who do not speak their language.

| AI Application | New Job Role | Potential Impact on Inequality |
|---|---|---|
| AI-powered content creation tools | AI content editor/curator | Could reduce low-skill content creation jobs, but create higher-skill roles requiring oversight and refinement. Potential for increased inequality if access to training is unequal. |
| AI-driven personalized learning platforms | AI educational content developer/personal tutor | Could increase access to education and improve learning outcomes, potentially reducing inequality. However, could also displace traditional teachers if not managed carefully. |
| AI-powered translation services | AI translation specialist/quality control | Could open up online work opportunities to a wider global workforce, reducing inequality. However, requires careful consideration of language nuances and cultural contexts. |

Conclusion

The ethical landscape of AI-driven online money-making is complex and constantly evolving. While AI offers tremendous potential for economic empowerment, its deployment demands a cautious and ethically informed approach. Addressing concerns around data privacy, algorithmic bias, transparency, and job displacement is crucial for ensuring that AI benefits all stakeholders fairly and responsibly. By proactively establishing robust ethical guidelines and regulatory frameworks, we can harness the power of AI for positive economic impact while safeguarding against its potential harms.

The future of online finance hinges on our ability to navigate these ethical challenges effectively, ensuring a future where technology serves humanity, not the other way around.