The ethical considerations of using AI in the UI/UX design process are paramount. Integrating artificial intelligence into design work promises gains in efficiency and innovation, but it also raises crucial ethical questions. Algorithmic bias can perpetuate societal inequalities, data collection can erode privacy, and automation may displace human designers; navigating these complexities is vital for responsible and equitable design practices.

This exploration delves into the key ethical challenges and offers strategies for mitigating risks and fostering responsible AI implementation in UI/UX.

The increasing reliance on AI in UI/UX design necessitates a thorough examination of its ethical implications. This involves not only understanding potential biases embedded within algorithms but also addressing concerns surrounding data privacy, accessibility, transparency, and the evolving role of human designers. By proactively addressing these ethical considerations, we can harness the power of AI while ensuring its application benefits all users and promotes a more inclusive and equitable digital landscape.

Accessibility and Inclusivity

Artificial intelligence (AI) presents both significant opportunities and considerable challenges in the pursuit of accessible and inclusive user interface (UI) and user experience (UX) design. Its potential to automate tasks, analyze vast datasets, and personalize interactions offers unprecedented possibilities for improving digital accessibility for users with diverse needs and abilities. However, the ethical implications and potential for bias inherent in AI algorithms necessitate careful consideration and proactive mitigation strategies.

AI can significantly enhance accessibility by automating the creation of alternative text for images, generating captions for videos, and converting text to speech. For example, AI-powered tools can analyze images and draft descriptive alt text, helping visually impaired users who rely on screen readers understand the content. Similarly, AI can transcribe audio content far faster than manual transcription, making captions practical for material that would otherwise go uncaptioned, although automated output still benefits from human review. These functionalities remove significant barriers for users with visual or auditory impairments, fostering greater inclusivity.

AI-Driven Accessibility Enhancements

AI algorithms can analyze user behavior and preferences to personalize the user interface, adapting to individual needs and disabilities. For instance, AI can dynamically adjust font sizes, contrast levels, and color palettes based on user preferences or detected visual impairments. Moreover, AI can facilitate the creation of customized navigation systems for users with motor impairments, employing features like voice control or gesture recognition.

This level of personalization surpasses the capabilities of traditional design methods, leading to more inclusive and user-friendly interfaces.
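The contrast adjustment described above can be grounded in a measurable target. The sketch below computes the WCAG 2.x contrast ratio between two sRGB colors and picks a text color that meets the 4.5:1 level AA threshold for normal text. The helper name `pick_text_color` and the candidate list are illustrative assumptions, not part of any particular design tool.

```python
from __future__ import annotations


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an sRGB color, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) to 21.0 (black on white)."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def pick_text_color(bg, candidates, minimum=4.5):
    """Hypothetical helper: return the first candidate that meets `minimum`,
    falling back to the highest-contrast candidate if none does."""
    for color in candidates:
        if contrast_ratio(color, bg) >= minimum:
            return color
    return max(candidates, key=lambda color: contrast_ratio(color, bg))
```

A personalization layer could call `pick_text_color` whenever it changes a background, so that an automated palette change never pushes text below the chosen threshold.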

Challenges in Designing Inclusive Interfaces with AI

Despite its potential, utilizing AI in inclusive design presents several challenges. One major concern is the potential for algorithmic bias. If the training data used to develop AI models is not representative of the diverse population it is intended to serve, the resulting AI system may perpetuate existing biases, potentially excluding certain user groups. For example, an AI-powered facial recognition system trained primarily on images of light-skinned individuals may perform poorly when recognizing individuals with darker skin tones.

This bias can directly impact accessibility features designed to support diverse users. Furthermore, ensuring the transparency and explainability of AI-driven design decisions is crucial for building trust and addressing potential concerns. Users need to understand how and why the AI is making certain design choices.

Solutions to Challenges in AI-Driven Inclusive Design

Addressing these challenges requires a multi-faceted approach. Firstly, it’s crucial to use diverse and representative datasets when training AI models, which reduces the risk that the algorithms are biased toward specific demographics. Secondly, rigorous testing and validation of AI-driven design features with diverse user groups are essential to identify and rectify any unintended biases or limitations. Thirdly, employing human-in-the-loop approaches, where human designers work alongside AI tools, can help maintain control and oversight, ensuring ethical considerations are prioritized.

Finally, transparency and explainability are paramount. The design process should be documented, allowing users to understand how the AI contributed to the final design.
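One way to make the testing step above concrete is to compare a feature's accuracy across user groups and flag any group that lags behind. The following is a minimal sketch under stated assumptions: the record layout `(group, predicted, actual)` and the 0.1 gap threshold are illustrative choices, and a real evaluation would need adequate sample sizes per group.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def flag_disparity(accuracies, max_gap=0.1):
    """Return groups whose accuracy trails the best group by more than max_gap.

    max_gap is an assumed tolerance, not an established standard.
    """
    best = max(accuracies.values())
    return sorted(g for g, acc in accuracies.items() if best - acc > max_gap)


# Toy evaluation data: group label, model output, ground truth.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 1, 0),
    ("B", 1, 0), ("B", 0, 1), ("B", 1, 1), ("B", 0, 0),
]
accuracies = accuracy_by_group(records)
flagged = flag_disparity(accuracies)
```

In this toy data, group A scores 0.75 and group B scores 0.5, so B is flagged for follow-up with human reviewers.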

Example of an AI-Assisted Accessible Interface for Visually Impaired Users

Consider a news website designed with AI assistance for visually impaired users. The AI automatically generates detailed alt text for all images, including descriptions of charts and graphs. Headings are clearly structured using semantic HTML, allowing screen readers to navigate the content logically. The website offers multiple font sizes and customizable color contrast options. AI-powered text-to-speech functionality is seamlessly integrated, allowing users to listen to articles.

Navigation is simplified with a clear and concise sitemap, and keyboard navigation is fully implemented. The AI also analyzes user interactions to learn preferences and further personalize the experience, adjusting font sizes, speech rate, and other settings based on individual user behavior. Furthermore, the site uses ARIA attributes to provide rich contextual information to assistive technologies, ensuring a comprehensive and accessible experience.
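Generated alt text still has to reach the page, so a simple audit can complement the setup described above. This sketch uses Python's standard `html.parser` to list images that have no `alt` attribute at all. It is an assumption-laden minimum: it does not judge whether existing alt text is accurate, and it treats an empty `alt=""` as intentional, since that is the usual way to mark decorative images.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collects the src of every <img> tag that lacks an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # An empty alt is allowed (decorative image); only absence is flagged.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src", "<no src>"))


def images_missing_alt(html: str) -> list:
    """Return the src values of images without any alt attribute."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing


page = (
    '<h1>Markets</h1>'
    '<img src="chart.png" alt="Line chart of weekly sales">'
    '<img src="photo.png">'
    '<img src="divider.png" alt="">'
)
report = images_missing_alt(page)
```

Here `report` contains only `photo.png`, the one image a screen reader could not describe.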

Data Privacy and Security

The integration of AI into UI/UX design processes offers significant potential for personalization and optimization. However, this reliance on AI necessitates careful consideration of the ethical implications surrounding data privacy and security. The collection and use of user data, even for seemingly benign purposes like improving user experience, raises significant concerns regarding individual rights and the potential for misuse.

This section explores these concerns and outlines best practices for responsible data handling.

The ethical implications of collecting and using user data in AI-powered UI/UX design are multifaceted. AI algorithms often require large datasets to learn and improve, and these datasets frequently contain sensitive user information, including browsing history, preferences, and even personally identifiable details. The potential for data breaches, unauthorized access, or the unintended disclosure of private information presents a considerable risk.

Furthermore, the use of this data for targeted advertising or other commercial purposes without explicit consent raises serious ethical questions about user autonomy and control over their personal information. Concerns about algorithmic bias, where AI systems perpetuate or amplify existing societal biases based on the data they are trained on, further complicate the issue.

Data Privacy Concerns in AI-Driven UI/UX Design

The collection of user data for AI-powered UI/UX design raises several specific privacy concerns. For example, the use of eye-tracking technology to analyze user behavior on a website can reveal sensitive information about a user’s interests, cognitive abilities, or even health conditions. Similarly, the analysis of user input, such as text entered in forms or search queries, can expose personal beliefs, opinions, or even location data.

The potential for these data points to be aggregated and linked to other data sources to create detailed user profiles raises significant privacy concerns, especially when such profiles are used for purposes beyond improving the user experience. This potential for aggregation and linkage increases the risk of re-identification, even if individual data points are initially anonymized.

Best Practices for Protecting User Data and Ensuring Transparency

Protecting user data and ensuring transparency are crucial aspects of ethically responsible AI-driven UI/UX design. This requires implementing robust security measures, including data encryption, access control, and regular security audits. Organizations should adopt a privacy-by-design approach, embedding privacy considerations into every stage of the design and development process. Furthermore, obtaining informed consent from users before collecting and using their data is essential, with clear and concise explanations of how the data will be used and the measures taken to protect it.

Transparency in data usage is vital, and users should have the right to access, correct, or delete their data. Regularly publishing privacy policies and undergoing independent audits of data handling practices further enhances transparency and accountability. Implementing robust data minimization practices, only collecting the data absolutely necessary for the intended purpose, also mitigates risk.
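Consent and data minimization, as described above, can be enforced at the point where analytics events are recorded. The sketch below drops an event entirely when the user has not consented and otherwise keeps only allowlisted fields. The field names and the `minimize_event` function are hypothetical, chosen for illustration only.

```python
from typing import Optional

# Assumed allowlist: only the fields needed for the stated purpose.
ALLOWED_FIELDS = frozenset({"event", "screen", "timestamp"})


def minimize_event(event: dict, has_consent: bool,
                   allowed=ALLOWED_FIELDS) -> Optional[dict]:
    """Return a reduced copy of the event, or None if nothing may be stored."""
    if not has_consent:
        return None  # no consent: collect nothing
    return {key: value for key, value in event.items() if key in allowed}


raw = {
    "event": "click",
    "screen": "home",
    "timestamp": 1700000000,
    "email": "user@example.com",   # not needed for UX analysis
    "search_query": "symptoms",     # potentially sensitive free text
}
stored = minimize_event(raw, has_consent=True)
```

An allowlist fails safe: a new field added upstream is excluded until someone decides it is necessary, whereas a blocklist would silently let it through.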

Comparison of Anonymization Techniques for User Data

Effective anonymization techniques are critical for balancing the utility of user data for AI-driven design improvements with the need to protect user privacy. Several approaches exist, each with its own strengths and limitations:

- Data Masking: Replacing sensitive data elements with pseudonyms or randomized values. This preserves the structure of the data while removing identifying information. However, it may reduce the analytical value of the data for AI training.

- Generalization: Replacing specific values with broader categories. For example, replacing a user’s exact age with an age range. This approach preserves some utility while reducing the risk of re-identification, but the level of detail lost can affect the AI’s performance.

- Differential Privacy: Adding carefully calibrated noise to the data to prevent the identification of individual data points. This technique offers strong privacy guarantees but can impact the accuracy of AI models if not implemented correctly.

- Federated Learning: Training AI models on decentralized data without directly accessing the data itself. This approach keeps sensitive data on individual devices, reducing the risk of breaches and improving privacy protection. However, it can be computationally expensive and may require careful coordination between participating entities.

The choice of anonymization technique depends on the specific context and the sensitivity of the data. While complete anonymization is the ideal, it’s often challenging to achieve in practice. A layered approach, combining multiple techniques, is frequently more effective in minimizing the risk of re-identification.
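Three of the techniques above are small enough to sketch directly. The code below shows generalization of an age into a range, masking of a user ID with a keyed hash, and a count released with Laplace noise in the style of differential privacy. The function names, the 10-year bucket width, and the sensitivity of 1 are assumptions for illustration; a production system should rely on a vetted privacy library rather than this sketch. Note that a keyed hash is pseudonymization: anyone holding the key can still link records.

```python
import hashlib
import hmac
import random


def generalize_age(age: int, width: int = 10) -> str:
    """Generalization: replace an exact age with a range such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Masking: replace an identifier with a stable keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Differential-privacy style release: add Laplace noise to a count.

    Assumes each user changes the count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials is Laplace-distributed.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


record = {
    "user": pseudonymize("user-1234", b"demo-key-not-for-production"),
    "age_range": generalize_age(34),
}
released = noisy_count(true_count=120, epsilon=0.5, rng=random.Random())
```

A smaller `epsilon` means more noise and stronger privacy, which is the accuracy trade-off the list above mentions.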

Transparency and Explainability

Transparency in AI-driven UI/UX design is paramount for building trust and ensuring user acceptance. Users need to understand how AI influences their experience, fostering a sense of control and preventing potential biases or unexpected behaviors from undermining their interaction. A lack of transparency can lead to user frustration, distrust, and ultimately, rejection of the system. Therefore, designing for transparency is crucial for the success of any AI-powered UI/UX.

Users should be aware of the extent to which AI is shaping their interactions. This understanding isn’t necessarily about requiring users to grasp complex algorithms, but rather about providing clear and concise information about the AI’s role in the system. For instance, if an AI recommends products, users should know that it’s based on their past behavior and preferences, rather than believing the recommendations are purely objective. Providing this context helps manage user expectations and avoids the “black box” effect, where users feel manipulated by an unseen force.

Methods for Enhancing Transparency in AI-Powered Design Tools

Making the decision-making processes of AI-powered design tools transparent requires a multi-faceted approach. This involves not only informing designers about how the AI works but also providing mechanisms to understand and potentially override AI suggestions. This allows designers to maintain creative control while leveraging the efficiency of AI tools.

One approach is to provide designers with clear visualizations of the AI’s reasoning. For example, a design tool might highlight which elements of a user interface an AI has identified as potential points of improvement, along with a rationale for each suggestion. This could be displayed through color-coding, highlighting, or other visual cues that clearly indicate the AI’s influence without overwhelming the designer with technical details. Furthermore, tools could offer “explainability” features, allowing designers to drill down into the AI’s decision-making process to understand the underlying data and logic.

This empowers designers to assess the validity and appropriateness of AI suggestions before implementing them. Finally, providing designers with the option to accept, reject, or modify AI-generated suggestions maintains their control and agency within the design process.
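The accept, reject, or modify workflow described above maps naturally onto a small data structure. The sketch below is one possible shape, with hypothetical names throughout (`Suggestion`, `Decision`, `applicable`): every AI suggestion carries its rationale, starts as pending, and only takes effect after a named designer has reviewed it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Decision(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    REJECTED = "rejected"
    MODIFIED = "modified"


@dataclass
class Suggestion:
    element_id: str            # which UI element the AI flagged
    proposal: str              # what the AI suggests changing
    rationale: str             # why, in plain language shown to the designer
    decision: Decision = Decision.PENDING
    final_value: Optional[str] = None
    reviewer: Optional[str] = None

    def accept(self, reviewer: str) -> None:
        self.decision, self.final_value, self.reviewer = (
            Decision.ACCEPTED, self.proposal, reviewer)

    def reject(self, reviewer: str) -> None:
        self.decision, self.final_value, self.reviewer = (
            Decision.REJECTED, None, reviewer)

    def modify(self, reviewer: str, new_value: str) -> None:
        self.decision, self.final_value, self.reviewer = (
            Decision.MODIFIED, new_value, reviewer)


def applicable(suggestions: List[Suggestion]) -> List[Suggestion]:
    """Only human-reviewed suggestions are ever applied to the design."""
    return [s for s in suggestions
            if s.decision in (Decision.ACCEPTED, Decision.MODIFIED)]


queue = [
    Suggestion("cta-button", "Increase padding to 16px", "Tap target below 44px"),
    Suggestion("hero-text", "Shorten headline", "Low scroll depth on mobile"),
    Suggestion("nav-menu", "Collapse to icon", "Menu rarely opened"),
]
queue[0].accept("dana")
queue[1].modify("dana", "Shorten headline to two lines")
queue[2].reject("dana")
to_apply = applicable(queue)
```

Recording the reviewer alongside each decision also leaves a trail for later questions about who approved a given change.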

Communicating AI Use in User Interfaces

Designing a user interface that clearly communicates the use of AI, without relying on images, requires careful consideration of textual cues and contextual information. The goal is to inform users subtly but effectively, avoiding unnecessary technical jargon.

For example, a personalized news feed powered by AI could include a simple statement such as: “Your feed is personalized based on your interests.” Similarly, a chatbot could include a disclaimer like: “I am an AI assistant designed to help you.” These brief, yet informative, messages provide transparency without disrupting the user experience.

Another approach involves using subtle visual cues such as loading indicators with text. For example, while an AI is generating personalized content, a message like “Personalizing your recommendations…” could appear, making the AI’s activity clear. The key is to choose appropriate wording and placement that are unobtrusive yet readily apparent to the user. Avoid overly technical terms; prioritize clarity and user understanding.

Job Displacement and the Future of Work

The integration of AI into UI/UX design raises significant questions about the future of work in the field. While some fear widespread job displacement, a more nuanced perspective reveals a complex interplay between human creativity and AI’s analytical capabilities. The reality likely lies in a shift in roles and responsibilities, rather than complete automation.

AI tools are rapidly automating repetitive tasks, such as generating basic wireframes or performing A/B testing. This automation has the potential to displace designers focusing primarily on these tasks, particularly those lacking advanced skills. However, the core creative and strategic aspects of UI/UX design remain firmly in the human domain, at least for the foreseeable future. The challenge for designers lies in adapting to this evolving landscape and acquiring new skill sets to complement AI’s capabilities.

Impact of AI on UI/UX Designer Roles

AI is transforming the roles and responsibilities of UI/UX designers. Tasks previously handled manually, such as generating design variations or analyzing user data, can now be partially or fully automated. This shift necessitates a reassessment of the skills and expertise needed for success in the field. The demand for designers with highly specialized skills in areas like user research, interaction design, and complex system design is likely to increase, while the need for designers focused solely on repetitive tasks may decrease.

For example, a junior designer primarily focused on creating button variations might find their role significantly impacted, while a senior designer specializing in user experience architecture and information architecture will likely see a shift in their workflow rather than job displacement. The overall effect will likely be a restructuring of the design team, with a greater focus on strategic and specialized roles and a reduced need for people dedicated to repetitive production tasks.

Essential Skills for UI/UX Designers in the AI Era

The increasing use of AI in UI/UX design necessitates the development of new skills and capabilities. Designers must adapt to collaborate effectively with AI tools and leverage their potential to enhance their work.

The following skills will become increasingly crucial:

- AI Literacy: Understanding the capabilities and limitations of various AI tools, including their strengths and biases.

- Prompt Engineering: The ability to effectively communicate design requirements and constraints to AI tools to generate desired outputs. This requires a working understanding of how a given tool interprets instructions and how to refine prompts iteratively for better results.

- Data Analysis and Interpretation: The capacity to critically evaluate data generated by AI tools, such as user feedback and usage patterns, to inform design decisions. This involves understanding statistical methods and recognizing potential biases in the data.

- Human-Centered Design Expertise: Maintaining a strong focus on user needs and emotions, ensuring that AI-generated designs remain empathetic and inclusive. This skill is critical to prevent AI from overshadowing the human element of design.

- Ethical Considerations in AI Design: Understanding and applying ethical principles to the use of AI in design, ensuring fairness, transparency, and accountability in the design process.

Human-AI Collaboration in UI/UX Design

A scenario illustrating successful human-AI collaboration could involve a team designing a new mobile banking app. The AI could be used to generate multiple wireframe variations based on user data and design best practices. The human designers would then review these options, incorporating their creative vision and expertise in user experience to select the most promising designs. The AI could also assist with usability testing, analyzing user feedback and identifying areas for improvement.

The human designers would then refine the design based on the AI’s analysis, ensuring that the final product meets user needs and aligns with ethical considerations.

This collaborative approach would leverage the strengths of both humans and AI: the AI’s speed and efficiency in handling repetitive tasks, and the human’s creativity, critical thinking, and emotional intelligence in making strategic design decisions and ensuring a user-centric approach.

The benefits for designers include increased productivity and the ability to focus on higher-level tasks. For users, the outcome would be a more refined, user-friendly, and ethically sound product.

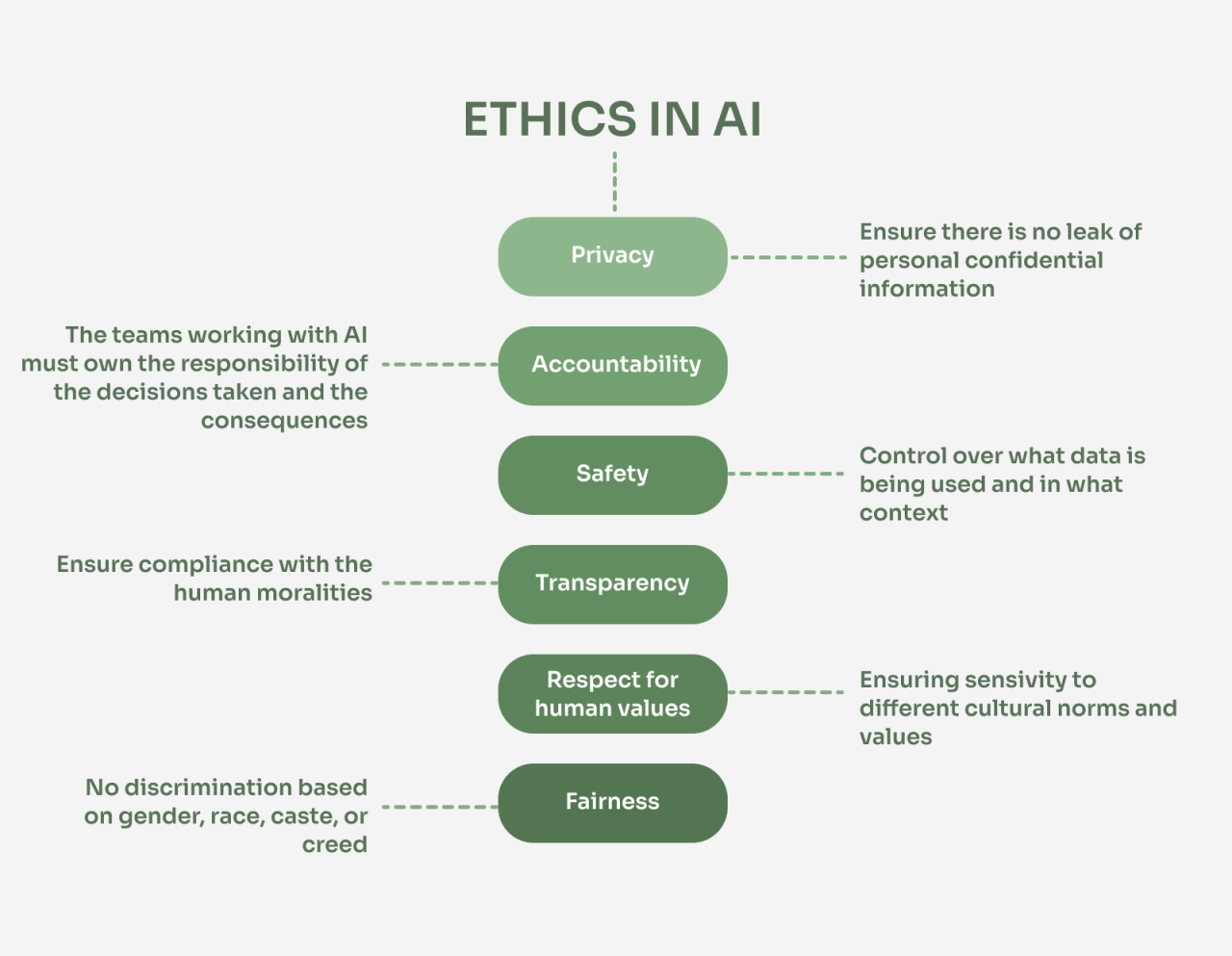

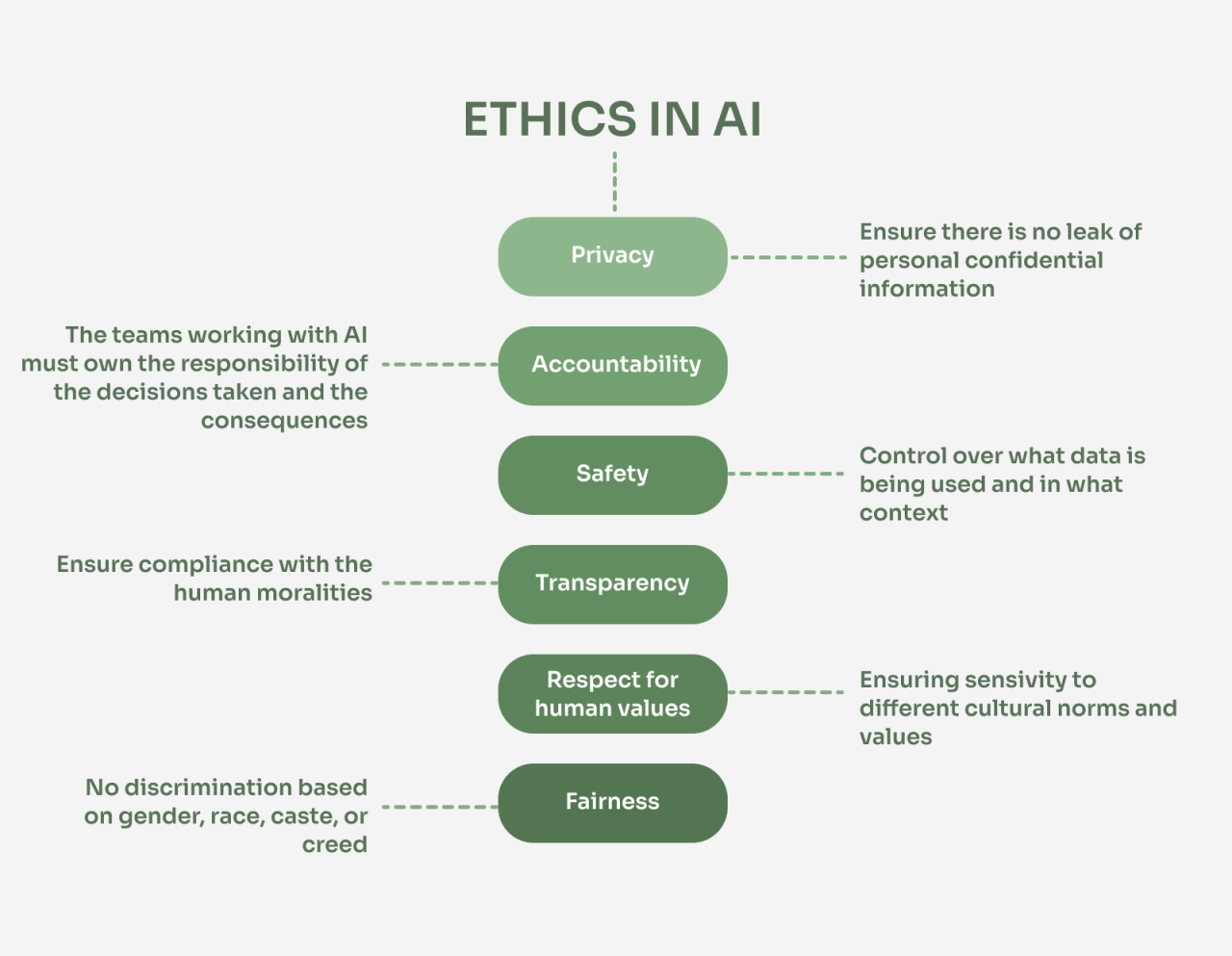

Responsibility and Accountability

The increasing integration of AI in UI/UX design raises crucial questions about responsibility and accountability when AI-driven designs lead to unintended negative consequences. Determining who should bear the consequences (the designers, the developers, the AI algorithm creators, or even the users themselves) is a complex ethical challenge with significant legal implications. A robust framework is needed to navigate this intricate landscape and ensure ethical and responsible development and deployment.

The assignment of accountability in AI-driven UI/UX design hinges on several factors, including the level of AI autonomy, the transparency of the design process, and the presence of human oversight. When AI acts as a mere tool under significant human control, the human designers and developers retain primary responsibility. However, as AI systems become more autonomous and their decision-making processes less transparent, the lines of accountability blur, demanding a more nuanced approach to ethical considerations. The potential for algorithmic bias, for example, further complicates this issue, highlighting the need for rigorous testing and mitigation strategies throughout the design process.

Establishing Ethical Guidelines and Best Practices

A comprehensive framework for ethical AI in UI/UX design requires a multi-faceted approach. This framework should prioritize transparency in the AI’s decision-making process, allowing for human review and intervention. Regular audits of AI systems are essential to identify and address potential biases or unintended consequences. Furthermore, clear lines of responsibility should be established from the initial stages of design to the deployment and ongoing monitoring of the AI-powered tools.

These guidelines should also incorporate principles of user privacy and data security, ensuring compliance with relevant regulations and best practices. Robust testing and validation procedures are crucial to mitigate risks and prevent negative impacts on user experience and well-being. Finally, continuous learning and adaptation are essential, given the rapidly evolving nature of AI technology. The framework must be dynamic and adaptable to address emerging challenges and ethical dilemmas.

A Hypothetical Legal Agreement Addressing Liability and Accountability

A hypothetical legal agreement addressing liability and accountability for AI-driven design decisions could incorporate several key clauses. Firstly, it should clearly define the roles and responsibilities of all parties involved, including the designers, developers, AI algorithm providers, and the client. This should delineate the level of AI autonomy in the design process and specify the points at which human oversight is required.

Secondly, the agreement should outline mechanisms for identifying and addressing unintended negative consequences, including a clear process for reporting and investigating incidents. Thirdly, it should establish a framework for determining liability in case of harm caused by the AI-driven design, specifying the extent of responsibility for each party involved. This could involve a tiered system of liability based on the level of contribution and control each party exerted over the AI system.

Finally, the agreement should include provisions for dispute resolution, such as arbitration or mediation, to avoid costly and time-consuming litigation. This agreement should also incorporate provisions for ongoing monitoring and updates to the AI system, ensuring its continued alignment with ethical guidelines and legal requirements. The agreement should explicitly state that all parties are bound by the principles of fairness, transparency, and accountability in the development and deployment of the AI-driven design.

Final Summary

Ultimately, the ethical use of AI in UI/UX design requires a collaborative effort. Designers, developers, policymakers, and users must work together to establish clear guidelines, promote transparency, and prioritize fairness and inclusivity. By embracing responsible AI practices, we can ensure that this powerful technology enhances the user experience while upholding the highest ethical standards. The future of UI/UX design lies in a harmonious integration of human creativity and artificial intelligence, guided by a strong ethical compass.