AI is changing how video is transcribed and subtitled. AI-powered tools are rapidly transforming how we create, access, and understand video content, offering faster turnaround and lower cost than manual methods, with accuracy that is often good enough for everyday use. This technology is no longer a futuristic dream; it’s a readily available solution impacting businesses, creators, and viewers alike. This exploration delves into the multifaceted ways AI enhances video transcription and subtitling, examining its benefits, limitations, and future potential.

From dramatically speeding up transcription processes to enabling multi-lingual subtitling with remarkable accuracy, AI offers a compelling alternative to traditional methods. We’ll explore how AI handles nuances like accents and complex terminology, analyze the cost-effectiveness compared to human transcriptionists, and discuss the crucial role it plays in enhancing accessibility and inclusivity for a broader audience. We’ll also address the inherent limitations and ethical considerations surrounding AI in this context, offering a balanced perspective on this rapidly evolving technology.

Accuracy and Speed of AI-Powered Transcription

AI-powered transcription services offer a significant leap forward in speed and efficiency compared to manual transcription, but accuracy remains a key consideration. The performance of these systems is influenced by a complex interplay of factors, including audio quality, language, and the sophistication of the underlying AI model. This section will explore these factors in detail, comparing AI transcription to human transcription across various scenarios.

Factors Influencing Accuracy of AI Transcription

The accuracy of AI transcription is highly dependent on the audio quality. Clear audio, free from background noise and interference, yields significantly better results. Conversely, noisy environments, such as crowded rooms or locations with significant ambient sound, present considerable challenges. Accents also impact accuracy; AI models trained primarily on standard accents may struggle with regional dialects or non-native speakers.

The presence of multiple speakers simultaneously can further reduce accuracy, as the AI may struggle to isolate and correctly attribute speech to individual speakers. Finally, the speech rate of the speaker influences accuracy; very fast or very slow speech can negatively affect transcription quality.

Speed Comparison: AI vs. Human Transcription

AI transcription significantly outpaces human transcription, especially for longer videos. For a short video (e.g., under 5 minutes), the difference might be minimal. However, for longer videos (e.g., 30 minutes or more), AI can complete the transcription in a matter of minutes, whereas a human transcriber might take several hours. The complexity of the audio also plays a role; videos with multiple speakers, background noise, or technical jargon will increase the time required for both AI and human transcription, although the AI’s speed advantage will likely remain.

For instance, a 60-minute interview with clear audio might be transcribed by AI in under 15 minutes, while a human transcriber could take 3-4 hours.

Error Rates in AI Transcription Across Models and Languages

Error rates vary considerably depending on the AI model and the language being transcribed. Generally, AI models trained on large datasets of high-quality audio in common languages (like English) exhibit lower error rates than those trained on smaller datasets or less common languages. While precise error rates are difficult to quantify universally due to variations in testing methodologies and datasets, studies have shown that leading AI transcription services achieve word error rates (WER) ranging from 2% to 10% in ideal conditions for common languages.

However, this rate can increase substantially under less-than-ideal conditions. For less commonly used languages, the WER can be significantly higher, sometimes exceeding 20%. For example, a state-of-the-art model might achieve a WER of 4% for English but a WER of 15% for a less-resourced language like Swahili.
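Word error rate is conventionally defined as the number of word substitutions, deletions, and insertions needed to turn the AI output into a reference transcript, divided by the number of words in the reference. The following is a minimal sketch of that calculation; the function name `word_error_rate` and the simple lowercase-and-split normalization are assumptions made for illustration, and published benchmarks usually apply more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            ))
        prev = curr
    return prev[-1] / len(ref)


# Two wrong words out of a nine-word reference.
wer = word_error_rate(
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jumped over a lazy dog",
)
```

Because insertions count as errors, WER can exceed 100% when the output contains many extra words.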

Handling Complex Terminology and Technical Jargon

AI models are increasingly capable of handling complex terminology and technical jargon. However, the accuracy depends on the model’s training data. Models trained on corpora containing technical vocabulary will perform better in transcribing videos with such language. For example, an AI model trained on medical transcripts will likely achieve higher accuracy when transcribing a medical lecture compared to a general-purpose model.

Some AI platforms offer customization options, allowing users to upload domain-specific vocabulary to improve accuracy. This is particularly useful for videos containing highly specialized terms, ensuring that the AI correctly identifies and transcribes niche vocabulary. For instance, a financial analyst could improve the accuracy of transcribing a financial conference by providing a list of key financial terms to the AI system.
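How a platform uses an uploaded vocabulary is specific to that platform and usually happens inside the recognizer. As a rough stand-in, the sketch below applies a glossary after transcription instead, replacing words that closely resemble a glossary term. This is a different, simpler technique than vocabulary customization, and the function name `apply_glossary` and the 0.85 similarity cutoff are assumptions chosen for illustration.

```python
import difflib
import re


def apply_glossary(transcript: str, glossary: list[str], cutoff: float = 0.85) -> str:
    """Replace words that closely match a glossary term with the canonical term."""
    canonical = {term.lower(): term for term in glossary}

    def fix(match: re.Match) -> str:
        word = match.group(0)
        close = difflib.get_close_matches(
            word.lower(), list(canonical), n=1, cutoff=cutoff
        )
        return canonical[close[0]] if close else word

    # Only alphabetic runs are examined, so punctuation and spacing are preserved.
    return re.sub(r"[A-Za-z]+", fix, transcript)


fixed = apply_glossary(
    "Strong ebitda growth, lower amortisation.",
    ["EBITDA", "amortization"],
)
```

A lower cutoff catches more misspellings but also risks rewriting ordinary words that merely resemble a term, so the output still needs review.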

AI’s Role in Subtitling and Captioning

AI is revolutionizing the subtitling and captioning industry, offering significant improvements in speed, accuracy, and accessibility. Its ability to process vast amounts of audio data and translate between numerous languages makes it an invaluable tool for content creators and distributors worldwide. This increased efficiency allows for wider reach and greater inclusivity of video content for a global audience.

AI-powered subtitling tools are transforming how we create and deliver accessible video content. These tools leverage sophisticated algorithms to transcribe audio, translate the transcription into multiple languages, and format the text for various platforms. The automation offered by these tools significantly reduces the time and cost associated with traditional subtitling methods, making high-quality subtitles more readily available.

Advantages of AI-Powered Multi-Language Subtitling

AI’s capacity for multi-language subtitling offers numerous advantages. The speed and efficiency of automated translation drastically reduce turnaround times, enabling faster content deployment across various international markets. Moreover, a single AI system tends to apply terminology and phrasing more consistently than several human translators working independently, although its output still benefits from review. This is particularly beneficial for large-scale projects requiring subtitles in many languages.

Furthermore, AI-powered tools often provide options for customization, allowing users to adjust the style and tone of the subtitles to better suit their target audience. For example, an AI tool might offer different translation styles for formal versus informal settings, ensuring the subtitles appropriately reflect the video’s context.

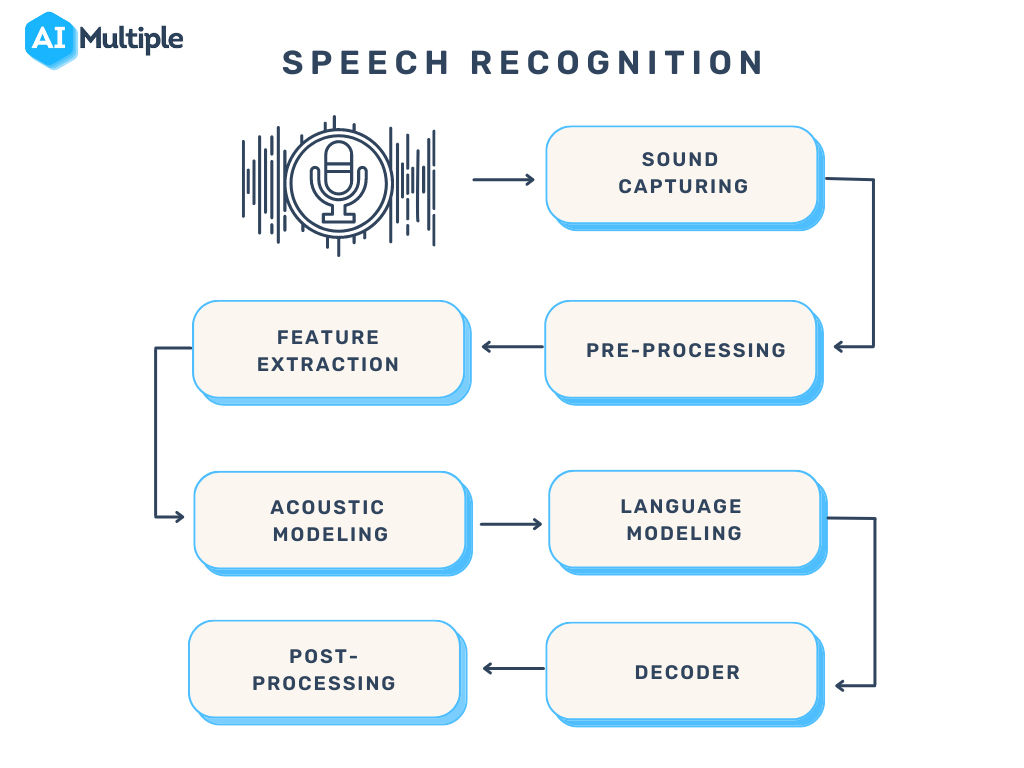

The Process of AI-Powered Subtitle Creation

AI-powered subtitle creation typically involves several key steps. First, a speech-to-text engine processes the video’s audio and converts it into a text transcription. Next, a machine translation algorithm translates the transcription into the desired target language(s). These algorithms are continuously refined, learning from massive datasets to improve accuracy and fluency.

Finally, the translated text is timestamped and formatted according to industry standards, ensuring proper synchronization with the video. This timestamping process precisely aligns each segment of text with the corresponding section of the audio, resulting in accurate and readable subtitles. The formatting ensures compatibility with various video players and platforms.
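The timestamping and formatting step can be sketched as follows for the SRT format, where each cue has a running number, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing line, and the text. The `Segment` type and the function names are hypothetical; the sketch assumes the speech-to-text engine has already supplied start and end times in seconds.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Render segments as numbered SRT cues separated by blank lines."""
    cues = []
    for number, seg in enumerate(segments, start=1):
        timing = f"{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}"
        cues.append(f"{number}\n{timing}\n{seg.text}\n")
    return "\n".join(cues)


srt_text = to_srt([
    Segment(0.0, 2.5, "Hello"),
    Segment(2.5, 5.0, "World"),
])
```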

Comparison of AI Subtitling Tools

Different AI subtitling tools offer varying functionalities, strengths, and weaknesses. Some platforms focus on speed and efficiency, while others prioritize accuracy and customization options. The choice of platform often depends on specific project needs and budget constraints. Features such as speaker identification, punctuation accuracy, and support for multiple audio channels can significantly influence the quality of the final subtitles.

Moreover, the availability of human review and editing options can be a critical factor for ensuring high accuracy, especially for complex audio or specialized terminology. Many platforms also offer integration with other video editing tools, streamlining the workflow and improving efficiency.

Comparison of Popular AI Subtitling Platforms

| Platform | Features | Pricing | User Reviews |
|---|---|---|---|
| Trint | High accuracy transcription, multi-language support, speaker identification, timestamping, various export formats. | Subscription-based, tiered pricing with varying features and transcription minutes. | Generally positive reviews, praising accuracy and ease of use. Some users mention higher pricing compared to competitors. |
| Descript | Transcription, translation, editing, collaboration tools, integrated audio and video editing capabilities. | Subscription-based, with different plans offering varying features and storage. | Positive reviews highlighting its versatile features and user-friendly interface. Some users find the learning curve steeper than other platforms. |
| Happy Scribe | Fast and accurate transcription, multi-language support, human review option, various file formats, and integrations. | Per-minute pricing, with discounts for bulk orders. | Generally positive feedback, with users appreciating the speed and accuracy. Some concerns about occasional inaccuracies in complex audio. |

Cost-Effectiveness of AI Transcription and Subtitling

The rising popularity of video content across various platforms necessitates efficient and affordable transcription and subtitling solutions. Traditional human-based methods, while offering high accuracy, can be prohibitively expensive, particularly for large volumes of video or projects with tight deadlines. AI-powered solutions present a compelling alternative, offering significant cost savings while maintaining acceptable accuracy levels for many applications. This section explores the cost-effectiveness of AI transcription and subtitling, comparing it to human-based methods and highlighting scenarios where AI provides a clear financial advantage.

AI-powered transcription and subtitling services typically charge per minute of audio or video, at prices significantly lower than those of human transcriptionists. Human transcription rates vary widely based on factors such as turnaround time, language, audio quality, and the complexity of the content. However, even the most affordable human transcriptionists often charge several times more per minute than AI services. This difference becomes particularly pronounced when dealing with extensive video libraries or large-scale projects.

Cost Comparison: AI vs. Human Transcription

A direct comparison shows the financial benefit of AI. Consider a hypothetical 60-minute video project. A human transcriptionist might charge $1.50-$3.00 per minute, for a total of $90-$180. In contrast, an AI-powered transcription service might cost $0.25-$1.00 per minute, for a total of $15-$60. The saving therefore ranges from about 33% (the cheapest human rate against the most expensive AI rate) to about 92% (the most expensive human rate against the cheapest AI rate), depending on the providers chosen.

Because both options are billed per minute, the percentage saving is the same at any length, while the absolute saving grows with longer videos.
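The arithmetic behind this comparison can be checked with a short sketch. The function names are illustrative, and the rates are the hypothetical per-minute figures quoted above rather than real price lists. The smallest saving pairs the cheapest human rate with the most expensive AI rate, and the largest saving pairs the opposite ends.

```python
def saving_percent(human_cost: float, ai_cost: float) -> float:
    """Saving from choosing AI over human transcription, as a percentage."""
    return (human_cost - ai_cost) / human_cost * 100


def saving_range(minutes, human_rates, ai_rates):
    """Return (smallest, largest) percentage saving for per-minute rate ranges."""
    human_low, human_high = (rate * minutes for rate in human_rates)
    ai_low, ai_high = (rate * minutes for rate in ai_rates)
    smallest = saving_percent(human_low, ai_high)  # cheapest human vs priciest AI
    largest = saving_percent(human_high, ai_low)   # priciest human vs cheapest AI
    return smallest, largest


smallest, largest = saving_range(60, (1.50, 3.00), (0.25, 1.00))
print(f"{smallest:.0f}% to {largest:.0f}%")  # 33% to 92%
```

Since both sides scale with the number of minutes, the percentages do not change with video length; only the dollar amounts do.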

Scenarios Favoring AI-Based Solutions

AI transcription excels in scenarios requiring rapid turnaround times or high-volume processing. For example, businesses generating daily video content, such as news outlets or educational institutions, can significantly reduce their operational costs by leveraging AI. Similarly, companies with large archives of video content needing transcription for search optimization or accessibility purposes can benefit greatly from AI’s speed and scalability.

Moreover, AI is particularly cost-effective for projects with less stringent accuracy requirements, such as internal training videos or social media content.

Real-World Examples of AI Implementation

Several businesses have integrated AI-powered transcription and subtitling into their workflows. Large streaming services such as Netflix are reported to use AI to help generate subtitles and captions in multiple languages, reducing the costs associated with human translation and subtitling. Similarly, many educational institutions employ AI to create transcripts for online lectures, making the content more accessible to a wider audience and saving significant costs compared to manual transcription.

YouTube also leverages AI-powered automatic captioning, offering a cost-effective solution for content creators.

Cost-Benefit Analysis: Hypothetical Video Project

Consider a hypothetical corporate training video, 30 minutes long, requiring accurate transcription for internal use.

| Method | Cost per Minute | Total Cost | Accuracy | Turnaround Time |
|---|---|---|---|---|
| Human Transcription | $2.00 | $60 | 99%+ | 2-3 days |
| AI Transcription (with human review) | $0.50 (AI) + $0.50 (Review) = $1.00 | $30 | 95%+ | 1 day |

While human transcription offers higher accuracy, the AI-based solution with a human review step provides a 50% cost reduction while maintaining acceptable accuracy for internal training purposes. The faster turnaround time is an added benefit. The optimal solution depends on the specific project requirements and budget constraints.

Accessibility and Inclusivity through AI

AI-powered transcription and subtitling significantly enhance the accessibility and inclusivity of video content, breaking down barriers for individuals with diverse needs and backgrounds. By automating the creation of accurate and timely transcripts and subtitles, AI democratizes access to information and entertainment, fostering a more equitable digital landscape. This technology’s impact extends beyond simply providing text; it actively promotes broader participation and understanding.

AI’s contribution to video accessibility is particularly impactful for people with hearing impairments. Accurate and reliable subtitles are crucial for ensuring that individuals with hearing loss can fully engage with video content. AI streamlines this process, making it faster and more affordable than traditional methods, thereby expanding the reach of videos to a much wider audience. This includes educational videos, news broadcasts, and entertainment programming, all now more readily available to a significant portion of the population who would otherwise be excluded.

AI’s Role in Improving Accessibility for the Hearing Impaired

AI-powered transcription services can provide real-time captions for live streams and generate captions for pre-recorded videos. This helps individuals with hearing impairments follow the audio content. Furthermore, the ability to generate subtitles in multiple languages through AI expands accessibility beyond geographical limitations, allowing individuals from different linguistic backgrounds to enjoy the same content. Consider the example of a TED Talk: AI-generated subtitles in multiple languages allow viewers worldwide, regardless of their hearing ability or native language, to access and benefit from the speaker’s insights.

This surpasses the limitations of traditional methods, which often lack the speed, accuracy, and multilingual capabilities offered by AI.

AI’s Contribution to Inclusive Video Content

AI goes beyond simple transcription; it contributes to a more inclusive viewing experience by facilitating the creation of diverse and accessible video content. For instance, AI can be used to generate descriptive audio for visually impaired individuals, painting a vivid picture of the on-screen action. Furthermore, AI-powered translation tools can automatically generate subtitles in multiple languages, making videos accessible to a global audience.

This significantly broadens the reach and impact of the video content, fostering cross-cultural understanding and engagement. Imagine a documentary about a historical event: AI-generated subtitles in multiple languages make this valuable educational resource accessible to diverse audiences around the world, enriching their understanding of history.

Enhancing the Viewing Experience for Diverse Linguistic Backgrounds

AI’s ability to translate and transcribe audio in multiple languages opens up a world of possibilities for individuals with diverse linguistic backgrounds. AI-powered tools can accurately generate subtitles in various languages, making video content understandable and enjoyable for a global audience. For example, a cooking tutorial in English can be easily translated and subtitled in Spanish, French, and Mandarin, allowing individuals from different language backgrounds to learn new recipes.

This not only promotes cultural exchange but also empowers individuals to learn and participate in activities they might otherwise miss out on due to language barriers. This inclusivity extends to the creation of multilingual subtitles, allowing for greater audience engagement and broader cultural understanding.

Best Practices for Accurate and Accessible AI-Generated Subtitles

Creating accurate and accessible AI-generated subtitles requires careful consideration of several factors. It is crucial to select a high-quality AI transcription service known for its accuracy and reliability. Post-editing by a human is also essential to ensure the accuracy and clarity of the subtitles. This involves reviewing the AI-generated subtitles for any errors or omissions and making necessary corrections.

Furthermore, it is crucial to adhere to accessibility guidelines, such as using clear and concise language, avoiding overly complex sentence structures, and ensuring that the subtitles are properly synchronized with the audio. Consistent formatting and font selection are also important for optimal readability. Finally, regular testing and feedback mechanisms are vital to ensure the ongoing accuracy and effectiveness of the AI-generated subtitles.

This iterative approach ensures that the subtitles are accessible to the widest possible audience and provide an enjoyable viewing experience.
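Some of these readability checks can be automated before human review. The sketch below flags cues whose lines are too long or whose text must be read too quickly. The limits of 42 characters per line and 17 characters per second are assumed defaults based on commonly used subtitling conventions, not requirements stated in this article, and the function name is hypothetical.

```python
def readability_issues(
    text: str,
    start: float,
    end: float,
    max_chars_per_line: int = 42,         # assumed limit, adjust per style guide
    max_chars_per_second: float = 17.0,   # assumed limit, adjust per audience
) -> list[str]:
    """Return a list of readability problems for one subtitle cue."""
    duration = end - start
    if duration <= 0:
        return ["cue has no positive duration"]

    issues = []
    for line in text.splitlines():
        if len(line) > max_chars_per_line:
            issues.append(f"line longer than {max_chars_per_line} characters")

    visible_chars = len(text.replace("\n", ""))
    if visible_chars / duration > max_chars_per_second:
        issues.append("reading speed above the configured limit")
    return issues


ok = readability_issues("Hello there", 0.0, 2.0)
too_fast = readability_issues("This is a fairly long caption line", 0.0, 1.0)
```

An empty list means the cue passed both checks; anything else is a prompt for an editor to split, shorten, or retime the cue.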

AI’s Impact on Video Editing Workflow

AI-powered transcription and subtitling tools are revolutionizing video editing workflows, significantly reducing post-production time and enhancing efficiency. By seamlessly integrating with existing video editing software, these tools automate previously manual and time-consuming tasks, allowing editors to focus on creative aspects of their projects. This integration leads to faster turnaround times, increased productivity, and ultimately, higher-quality video productions.

AI transcription software directly impacts video editing by providing accurate text representations of audio and video content. This text is then readily usable for creating subtitles, captions, and even searchable indexes within the video editing platform. The automated nature of this process minimizes the need for manual transcription, a task that is notoriously laborious and prone to errors. The speed and accuracy gains are substantial, leading to considerable cost savings and improved workflow efficiency.

Integration of AI Transcription with Video Editing Software

Many popular video editing suites now offer direct integration with AI transcription services or have APIs that allow for seamless data transfer. For example, imagine a scenario where a video editor imports a raw video file into Adobe Premiere Pro. Through a plugin or integrated feature, the editor can initiate an AI-powered transcription. Once the transcription is complete, the editor can directly import the generated text file into the Premiere Pro timeline, creating timed subtitles or captions with minimal additional effort.

This streamlined process eliminates the need to export audio files to a separate transcription service, manually edit the transcription, and then re-import it into the editing software. The time saved can be substantial, especially for longer videos.

Streamlining Subtitle and Caption Creation

AI significantly streamlines subtitle and caption creation. After transcription, the AI can often automatically generate timed subtitles based on the identified timestamps within the transcript. While some manual review and adjustment may be necessary to ensure accuracy and stylistic consistency, the automated process drastically reduces the time required compared to manual creation. Furthermore, AI can assist with formatting subtitles to comply with various standards (e.g., SRT, VTT), simplifying the export and distribution process.

For example, a video editor working on a YouTube video can leverage AI to create captions that are directly compatible with YouTube’s platform, eliminating additional steps in the publishing process.
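SRT and WebVTT (VTT) are close enough that a basic conversion is mostly mechanical: a VTT file starts with a `WEBVTT` header line, and its timestamps use a period instead of a comma before the milliseconds. The following sketch covers only that basic case; the function name is illustrative, and styling or positioning features of either format are not handled.

```python
import re

# Matches an SRT timestamp such as 00:01:02,500 and captures both parts.
_SRT_TIME = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Convert basic SRT subtitle text to WebVTT."""
    lines = []
    for line in srt_text.strip().splitlines():
        # Only timing lines are rewritten, so cue text is left untouched.
        if "-->" in line:
            line = _SRT_TIME.sub(r"\1.\2", line)
        lines.append(line)
    return "WEBVTT\n\n" + "\n".join(lines) + "\n"


vtt_text = srt_to_vtt(
    "1\n00:00:00,000 --> 00:00:02,500\nHello\n\n"
    "2\n00:00:02,500 --> 00:00:05,000\nWorld\n"
)
```

The SRT cue numbers are kept, since WebVTT allows an optional identifier line before each cue's timing.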

Time Saved and Efficiency Gains

The time saved using AI for transcription and subtitling in post-production is considerable. Manual transcription of a one-hour video can take several hours, even for experienced transcribers. AI can complete the same task in minutes, depending on the complexity of the audio and the chosen AI service. This reduction in transcription time translates directly to faster project completion, allowing editors to manage more projects within the same timeframe or allocate more time to creative aspects of the editing process.

For instance, a video production company might reduce their post-production time by 50% or more by implementing AI-powered transcription and subtitling tools. This increased efficiency leads to significant cost savings and improved profitability.

Step-by-Step Guide: Using AI for Transcription and Subtitling in Video Editing

The exact steps may vary depending on the video editing software and AI transcription service used. However, a general workflow might look like this:

1. Import Video

Import the video file into your chosen video editing software (e.g., Adobe Premiere Pro, Final Cut Pro, DaVinci Resolve).

2. Initiate Transcription

Utilize the integrated AI transcription feature or a compatible plugin to initiate the transcription process. Specify the language and any desired audio settings (e.g., speaker identification).

3. Review Transcription

Once the transcription is complete, carefully review the generated text for accuracy. Correct any errors or inconsistencies.

4. Create Subtitles/Captions

Use the editing software’s tools to create timed subtitles or captions using the reviewed transcription. Many software packages offer automated timestamping features that simplify this process.

5. Export and Review

Export the subtitles or captions in the desired format (e.g., SRT, VTT) and review the final output within the video player to ensure accuracy and timing.
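Part of the final timing review in step 5 can be scripted. As a minimal sketch, assuming the export is an SRT file with standard `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing lines, the hypothetical function below reports cues that end before they start or that overlap the previous cue. It does not replace watching the result in a video player.

```python
import re

_TIMING = re.compile(
    r"(\d{2}):(\d{2}):(\d{2}),(\d{3}) --> (\d{2}):(\d{2}):(\d{2}),(\d{3})"
)


def _to_ms(hours: str, minutes: str, seconds: str, millis: str) -> int:
    """Convert timestamp parts to milliseconds."""
    return ((int(hours) * 60 + int(minutes)) * 60 + int(seconds)) * 1000 + int(millis)


def timing_problems(srt_text: str) -> list[str]:
    """List cues with non-positive duration or overlap with an earlier cue."""
    problems = []
    previous_end = 0
    cue_number = 0
    for line in srt_text.splitlines():
        match = _TIMING.search(line)
        if not match:
            continue  # cue numbers, text, and blank lines are skipped
        cue_number += 1
        parts = match.groups()
        start, end = _to_ms(*parts[:4]), _to_ms(*parts[4:])
        if end <= start:
            problems.append(f"cue {cue_number}: end is not after start")
        if start < previous_end:
            problems.append(f"cue {cue_number}: overlaps the previous cue")
        previous_end = max(previous_end, end)
    return problems


overlapping = timing_problems(
    "1\n00:00:00,000 --> 00:00:02,500\nHello\n\n"
    "2\n00:00:02,000 --> 00:00:05,000\nWorld\n"
)
```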

Limitations and Challenges of AI in Video Transcription and Subtitling

While AI has revolutionized video transcription and subtitling, offering significant improvements in speed and cost-effectiveness, it’s crucial to acknowledge its inherent limitations and the ongoing challenges in achieving perfect accuracy and seamless integration. Current AI systems still struggle with certain audio characteristics and nuanced linguistic features, requiring human oversight to ensure high-quality results.

Complex Audio Scenarios

AI transcription accuracy significantly diminishes when confronted with complex audio scenarios. Background noise, multiple speakers talking simultaneously (overlapping speech), accents, poor audio quality (low bitrate, distortion), and the presence of music or other interfering sounds all pose significant challenges. For instance, a bustling marketplace scene in a documentary might produce a transcript riddled with errors due to the AI’s difficulty in isolating individual voices and filtering out ambient noise.

Similarly, strong regional accents or non-native speakers can lead to misinterpretations and inaccurate word choices. These limitations highlight the need for sophisticated algorithms capable of better handling acoustic variability and speaker diarization.

Maintaining Accuracy and Consistency Across Languages

Ensuring consistent accuracy and quality across multiple languages presents a formidable challenge. AI models are trained on vast datasets, but the availability of high-quality, annotated data varies significantly between languages. This disparity in data quantity and quality directly impacts the performance of AI transcription and subtitling systems. Languages with complex grammatical structures, rich morphology, or less digitized content often suffer from lower accuracy rates.

For example, accurately transcribing a video in a low-resource language like Quechua might require substantially more human intervention compared to transcribing the same video in English. Moreover, maintaining consistency in terminology and style across different translations necessitates careful human review and editing.

Ethical Considerations and Data Privacy

The use of AI in transcription and subtitling raises significant ethical concerns, particularly regarding data privacy. AI models are trained on vast amounts of audio and video data, often including sensitive information. Ensuring the privacy and security of this data is paramount, requiring robust data anonymization techniques and adherence to strict data protection regulations like GDPR. Furthermore, the potential for bias in AI models trained on biased datasets needs careful consideration.

This bias can manifest in inaccurate or unfair representations of certain groups or individuals, highlighting the need for responsible AI development and deployment practices. The potential for misuse, such as unauthorized recording and transcription of conversations, also needs careful ethical consideration.

Situations Requiring Human Intervention

Despite advancements in AI, human intervention remains crucial in several scenarios. Highly technical or specialized terminology, nuanced linguistic features (sarcasm, humor), emotionally charged speech, and the need for stylistic adjustments all necessitate human expertise. For example, a medical lecture might require a human expert to ensure the accuracy of technical terms and concepts. Similarly, subtitling a comedic video requires a human to accurately convey the intended humor and tone.

In cases where high accuracy and nuanced understanding are paramount, human review and editing are indispensable. The final quality control step, involving a human reviewing the AI-generated output, remains a critical component of the process, ensuring the accuracy and quality of the final product.

Future Trends in AI-Powered Video Transcription and Subtitling

The field of AI-powered video transcription and subtitling is rapidly evolving, driven by advancements in machine learning and natural language processing. We can expect significant improvements in accuracy, efficiency, and accessibility in the coming years, transforming how we create and consume video content. This section explores the key trends shaping the future of this technology.

Several factors contribute to the exciting possibilities ahead. The ever-increasing computational power available, coupled with the development of more sophisticated algorithms, allows for more nuanced processing of audio and video data. This, combined with the growing availability of large, high-quality datasets for training AI models, promises to significantly enhance the performance of these systems.

Advancements in AI Technology for Improved Accuracy and Efficiency

Ongoing research in deep learning, particularly in areas like recurrent neural networks (RNNs) and transformers, is leading to more accurate and efficient transcription models. These models are becoming better at handling complex audio scenarios, including overlapping speech, background noise, and various accents. Furthermore, advancements in speaker diarization – the ability to identify and separate different speakers in a conversation – will improve the accuracy of transcriptions, particularly in multi-person videos.

For example, the integration of contextual understanding into AI models allows for better disambiguation of homophones and improved grammatical accuracy. This moves beyond simple word-for-word transcription towards a more semantically rich understanding of the content.

AI-Driven Automation of Video Accessibility

The ultimate goal is to automate the entire video accessibility pipeline. This means AI systems will not only transcribe audio but also generate accurate and compliant captions and subtitles, potentially even translating them into multiple languages simultaneously. This complete automation will significantly reduce the time and cost associated with making videos accessible to a wider audience. Imagine a future where uploading a video automatically triggers the generation of accurate transcripts, subtitles in multiple languages, and even descriptive audio for visually impaired users—all within minutes.

Companies like Google Cloud and Amazon Web Services are already making strides in this direction with their automated captioning services, although full automation with perfect accuracy remains a goal.

Emerging Technologies Revolutionizing Video Transcription and Subtitling

Several emerging technologies promise to revolutionize the field. One example is the use of advanced speech recognition models that leverage both acoustic and linguistic features to improve accuracy, especially in noisy environments. Another is the application of computer vision to analyze visual cues in videos, providing context that can enhance the accuracy of transcriptions and the generation of descriptive video captions.

Furthermore, the development of more robust and efficient multilingual models will facilitate the creation of subtitles and captions in a wider range of languages, making video content accessible to a truly global audience. For instance, the development of real-time transcription and translation systems, powered by advancements in edge computing, will allow for immediate accessibility during live broadcasts or video conferences.

Future Trends and Predictions

The following points summarize the key trends and predictions for AI-powered video transcription and subtitling in the near future:

- Increased Accuracy and Speed: AI models will continue to improve in accuracy and speed, handling increasingly complex audio and video scenarios with minimal human intervention.

- Enhanced Multilingual Support: AI will power real-time translation and subtitling in a wide array of languages, breaking down language barriers in video content.

- Complete Automation of Accessibility: The entire process of creating accessible videos, from transcription to captioning and translation, will become fully automated.

- Integration with Video Editing Workflows: AI-powered transcription and subtitling tools will seamlessly integrate with existing video editing software, streamlining the production process.

- Rise of Personalized Subtitles: AI could tailor subtitles based on individual user preferences, such as language, reading speed, and font size.

- Improved Handling of Noisy Environments: AI models will become increasingly adept at filtering out background noise and accurately transcribing speech in challenging acoustic conditions.

- Advanced Speaker Diarization: More precise identification and separation of individual speakers in conversations will enhance transcription accuracy.

Final Summary

The integration of AI in video transcription and subtitling represents a significant leap forward, democratizing access to video content and streamlining workflows for creators. While challenges remain, the ongoing advancements in AI promise even greater accuracy, efficiency, and affordability in the future. By understanding both the capabilities and limitations of this technology, businesses and individuals can leverage AI to enhance their video content and reach a wider audience more effectively.

The future of video accessibility and content creation is undeniably intertwined with the continued development and refinement of AI-powered solutions.